In this NVIDIA vGPU Troubleshooting Guide, I’ll help show you how to troubleshoot NVIDIA vGPU issues on VMware platforms, including VMware Horizon and VMware Tanzu. This guide applies to the full vGPU platform, so it’s relevant for VDI, AI, ML, and Kubernetes workloads, as well other virtualization platforms.

This guide will provide common troubleshooting methods, along with common issues and problems associated with NVIDIA vGPU as well as their fixes.

Please note, there are numerous other additional methods available to troubleshoot your NVIDIA vGPU deployment, including 3rd party tools. This is a general document provided as a means to get started learning how to troubleshoot vGPU.

NVIDIA vGPU

NVIDIA vGPU is a technology platform that includes a product line of GPUs that provide virtualized GPUs (vGPU) for Virtualization environments. Using a vGPU, you can essentially “slice” up a physical GPU and distribute Virtual GPUs to a number of Virtual Machines and/or Kubernetes containers.

These virtual machines and containers can then use these vGPU’s to provide accelerated workloads including VDI (Virtual Desktop Infrastructure), AI (Artificial Intelligence), and ML (Machine Learning).

While the solution works beautifully, when deployed incorrectly or if the solution isn’t maintained, issues can occur requiring troubleshooting and remediation.

At the end of this blog post, you’ll find some additional (external) links and resources, which will assist further in troubleshooting.

Troubleshooting

Below, you’ll find a list of my most commonly used troubleshooting methods.

Please click on an item below which will take you directly to the section in this post.

- Using “nvidia-smi”

- Virtual Machine log files

- ESXi Host log files

- Using “dxdiag” in the guest VM

- Using the “VMware Horizon Performance Monitor”

- NVIDIA vGPU Known Issues

Common Problems

Below is a list of problems and issues I commonly see customers experience or struggle with in their vGPU enabled VMware environments.

Please click on an item below which will take you directly to the section in this post.

- XID Error Codes

- vGPU Licensing

- vGPU Type (vGPU Profile) mismatch

- vGPU or Passthrough with 16GB+ of Video RAM Memory

- vGPU VM Freezes during VMware vMotion

- “ERR!” State

- vGPU Driver Mismatch

- Upgrading NVIDIA vGPU

- VMware Horizon Black Screen

- VM High CPU RDY (CPU Ready)

- vGPU Profiles Missing from VMware Horizon

- Issues with the VMware Horizon Indirect Display Driver

vGPU Troubleshooting

Using “nvidia-smi”

The NVIDIA vGPU driver comes with a utility called the “NVIDIA System Management Interface”. This CLI program allows you to monitor, manage, and query your NVIDIA vGPU (including non-vGPU GPUs).

Simply running the command with no switches or flags, allow you to query and pull basic information on your vGPU, or multiple vGPUs.

For a list of available switches, you can run: “nvidia-smi -h”.

Running “nvidia-smi” on the ESXi Host

To use “nvidia-smi” on your VMware ESXi host, you’ll need to SSH in and/or enable console access.

When you launch “nvidia-smi” on the ESXi host, you’ll see information on the physical GPU, as well as the VM instances that are consuming a virtual GPU (vGPU). This usage will also provide information like fan speeds, temperatures, power usage and GPU utilization.

[root@ESXi-Host:~] nvidia-smi

Sat Mar 4 21:26:05 2023

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 525.85.07 Driver Version: 525.85.07 CUDA Version: N/A |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA A2 On | 00000000:04:00.0 Off | Off |

| 0% 36C P8 8W / 60W | 7808MiB / 16380MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| 0 N/A N/A 2108966 C+G VM-WS02 3904MiB |

| 0 N/A N/A 2108989 C+G VM-WS01 3904MiB |

+-----------------------------------------------------------------------------+This will aid with troubleshooting potential issues specific to the host or the VM. The following pieces of information are helpful:

- Driver Version

- GPU Fan and Temperature Information

- Power Usage

- GPU Utilization (GPU-Util)

- ECC Information and Error Count

- Virtual Machine VMs assigned a vGPU

- vGPU Type (C+G means Compute and Graphics)

Additionally, instead of running once, you can issue “nvidia-smi -l x” replacing “x” with the number of seconds you’d like it to auto-loop and refresh.

Example:

nvidia-smi -l 3The above would refresh and loop “nvidia-smi” every 3 seconds.

For vGPU specific information from the ESXi host, you can run:

nvidia-smi vgpuroot@ESXi-Host:~] nvidia-smi vgpu

Mon Mar 6 11:47:44 2023

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 525.85.07 Driver Version: 525.85.07 |

|---------------------------------+------------------------------+------------+

| GPU Name | Bus-Id | GPU-Util |

| vGPU ID Name | VM ID VM Name | vGPU-Util |

|=================================+==============================+============|

| 0 NVIDIA A2 | 00000000:04:00.0 | 0% |

| 3251713382 NVIDIA A2-4Q | 2321577 VMWS01 | 0% |

+---------------------------------+------------------------------+------------+This command shows information on the vGPU instances currently provisioned.

There are also a number of switches you can throw at this to get even more information on vGPU including scheduling, vGPU types, accounting, and more. Run the following command to view the switches:

nvidia-smi vgpu -hAnother common switch I use on the ESXi host with vGPU for troubleshooting is: “nvidia-smi -q”, which provides lots of information on the physical GPU in the host:

[root@ESXi-HOST:~] nvidia-smi -q

==============NVSMI LOG==============

Timestamp : Sat Mar 4 21:26:18 2023

Driver Version : 525.85.07

CUDA Version : Not Found

vGPU Driver Capability

Heterogenous Multi-vGPU : Supported

Attached GPUs : 1

GPU 00000000:04:00.0

Product Name : NVIDIA A2

Product Brand : NVIDIA

Product Architecture : Ampere

Display Mode : Enabled

Display Active : Disabled

Persistence Mode : Enabled

vGPU Device Capability

Fractional Multi-vGPU : Not Supported

Heterogeneous Time-Slice Profiles : Supported

Heterogeneous Time-Slice Sizes : Not Supported

MIG Mode

Current : N/A

Pending : N/A

Accounting Mode : Enabled

Accounting Mode Buffer Size : 4000

Driver Model

Current : N/A

Pending : N/A

Serial Number : XXXN0TY0SERIALZXXX

GPU UUID : GPU-de23234-3450-6456-e12d-bfekgje82743a

Minor Number : 0

VBIOS Version : 94.07.5B.00.92

MultiGPU Board : No

Board ID : 0x400

Board Part Number : XXX-XXXXX-XXXX-XXX

GPU Part Number : XXXX-XXX-XX

Module ID : 1

Inforom Version

Image Version : G179.0220.00.01

OEM Object : 2.0

ECC Object : 6.16

Power Management Object : N/A

GPU Operation Mode

Current : N/A

Pending : N/A

GSP Firmware Version : N/A

GPU Virtualization Mode

Virtualization Mode : Host VGPU

Host VGPU Mode : SR-IOV

IBMNPU

Relaxed Ordering Mode : N/A

PCI

Bus : 0x04

Device : 0x00

Domain : 0x0000

Device Id : 0x25B610DE

Bus Id : 00000000:04:00.0

Sub System Id : 0x157E10DE

GPU Link Info

PCIe Generation

Max : 3

Current : 1

Device Current : 1

Device Max : 4

Host Max : N/A

Link Width

Max : 16x

Current : 8x

Bridge Chip

Type : N/A

Firmware : N/A

Replays Since Reset : 0

Replay Number Rollovers : 0

Tx Throughput : 0 KB/s

Rx Throughput : 0 KB/s

Atomic Caps Inbound : N/A

Atomic Caps Outbound : N/A

Fan Speed : 0 %

Performance State : P8

Clocks Throttle Reasons

Idle : Active

Applications Clocks Setting : Not Active

SW Power Cap : Not Active

HW Slowdown : Not Active

HW Thermal Slowdown : Not Active

HW Power Brake Slowdown : Not Active

Sync Boost : Not Active

SW Thermal Slowdown : Not Active

Display Clock Setting : Not Active

FB Memory Usage

Total : 16380 MiB

Reserved : 264 MiB

Used : 7808 MiB

Free : 8306 MiB

BAR1 Memory Usage

Total : 16384 MiB

Used : 1 MiB

Free : 16383 MiB

Compute Mode : Default

Utilization

Gpu : 0 %

Memory : 0 %

Encoder : 0 %

Decoder : 0 %

Encoder Stats

Active Sessions : 0

Average FPS : 0

Average Latency : 0

FBC Stats

Active Sessions : 0

Average FPS : 0

Average Latency : 0

Ecc Mode

Current : Disabled

Pending : Disabled

ECC Errors

Volatile

SRAM Correctable : N/A

SRAM Uncorrectable : N/A

DRAM Correctable : N/A

DRAM Uncorrectable : N/A

Aggregate

SRAM Correctable : N/A

SRAM Uncorrectable : N/A

DRAM Correctable : N/A

DRAM Uncorrectable : N/A

Retired Pages

Single Bit ECC : N/A

Double Bit ECC : N/A

Pending Page Blacklist : N/A

Remapped Rows

Correctable Error : 0

Uncorrectable Error : 0

Pending : No

Remapping Failure Occurred : No

Bank Remap Availability Histogram

Max : 64 bank(s)

High : 0 bank(s)

Partial : 0 bank(s)

Low : 0 bank(s)

None : 0 bank(s)

Temperature

GPU Current Temp : 37 C

GPU T.Limit Temp : N/A

GPU Shutdown Temp : 96 C

GPU Slowdown Temp : 93 C

GPU Max Operating Temp : 86 C

GPU Target Temperature : N/A

Memory Current Temp : N/A

Memory Max Operating Temp : N/A

Power Readings

Power Management : Supported

Power Draw : 8.82 W

Power Limit : 60.00 W

Default Power Limit : 60.00 W

Enforced Power Limit : 60.00 W

Min Power Limit : 35.00 W

Max Power Limit : 60.00 W

Clocks

Graphics : 210 MHz

SM : 210 MHz

Memory : 405 MHz

Video : 795 MHz

Applications Clocks

Graphics : 1770 MHz

Memory : 6251 MHz

Default Applications Clocks

Graphics : 1770 MHz

Memory : 6251 MHz

Deferred Clocks

Memory : N/A

Max Clocks

Graphics : 1770 MHz

SM : 1770 MHz

Memory : 6251 MHz

Video : 1650 MHz

Max Customer Boost Clocks

Graphics : 1770 MHz

Clock Policy

Auto Boost : N/A

Auto Boost Default : N/A

Voltage

Graphics : 650.000 mV

Fabric

State : N/A

Status : N/A

Processes

GPU instance ID : N/A

Compute instance ID : N/A

Process ID : 2108966

Type : C+G

Name : VM-WS02

Used GPU Memory : 3904 MiB

GPU instance ID : N/A

Compute instance ID : N/A

Process ID : 2108989

Type : C+G

Name : VM-WS01

Used GPU Memory : 3904 MiBAs you can see, you can pull quite a bit of information in detail from the vGPU, as well as the VM processes.

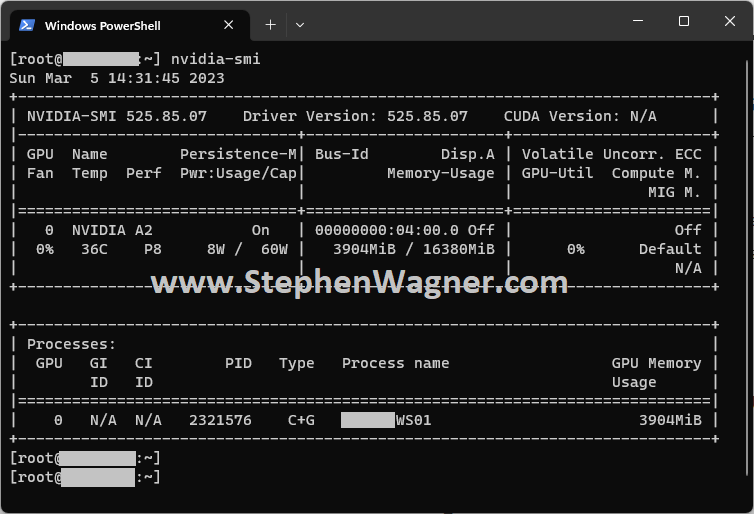

Running “nvidia-smi” on the VM Guest

You can also run “nvidia-smi” inside of the guest VM, which will provide you information on the vGPU instance that is being provided to that specific VM, along with information on the guest VM’s processes that are utilizing the GPU.

This is helpful for providing information on the guest VM’s usage of the vGPU instance, as well as processes that require GPU usage.

Virtual Machine log files

Each Virtual Machine has a “vmware.log” file inside of the VM’s folder on the datastore.

To identify logging events pertaining to NVIDIA vGPU, you can search for the “vmiop” string inside of the vmware.log file.

Example:

cat /vmfs/volumes/DATASTORE/VirtualMachineName/vmware.log | grep -i vmiopThe above will read out any lines inside of the log that have the “vmiop” string inside of them. The “-i” flag instructs grep to ignore case sensitivity.

This logs provide initialization information, licensing information, as well as XID error codes and faults.

ESXi Host log files

Additionally, since the ESXi host is running the vGPU Host Driver (vGPU Manager), it also has logs that pertain and assist with vGPU troubleshooting.

Some commands you can run are:

cat /var/log/vmkernel.log | grep -i vmiopcat /var/log/vmkernel.log | grep -i nvrmcat /var/log/vmkernel.log | grep -i nvidiaThe above commands will pull NVIDIA vGPU related log items from the ESXi log files.

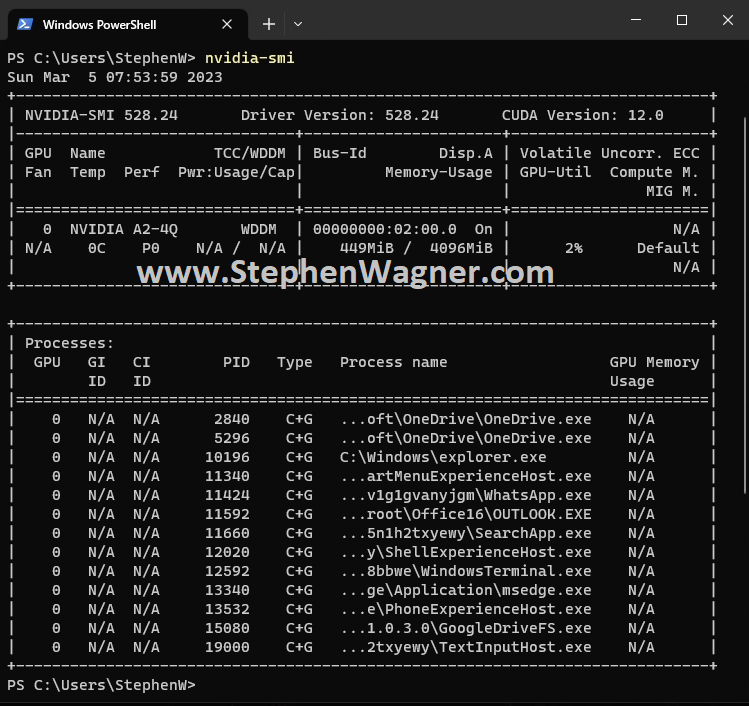

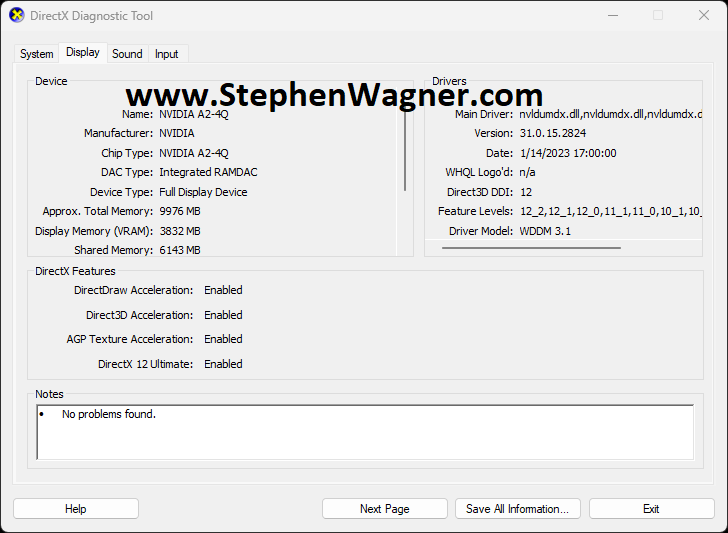

Using “dxdiag” in the guest VM

Microsoft has a tool called “dxdiag” which provides diagnostic infromation for testing and troubleshooting video (and sound) with DirectX.

I find this tool very handy for quickly verifying

As you can see:

- DirectDraw Acceleration: Enabled

- Direct3D Acceleration: Enabled

- AGP Texture Acceleration: Enabled

- DirectX 12 Ultimate: Enabled

The above show that hardware acceleration is fully functioning with DirectX. This is a indicator that things are generally working as expected. If you have a vGPU and one of the first three is showing as disabled, then you have a problem that requires troubleshooting. Additionally, if you do not see your vGPU card, then you have a problem that requires troubleshooting.

Please Note: You may not see “DirectX 12 Ultimate” as this is related to licensing.

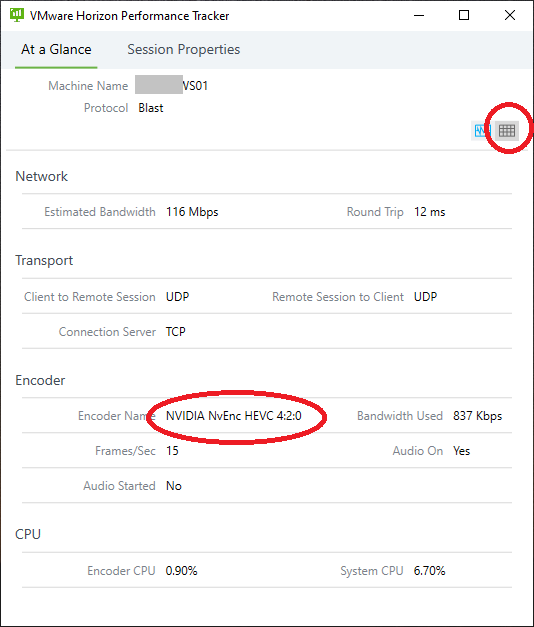

Using the “VMware Horizon Performance Monitor”

The VMware Horizon Performance Monitor, is a great tool that can be installed by the VMware Horizon Agent, that allows you to pull information (stats, connection information, etc) for the session. Please note that this is not installed by default, and must be selected when running the Horizon Agent installer.

When it comes to troubleshooting vGPU, it’s handy to use this too to confirm you’re getting H.264 or H.265/HEVC offload from the vGPU instance, and also get information on how many FPS (Frames Per Second) you’re getting from the session.

Once opening, you’ll change the view above using the specified selector, and you can see what the “Encoder Name” is being used to encode the session.

Examples of GPU Offload “Encoder Name” types:

- NVIDIA NvEnc HEVC 4:2:0 – This is using the vGPU offload using HEVC

- NVIDIA NvEnc HEVC 4:4:4 – This is using the vGPU offload using HEVC high color accuracy

- NVIDIA NvEnc H264 4:2:0 – This is using the vGPU offload using H.264

- NVIDIA NvEnc H264 4:4:4 – This is using the vGPU offload using H.264 high color accuracy

Examples of Software (CPU) Session “Encoder Name” types:

- BlastCodec – New VMware Horizon “Blast Codec”

- h264 4:2:0 – Software CPU encoded h.264

If you’re seeing “NVIDIA NvEnc” in the encoder name, then the encoding is being offloaded to the GPU resulting in optimum performance. If you don’t see it, it’s most likely using the CPU for encoding, which is not optimal if you have a vGPU, and requires further troubleshooting.

NVIDIA vGPU Known Issues

Depending on the version of vGPU that you are running, there can be “known issues”.

When viewing the NVIDIA vGPU Documentation, you can view known issues, and fixes that NVIDIA may provide. Please make sure to reference the documentation specific to the version you’re running and/or the version that fixes the issues you’re experiencing.

vGPU Common Problems

There are a number of common problems that I come across when I’m contacted to assist with vGPU deployments.

Please see below for some of the most common issues I experience, along with their applicable fix/workaround.

XID Error Codes

When viewing your Virtual Machine VM or ESXi log file, and experiencing an XID error or XID fault, you can usually look up the error codes.

Typically, vGPU errors will provide an “XiD Error” code, which can be looked up on NVIDIA’s Xid Messages page here: XID Errors :: GPU Deployment and Management Documentation (nvidia.com).

The table on this page allows you to lookup the XID code, find the cause, and also provides information if the issue is realted to “HW Error” (Hardware Error), “Driver Error”, “User App Error”, “System Memory Corruption”, “Bus Error”, “Thermal Issue”, or “FB Corruption”.

An example:

2023-02-26T23:33:24.396Z Er(02) vthread-2108265 - vmiop_log: (0x0): XID 45 detected on physical_chid:0x60f, guest_chid:0xf

2023-02-26T23:33:36.023Z Er(02) vthread-2108266 - vmiop_log: (0x0): Timeout occurred, reset initiated.

2023-02-26T23:33:36.023Z Er(02) vthread-2108266 - vmiop_log: (0x0): TDR_DUMP:0x52445456 0x00e207e8 0x000001cc 0x00000001

2023-02-26T23:33:36.023Z Er(02) vthread-2108266 - vmiop_log: (0x0): TDR_DUMP:0x00989680 0x00000000 0x000001bb 0x0000000f

2023-02-26T23:33:36.023Z Er(02) vthread-2108266 - vmiop_log: (0x0): TDR_DUMP:0x00000100 0x00000000 0x0000115e 0x00000000

2023-02-26T23:33:36.023Z Er(02) vthread-2108266 - vmiop_log: (0x0): TDR_DUMP:0x00000000 0x00000000 0x00001600 0x00000000

2023-02-26T23:33:36.023Z Er(02) vthread-2108266 - vmiop_log: (0x0): TDR_DUMP:0x00002214 0x00000000 0x00000000 0x00000000

2023-02-26T23:33:36.024Z Er(02) vthread-2108266 - vmiop_log: (0x0): TDR_DUMP:0x64726148 0x00736964 0x00000000 0x00000000

2023-02-26T23:33:36.068Z Er(02) vthread-2108265 - vmiop_log: (0x0): XID 43 detected on physical_chid:0x600, guest_chid:0x0One can see XID code 45, as well as XID code 43, which after looking up on NVIDIA’s document, states:

- XID 43 – GPU stopped processing

- Possible Cause: Driver Error

- Possible Cause: User App Error

- XID 45 – Preemptive cleanup, due to previous errors — Most likely to see when running multiple cuda applications and hitting a DBE

- Possible Cause: Driver Error

In the situation above, one can deduce that the issue is either Driver Error, Application Error, or a combination of both. In this specific case, you could try changing drivers to troubleshoot.

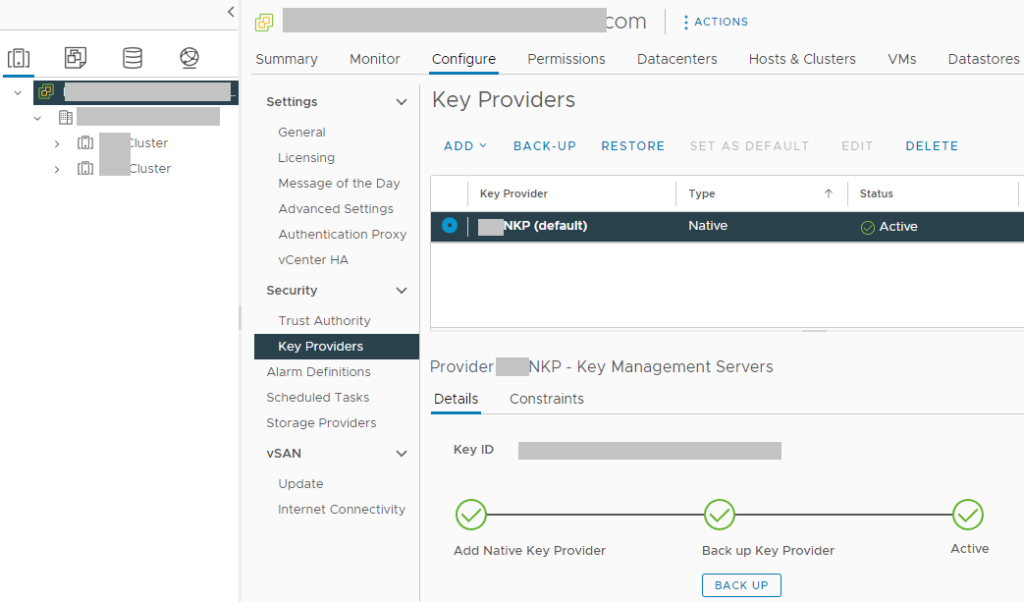

vGPU Licensing

You may experience issues in your vGPU deployment due to licensing issues. Depending on how you have you environment configured, you may be running in an unlicensed mode and not be aware.

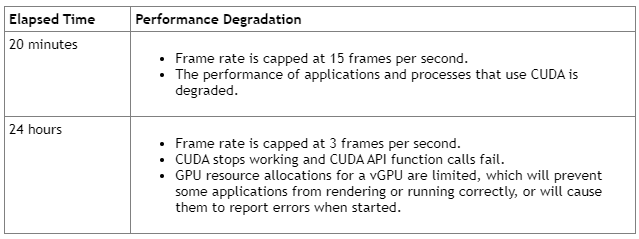

In the event that the vGPU driver cannot obtain a valid license, it will run for 20 minutes with full capabilities. After that the performance and functionality will start to degrade. After 24 hours it will degrade even further.

Some symptoms of issues experienced when unlicensed:

- Users experiencing laggy VDI sessions

- Performance issues

- Frames per Second (FPS) limited to 15 fps or 3 fps

- Applications using OpenCL, CUDA, or other accelerated APIs fail

Additionally, some error messages and event logs may occur:

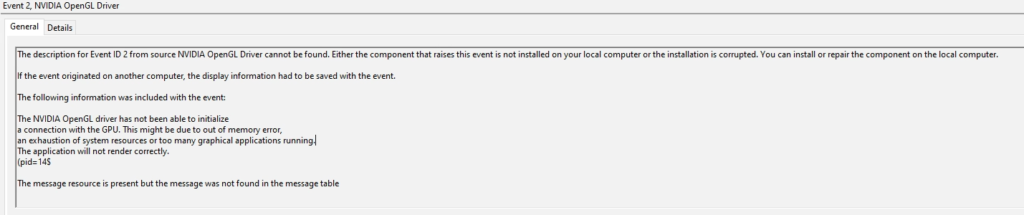

- Event ID 2, “NVIDIA OpenGL Driver” – “The NVIDIA OpenGL driver has not been able to initialize a connection with the GPU.”

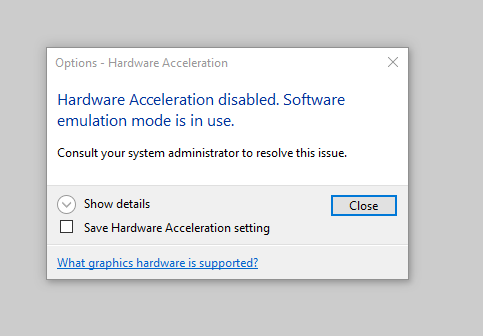

- AutoCAD/Revit – “Hardware Acceleration is disabled. Software emulation mode is in use.”

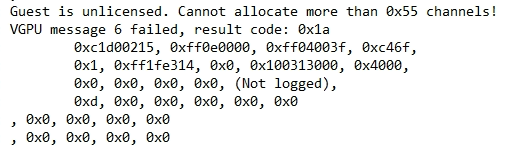

- “Guest is unlicensed”

Please see below for screenshots of said errors:

Additonally, when looking at the Virtual Machine VM vmware.log (inside of the VM’s folder on the ESXi datastore), you may see:

Guest is unlicensed. Cannot allocate more than 0x55 channels!

VGPU message 6 failed, result code: 0x1aIf this occurs, you’ll need to troubleshoot your vGPU licensing and resolve any issues occurring.

vGPU Type (vGPU Profile) mismatch

When using the default (“time-sliced”) vGPU deployment method, only a single vGPU type can be used on virtual machines or containers per physical GPU. Essentially all VMs or containers utilizing the physical GPU must use the same vGPU type.

If the physical GPU card has multiple GPUs (GPU chips), then a different type can be used on each physical GPU chip on the same card. 2 x GPUs on a single card = 2 different vGPU types.

Additionally, if you have multiple cards inside of a single host, the number of vGPU types you can deployed is based off the total number of GPUs across the total number of cards in your host.

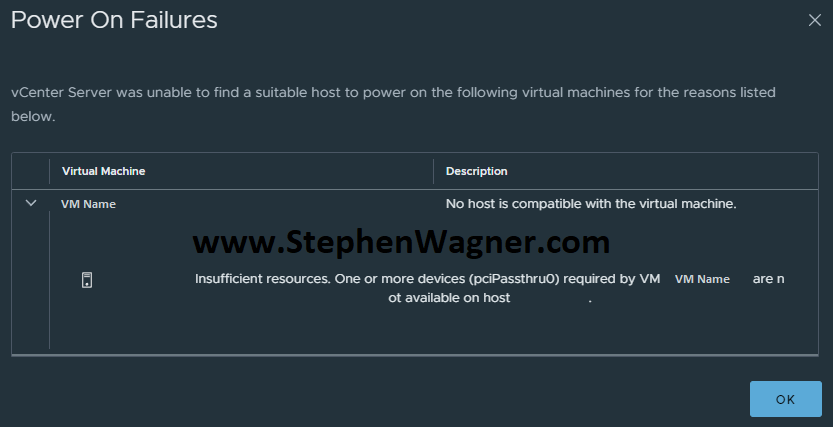

If you configure multiple vGPU types and cannot support it, you will have issues starting VMs, as shown below:

The error reads as follows:

Power On Failures

vCenter Server was unable to find a suitable host to power on the following virtual machines for the reasons listed below.

Insufficient resources. One or more devices (pciPassthru0) required by VM VDIWS01 are not available on host ESXi-Host.Additionally, if provisioning via VMware Horizon, you may see: “NVIDIA GRID vGPU Support has detected a mismatch with the supported vGPUs”

Note: If you are using MIG (Multi Instance GPU), this does not apply as different MIG types can be applied to VMs from the same card/GPU.

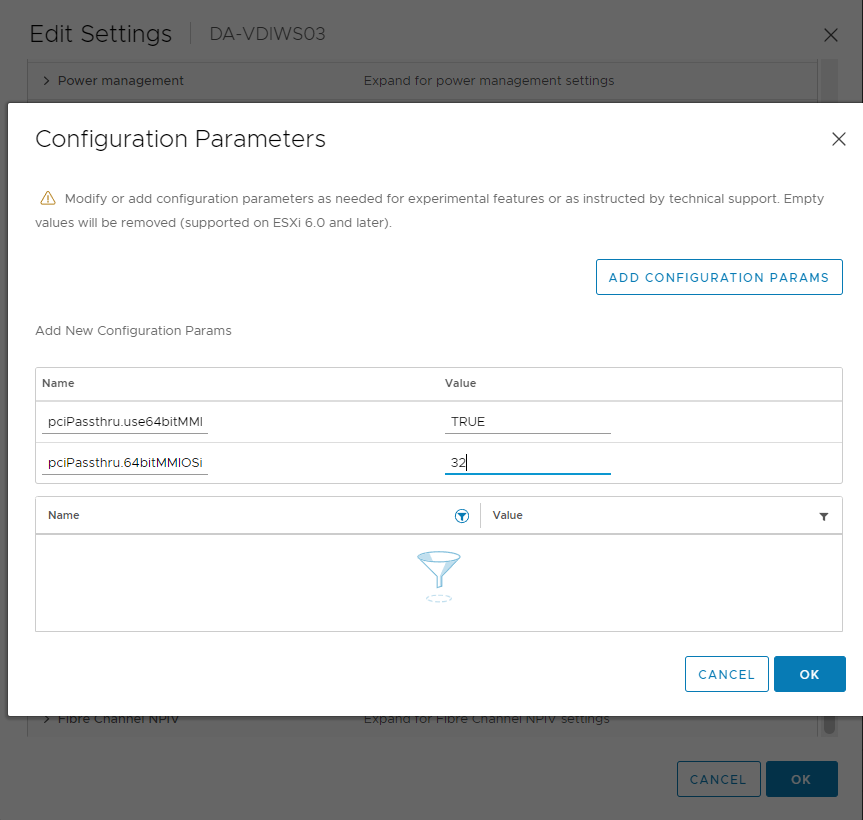

vGPU or Passthrough with 16GB+ of Video RAM Memory

When attaching a vGPU to a VM, or passing through a GPU to a VM, with 16GB or more of Video RAM (Framebuffer memory), you may run in to a situation where the VM will not boot.

This is because the VM cannot map that large of memory space to be accesible for use.

Please see my blog post GPU or vGPU Passthrough with 16GB+ of video memory, for more information as well as the fix.

vGPU VM Freezes during VMware vMotion

Your users may report issues where their VDI guest VM freezes for a period of time during use. This could be caused due to VMware vMotion moving the virtual machine from one VMware ESXi host to another.

Please see my blog post NVIDIA vGPU VM Freezes during VMware vMotion: vGPU STUN Time for more information.

“ERR!” State

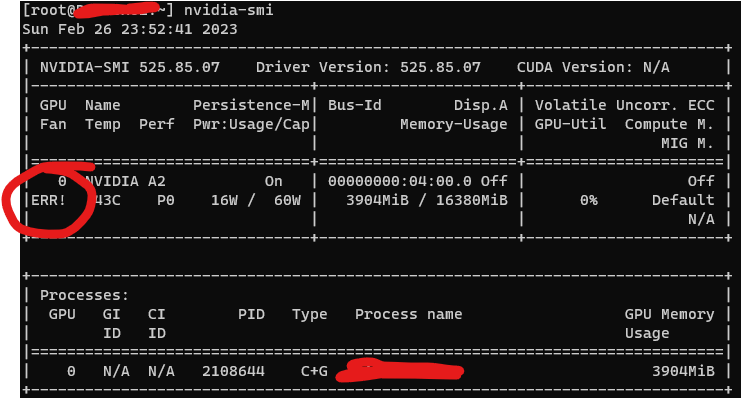

When experiencing issues, you may notice that “nvidia-smi” throws “ERR!” in the view. See the example below:

This is an indicator that you’re in a fault or error state, and would recommend checking the ESXi Host log files, and the Virtual Machine log files for XID codes to identify the problem.

vGPU Driver Mismatch

When vGPU is deployed, drivers are installed on the VMware ESXi host (vGPU Manager Driver), as well as the guest VM virtual machine (guest VM driver).

These two drivers must be compatible with each other. As per NVIDIA’s Documentation, see below for compatibility:

- NVIDIA vGPU Manager with guest VM drivers from the same release

- NVIDIA vGPU Manager with guest VM drivers from different releases within the same major release branch

- NVIDIA vGPU Manager from a later major release branch with guest VM drivers from the previous branch

Additionally, if you’re using the LTS (Long Term Support Branch), the additional compatibility note applies.

- NVIDIA vGPU Manager from a later long-term support branch with guest VM drivers from the previous long-term support branch

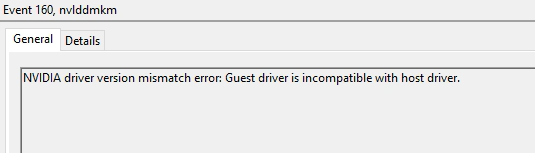

If you have a vGPU driver mismatch, you’ll likely see Event ID 160 from “nvlddmkm” reporting:

NVIDIA driver version mismatch error: Guest driver is incompatible with host drive.To resolve this, you’ll need to change drivers on the ESXi host and/or Guest VM to a supported combination.

Upgrading NVIDIA vGPU

When upgrading NVIDIA vGPU drivers on the host, you may experience issues or errors stating that the NVIDIA vGPU modules or services are loaded and in use, stopping your ability to upgrade.

Normally an upgrade would be preformed by placing the host in maintenance mode and running:

esxcli software vib update -d /vmfs/volumes/DATASTORE/Files/vGPU-15/NVD-VGPU-702_525.85.07-1OEM.702.0.0.17630552_21166599.zipHowever, this fails due to modules that are loaded and in use by the NVIDIA vGPU Manager Services.

Before attempting to upgrade (or uninstall and re-install), place the host in maintenance mode and run the following command:

/etc/init.d/nvdGpuMgmtDaemon stopThis should allow you to proceed with the upgrade and/or re-install.

VMware Horizon Black Screen

If you experiencing a blank or black screen when connecting to a VDI session with an NVIDIA vGPU on VMware Horizon, it may not even be related to the vGPU deployment.

To troubleshoot the VMware Horizon Black Screen, please review my guide on how to troubleshoot a VMware Horizon Blank Screen.

VM High CPU RDY (High CPU Ready)

CPU RDY (CPU Ready) is a state when a VM is ready and waiting to be scheduled on a physical host’s CPU. In more detail, the VM’s vCPUs are ready to be scheduled on the ESXi host’s pCPUs.

In rare cases, I have observed situations where VMs with a vGPU and high CPU RDY times, experience instability. I believe this is due to timing conflicts with the vGPU’s time slicing, and the VM’s CPU waiting to be scheduled.

To check VM CPU RDY, you can use one of the following methods:

- Run “esxtop” from the CLI using the console or SSH

- View the hosts performance stats on vCenter

- Select host, “Monitor”, “Advanced”, “Chart Options”, de-select all, select “Readiness Average %”

When viewing the CPU RDY time in a VDI environment, generally we’d like to see CPU RDY at 3 or lower. Anything higher than 3 may cause latency or user experience issues, or even vGPU issues at higher values.

For your server virtualization environment (non-VDI and no vGPU), CPU Ready times are not as big of a consideration.

vGPU Profiles Missing from VMware Horizon

When using newer GPUs with older versions of VMware Horizon, you may encounter an issue with non-persistent instant clones resulting in a provisioning error.

This is caused by missing vGPU Types or vGPU Profiles, and requires either downloading the latest definitions, or possibly creating your own.

For more information on this issue, please see my post NVIDIA A2 vGPU Profiles Missing from VMware Horizon causing provision failure.

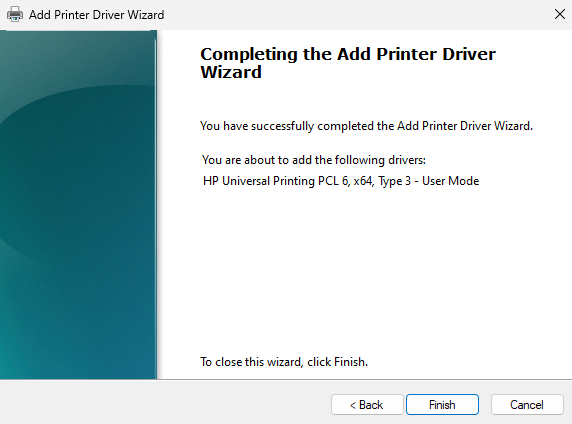

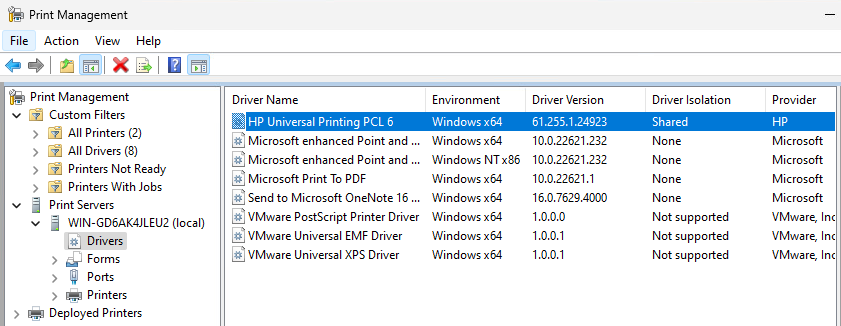

Issues with the VMware Horizon Indirect Display Driver

You may experience vGPU (and GPU) related issues when using certain applications due to the presence of the VMware Horizon Indirect Display Driver in the Virtual Machine. This is due to the application either querying the incorrect Display Adapter (VMware Indirect Display Driver), or due to lack of multi-display adapter support in the application.

The application, when detecting vGPU and/or GPU capabilities, may query the Indirect Display Adapter, instead of the vGPU in the VM. Resulting in failing to detect the vGPU and/or GPU capabilities.

To workaround this issue, uninstall the VMware Horizon Indirect Display Adapter from the Device Manager in the VM. Please note that if you simply disable it, the issue will still occur as the device must be uninstalled from the Device Manager.

Additionally, under normal circumstances you do not want to modify, change, or remove this display adapter. However this is only a workaround if you are experiencing this issue. Subsequent updates of the VMware Horizon agent will re-install this adapter.

For more information on this issue, please see GPU issues with the VMware Horizon Indirect Display Driver.

Additional (External) vGPU Links and Resources

Please see these these additional external links and resources which may assist.

- NVIDIA Virtual GPU (vGPU) Documentation – NVIDIA vGPU Documentation

- NVIDIA Virtual GPU Software Supported GPUs – List of GPUs supported by NVIDIA vGPU

- Virtual GPU Software User Guide – Troubleshooting – Latest Long-Term Support Branch Version 16