After you Deploy Remote Desktop Services (RDS) for employee remote access and Install Office 365 in a Remote Desktop Services Environment, your next step is to configure Office 365 by deploying Group Policy Objects (GPOs).

By deploying Group Policy Objects to configure Office 365, you’ll be able to set it up for first-time use, activate the product, roll out a pre-defined configuration, and even automatically configure Outlook mail profiles.

Following these steps will help you provide a zero-configuration experience for your end users so that everything is up and running for them when they connect the first time. I will also provide a number of GPO settings which will enhance the user experience.

What’s Required

To configure Microsoft Office 365 on a Remote Desktop Services Server, you’ll need:

- A Remote Desktop Services Server (Configured and Running)

- Microsoft 365 Apps for Enterprise (formerly Office 365 ProPlus)

- Office 365 Installed with SCA (Shared Computer Activation, as per “Install Office 365 in a Remote Desktop Services Environment”)

- Microsoft 365 Apps for Enterprise ADMX GPO Administrative Templates (available as a download from Microsoft)

Shared Computer Activation

In order to properly configure and activate Office 365 in a Remote Desktop Services Environment, you will need to install Office 365 with Shared Computer Activation. My guide “Install Office 365 in a Remote Desktop Services Environment” walks through that installation.

Configure Office 365

Once you’re ready to go, you can begin configuration.

To make things as simple as possible and centrally manage every aspect of your O365 deployment, we want to configure everything via GPO (Group Policy Objects). This will allow us to configure everything including “first run configuration” and roll out a standardized configuration to users.

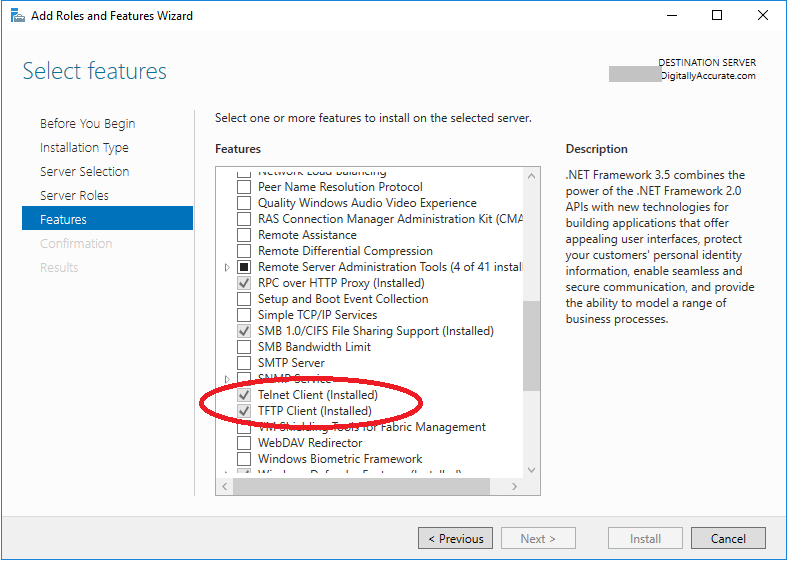

In order to modify GPOs, you’ll need to either launch the Group Policy Management MMC from a domain controller, or Install RSAT (Remote Server Administration Tools) on Windows 10 to use the MMC from your local computer or workstation.

You’ll probably want to create an OU (Organizational Unit) inside of Active Directory for your RDS farm, and then create a new Group Policy Object and apply it to that OU. All of the settings below go into that new GPO.

We’ll be configuring the following “Computer Configuration” items:

- Microsoft Office – Licensing Configuration

- Microsoft Office – Update Configuration

- Microsoft OneDrive – Known Folders, Use OneDrive Files On-Demand

- Windows – Group Policy Loopback Processing Mode

We’ll also be configuring the following “User Configuration” items:

- Microsoft Office – First Run Configuration

- Microsoft Office – Block Personal Microsoft Account Sign-in

- Microsoft Office – Subscription/Licensing Activation

- Microsoft Outlook – Disable E-Mail Account Configuration

- Microsoft Outlook – Exchange account profile configuration

- Microsoft Outlook – Disable Cached Exchange Mode

Let’s start!

Microsoft Office – Licensing Configuration

Since we’re using SCA (Shared Computer Activation) for licensing, we need to specify where to store the users’ activation tokens. You may have configured a special location for these, or may just store them with your user profiles.

First we need to enable Shared Computer Activation. Navigate to:

Computer Configuration -> Policies -> Administrative Templates -> Microsoft Office 2016 (Machine) -> Licensing Settings

And set “Use shared computer activation” to Enabled.

Next we’ll set “Specify the location to save the licensing token used by shared computer activation” to the location where you’d like to store the activation tokens. As an example, to store to the User Profile share, I’d use the following:

\\PROFILE-SERVER\UserProfiles$\%USERNAME%

Microsoft Office – Update Configuration

Because this is a Remote Desktop Services server, we want automatic updating disabled since IT will manage the updates.

We’ll disable updates by navigating to:

Computer Configuration -> Policies -> Administrative Templates -> Microsoft Office 2016 (Machine) -> Updates

And set “Enable Automatic Updates” to Disabled.

We’ll also set “Hide option to enable or disable updates” to Enabled to hide it from the users.

Microsoft OneDrive – Known Folders, Use OneDrive Files On-Demand

There’s some basic OneDrive configuration we’ll want in place: we don’t want our users’ profile folders being copied or redirected to OneDrive, and we want OneDrive to use Files On-Demand so that users’ OneDrive contents aren’t cached/copied to the RDS Server.

We’ll navigate over to:

Computer Configuration -> Policies -> Administrative Templates -> OneDrive

And set the following GPO settings:

- “Prevent users from moving their Windows known folders to OneDrive” to Enabled

- “Prevent users from redirecting their Windows known folders to their PC” to Enabled

- “Prompt users to move Windows known folders to OneDrive” to Disabled

- “Use OneDrive Files On-Demand” to Enabled

We’ve now configured OneDrive for RDS Users.

Windows – Group Policy Loopback Processing Mode

Since this GPO is linked to the OU containing the RDS Server rather than to the users, its “User Configuration” settings won’t normally apply to the users who log on to it. To fix that, we’ll need to activate Loopback Processing of Group Policy. This causes the “User Configuration” settings from the GPOs that apply to the computer to be applied during User Logon, and in Merge mode they have higher precedence than the users’ existing User Settings wherever the two conflict.

We’ll navigate to the following:

Computer Configuration -> Policies -> Administrative Templates -> System -> Group Policy

And set “Configure user Group Policy loopback processing mode” to Enabled, and “Mode” to Merge.
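To make the difference between the two loopback modes concrete, here is a minimal Python sketch that models which “User Configuration” settings end up applying to a user on the RDS Server. It is only a simplified model of the precedence rules, not how Windows implements them, and the setting names and values in the example are hypothetical.

```python
from typing import Dict

Settings = Dict[str, str]


def effective_user_settings(user_gpos: Settings,
                            computer_ou_gpos: Settings,
                            mode: str) -> Settings:
    """Model the User Configuration result under loopback processing.

    user_gpos:        settings from GPOs that normally apply to the user.
    computer_ou_gpos: User Configuration settings from GPOs linked to the
                      OU that contains the RDS server.
    mode:             "Merge" or "Replace".
    """
    if mode == "Replace":
        # The user's own GPO list is ignored entirely.
        return dict(computer_ou_gpos)
    if mode == "Merge":
        # The user's GPOs are processed first, then the computer-side
        # GPOs, so the computer-side value wins on any conflict.
        merged = dict(user_gpos)
        merged.update(computer_ou_gpos)
        return merged
    raise ValueError(f"unknown loopback mode: {mode!r}")


# Hypothetical example settings, for illustration only.
user = {"Desktop wallpaper": "corp.jpg", "Cached Exchange Mode": "Enabled"}
rds = {"Cached Exchange Mode": "Disabled", "Disable First Run Movie": "Enabled"}

print(effective_user_settings(user, rds, "Merge"))
print(effective_user_settings(user, rds, "Replace"))
```

With Merge, the user keeps their unrelated settings (the wallpaper here) while the RDS-specific values override anything that conflicts, which is why Merge is the mode chosen above.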

Microsoft Office – First Run Configuration

As most of you know, when running Microsoft Office 365 for the first time, there are numerous windows, movies, and wizards for the first time run. We want to disable all of this so that Office appears pre-configured to the user, allowing them to just log on and start working.

We’ll head over to:

User Configuration -> Policies -> Administrative Templates -> Microsoft Office 2016 -> First Run

And set the following items:

- “Disable First Run Movie” to Enabled

- “Disable Office First Run on application boot” to Enabled

Microsoft Office – Block Personal Microsoft Account Sign-in

Since we’re paying for Microsoft 365 and want users to sign in with their Microsoft 365 account and not a personal one, we’ll stop them from being able to add personal Microsoft Accounts to Office 365.

Head over to:

User Configuration -> Policies -> Administrative Templates -> Microsoft Office 2016 -> Miscellaneous

And set “Block signing into Office” to Enabled, and then set the additional option to “Organization ID only”.

Microsoft Office – Subscription/Licensing Activation

Earlier in the post we configured Office 365 to use SCA. Now we’ll need to configure how it’s activated. We don’t want the activation window being shown to the user, nor do we want them to have to complete it, so we’ll configure Office 365 to automatically activate using SSO (Single Sign On).

Navigate to:

User Configuration -> Policies -> Administrative Templates -> Microsoft Office 2016 -> Subscription Activation

And then set “Automatically activate Office with federated organization credentials” to Enabled.

Microsoft Outlook – Disable E-Mail Account Configuration

We’ll be configuring the e-mail profiles for the users so that no initial configuration will be needed. Again, just another step to let them log in and get to work right away.

Navigate to:

User Configuration -> Policies -> Administrative Templates -> Microsoft Outlook 2016 -> Account Settings -> E-mail

And we’ll set the following:

- “Prevent Office 365 E-mail accounts from being configured within a simplified Interface” to Disabled

- “Prevent Outlook from interacting with the account settings detection service” to Enabled

Microsoft Outlook – Exchange account profile configuration

We’ll want your users’ Outlook profiles to be auto-configured for their Exchange accounts, so we’ll need to configure the following setting.

Navigate to:

User Configuration -> Policies -> Administrative Templates -> Microsoft Outlook 2016 -> Account Settings -> Exchange

And set “Automatically configure profile based on Active Directory Primary SMTP address” to Enabled.

After setting this, Outlook will automatically add the user’s Exchange Account when they open it and they’ll be ready to go! Note that there is an additional setting with a similar name appended with “One time Only”. With the One time Only variant, the configuration is applied only once and will not be re-applied on subsequent Outlook runs.
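If a user’s profile ends up with an unexpected address, it helps to check which address Active Directory considers primary. The Python sketch below assumes the usual Exchange convention for the `proxyAddresses` attribute, where the primary address carries an uppercase `SMTP:` prefix and aliases use lowercase `smtp:`. It only illustrates that convention and makes no claim about Outlook’s internal lookup; the sample addresses are hypothetical.

```python
from typing import Iterable, Optional


def primary_smtp(proxy_addresses: Iterable[str]) -> Optional[str]:
    """Return the primary SMTP address from proxyAddresses-style values.

    The primary entry is the one prefixed with uppercase "SMTP:".
    Lowercase "smtp:" entries are secondary aliases and are skipped.
    """
    prefix = "SMTP:"
    for entry in proxy_addresses:
        if entry.startswith(prefix):
            return entry[len(prefix):]
    return None


# Hypothetical attribute values for a single user.
addresses = [
    "smtp:jdoe@example.com",
    "SMTP:jane.doe@example.com",
    "sip:jane.doe@example.com",
]

print(primary_smtp(addresses))
```

A user with no uppercase `SMTP:` entry returns `None` here, which is worth checking in AD before troubleshooting Outlook itself.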

Microsoft Outlook – Disable Cached Exchange Mode

Since we’ll have numerous users using the RDS server or servers, we don’t want users’ cached Outlook mailboxes (OST files) stored on the RDS server. We can stop this by disabling Exchange caching.

Navigate to:

User Configuration -> Policies -> Administrative Templates -> Microsoft Outlook 2016 -> Account Settings -> Exchange -> Cached Exchange Mode

And we’ll set the following two settings:

- “Cached Exchange Mode (File | Cached Exchange Mode)” to Disabled

- “Use Cached Exchange Mode for new and existing Outlook profiles” to Disabled
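With this many settings in one GPO, a quick checklist comparison can catch anything that was missed. The Python sketch below lists a selection of the policy names and target states from this post and reports any that differ from what you observe. The `observed` dictionary is a hypothetical stand-in for values you would transcribe yourself (for example from a Group Policy Results report); the script does not read anything from Windows.

```python
from typing import Dict, Tuple

# Policy display names and target states, copied from this guide.
EXPECTED: Dict[str, str] = {
    "Use shared computer activation": "Enabled",
    "Enable Automatic Updates": "Disabled",
    "Hide option to enable or disable updates": "Enabled",
    "Use OneDrive Files On-Demand": "Enabled",
    "Configure user Group Policy loopback processing mode": "Enabled",
    "Disable First Run Movie": "Enabled",
    "Disable Office First Run on application boot": "Enabled",
    "Block signing into Office": "Enabled",
    "Automatically activate Office with federated organization credentials": "Enabled",
    "Automatically configure profile based on Active Directory Primary SMTP address": "Enabled",
    "Use Cached Exchange Mode for new and existing Outlook profiles": "Disabled",
}


def find_drift(actual: Dict[str, str]) -> Dict[str, Tuple[str, str]]:
    """Return {policy: (expected, found)} for every mismatch.

    A policy missing from `actual` is treated as "Not Configured".
    """
    drift = {}
    for policy, wanted in EXPECTED.items():
        found = actual.get(policy, "Not Configured")
        if found != wanted:
            drift[policy] = (wanted, found)
    return drift


# Hypothetical observation: one wrong value and one missing policy.
observed = dict(EXPECTED)
observed["Enable Automatic Updates"] = "Enabled"
del observed["Disable First Run Movie"]

for policy, (wanted, found) in find_drift(observed).items():
    print(f"{policy}: expected {wanted}, found {found}")
```

Keeping the expected states in one dictionary also gives you a compact record of the standard configuration for the RDS farm.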