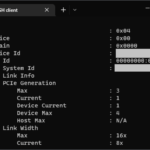

If you’re like me, you want to make sure that your environment is as optimized as possible. I recently noticed that my NVIDIA A2 vGPU was reporting the vGPU PCIe Link Speed and Generation that the card was using was below what it was supposed to be running at on my VMware vSphere ESXi host.

I needed to find out if this was being reported incorrectly, if there was an issue, or something else effecting this. In my case, the specific GPU I was using is supposed to support PCIe Gen4, and has a physical connector supporting 4x, my host has PCIe Gen3 slots, so I should at least be getting Gen3 speeds.

The Problem

When running the command “nvidia-smi -q”, the GPU was reporting that it was only running at PCIe Gen 1 speeds, as shown below:

GPU Link Info

PCIe Generation

Max : 3

Current : 1

Device Current : 1

Device Max : 4

Host Max : N/A

Link Width

Max : 16x

Current : 8xSomething else worth noting, is that the card states that it supports a 16x interface, when it actually only physical has a 8x connector. I believe they use this chip on another board that has multiple GPUs on a single board that actually supports 16x.

You could say I was quite puzzled. Why would the card only be running at PCIe Generation 1 speeds? I thought it could be any of the scenarios below:

- Dynamic mode that alternates when required (possibly for power savings)

- Hardware issue

- Hardware Limitation (I’m using this in an older server)

- Software issues

- Configuration issue

Unfortunately, when searching the internet, I couldn’t find many references to this metric, however I did find references from other user’s copy/pastes of “nvidia-smi -q” which had the same values (running PCIe Gen1), even with beefier and more high-end cards.

The Solution

After some more searching, I finally came across an NVIDIA technical document titled “Useful nvidia-smi Queries” that states that the current PCIe Generation Link speed “may be reduced when the GPU is not in use”. This confirms that it’s dynamic and adjusts when needed.

Finally, I decided to give some games a shot in a couple of the VMs, and to my surprise when running a game, the “Device Current” and “Current” PCIe Generation changed to PCIe Gen3 (note that my server isn’t capable of PCIe Gen4, which is the cards maximum), as shown below:

GPU Link Info

PCIe Generation

Max : 3

Current : 3

Device Current : 3

Device Max : 4

Host Max : N/A

Link Width

Max : 16x

Current : 8xIn conclusion, if you notice this in your environment, do not be alarmed as this is completely normal and expected behavior.