I’ve had the HPE MSA 2040 set up, configured, and running for about a week now. Thankfully, this weekend I had some time to run some benchmarks. Let’s take a look at the HPE MSA 2040 benchmarks on read, write, and IOPS.

First some info on the setup:

-2 X HPE Proliant DL360p Gen8 Servers (2 X 10 Core processors each, 128GB RAM each)

-HPE MSA 2040 Dual Controller – Configured for iSCSI

-HPE MSA 2040 is equipped with 24 X 900GB SAS Dual Port Enterprise Drives

-Each host is directly attached via 2 X 10Gb DAC cables (Each server has 1 DAC cable going to controller A, and Each server has 1 DAC cable going to controller B)

-2 vDisks are configured, each owned by a separate controller

-Disks 1-12 configured as RAID 5 owned by Controller A (512K Chunk Size Set)

-Disks 13-24 configured as RAID 5 owned by Controller B (512K Chunk Size Set)

-While round robin is configured, only one optimized path exists (only one path is being used) for each host to the datastore I tested

-Utilized “VMWare I/O Analyzer” (https://labs.vmware.com/flings/io-analyzer) which uses IOMeter for testing

-Running 2 “VMWare I/O Analyzer” VMs as worker processes. Both workers are testing at the same time, testing the same datastore.

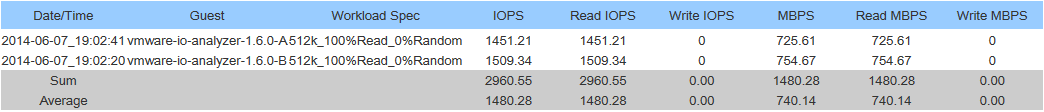

Sequential Read Speed:

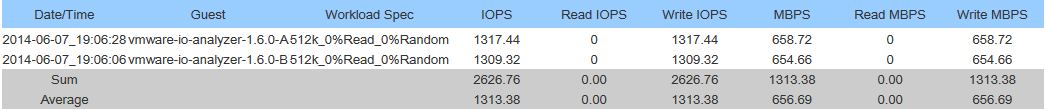

Sequential Write Speed:

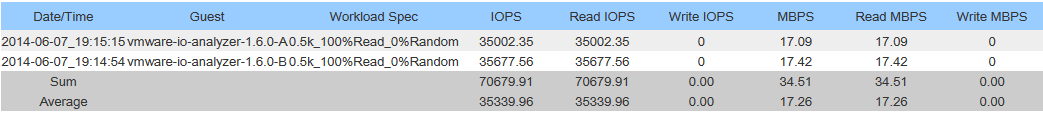

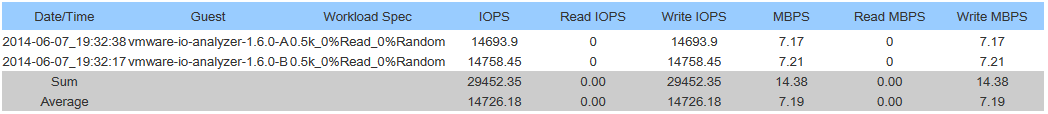

See below for IOPS (Max Throughput) testing:

Please note: The MaxIOPS and MaxWriteIOPS workloads were used. These workloads don’t have any randomness, so I’m assuming the cache module answered all the I/O requests, however I could be wrong. Tests were run for 120 seconds. What this means is that this is more of a test of what the controller is capable of handling itself over a single 10Gb link from the controller to the host.

IOPS Read Testing:

IOPS Write Testing:

PLEASE NOTE:

-These benchmarks were done by 2 separate worker processes (1 running on each ESXi host) accessing the same datastore.

-I was running a VMWare vDP replication in the background (My bad, I know…).

-Sum is combined throughput of both hosts, Average is per host throughput.

Conclusion:

Holy crap this is fast! I’m betting the speed limit I’m hitting is the 10Gb interface. I need to get some more paths set up to the SAN!
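As a rough sanity check on the 10Gb guess, here is a minimal sketch of the raw line-rate ceiling for the paths in use. It assumes decimal units and ignores Ethernet, TCP/IP, and iSCSI overhead, so real-world throughput will land somewhat below these figures. The function name is just for illustration.

```python
def link_ceiling_mb_per_s(link_gbps: float, links: int = 1) -> float:
    """Raw line-rate ceiling in MB/s (decimal), ignoring all protocol overhead."""
    bits_per_second = link_gbps * 1_000_000_000 * links
    return bits_per_second / 8 / 1_000_000


# One active 10Gb path per host, two hosts hitting the same datastore.
per_host = link_ceiling_mb_per_s(10)
combined = link_ceiling_mb_per_s(10, links=2)

print(f"One 10Gb path: {per_host:.0f} MB/s")
print(f"Two hosts, one path each: {combined:.0f} MB/s")
```

If the per-host numbers in the screenshots sit close to the single-path figure, the link is the likely bottleneck rather than the disks or controllers.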

Cheers

Hey Stephen, fun to read. I’m waiting for my MSA 2040 with 24x900GB 10k SAS to arrive. I chose the 12Gb SAS controllers, and I can’t wait to run some tests on that one. I will let you know 🙂

Greetings, Lars

Hi Stephen and Lars,

just did the same benchmarks !

MSA2040 12Gb SAS – 24x 600GB 10k

Datastore 1:

RAID5 – 7 spindles 600GB 10k

Combined speeds:

Read 100% – 1733

Write 100% – 1856

Max IOPS – 39633

Max WR IOPS – 33517

Datastore 2:

RAID 10 – 16 spindles 600GB 10k

Combined speeds:

Read 100% – 5228

Write 100% – 2824

Max IOPS – 92399

Max WR IOPS – 33338

cheers, Mark

Glad to hear Mark!

I’m still absolutely loving the HP MSA 2040. Great investment on my side of things!

Cheers,

Stephen

Hi Stephen,

I’m curious, what cable you are using to direct attach the SAN? I am using 2 X X242 DAC cables (J9285B). I have set the controllers to iSCSI.

I have an MSA 2040 (C8R15A) and am using an NC550SFP adapter to connect to the two controllers i.e. a dual port card connected to the first port on each controller. My controllers complain that I am using an unsupported SFP, Error 464.

Any more info you can provide about your hardware config would be appreciated, I’m not getting any definitive response from HP.

Thanks.

J

Hi Julian,

I’m using 4 x 487655-B21 cables. I’m noticing that both of our cables are listed inside of the HP Quickspecs document for the MSA 2040.

I know that it’s supposed to be a requirement to purchase a 4-pack SFP+ transceiver. In my case, I chose the 4 X 1Gb RJ-45 modules (even though I’m not using them, nor have anything plugged in to them). Did you also do this?

Also, do you have the firmware on your unit fully up to date?

Just for the record, I’m using 2 X 700760-B21 (10Gb NICs) for both of my servers.

I’m wondering if it’s the number of cables you’re using. Have you thought about using 4 cables? Maybe it’s a weird requirement to have a minimum of 4. For the sake of troubleshooting, can you plug both cables in to controller A to see if it changes the behavior?

Please refer to my other blog post at: http://www.stephenwagner.com/?p=791 for more information on my setup.

Stephen

Stephen,

I didn’t purchase a 4-pack, but I didn’t buy a bundle either as none were in stock. My unit was put together, bit by bit, so maybe the 4 transceiver minimum was not mandatory.

I only have the first port in each unit filled with the DAC cables. HP asked me to do the same thing you are and I will do so tomorrow. I have two more cables and may try to plug one in to see if it makes a difference.

Will let you know.

J

I’m not sure whether the act of plugging in another DAC cable into controller 1 port 2 eliminated the warning message or rebooting both controllers did it, but the warning is gone.

J

Hi Julian,

Glad to hear you resolved the issue. Let us know if you end up finding out if it was the second cable or not. It’s good to know that the 4-pack of transceivers isn’t needed (as I didn’t test this).

Is there any chance that there was a pending firmware update on the unit?

Stephen

curious….can anyone post numbers for more randomized workloads?

Hi Stephen,

Thanks for the results, I’m looking for some comparison with my test VSAN setup I’m going through at the moment.

Did you use the standard disk sizes that come with VMware IO Analyzer? By default it writes to a 100MB VMDK; when I increase this to 100GB, my IOPS figures drop like a lead balloon.

Thanks in advance, I’ll post my stats here.

Hi,

Just ran the same test on my MSA2040 SAS (with the new GL200 firmware & performance tiering option).

1 Virtual pool / controller.

Pool A :

RAID 1 2x SSD 400GB

RAID 5 10x 600GB 10k

Pool B

RAID 1 2 x SSD 400GB

RAID 5 9 x 600GB 10k

2 separate workers. One on each esx, each one accessing one of the 2 datastores

ESX-to-MSA connection is SAS 6Gb (but limited to 4GB due to the PCI 2.0 server ports).

==>

Max IOPS

0.5k_100%Read_0%Random 73929.6

0.5k_100%Read_0%Random 70168.72

SUM 144098.32

Max Write IOPS

Workload Spec Write IOPS

0.5k_0%Read_0%Random 29555.92

0.5k_0%Read_0%Random 29199.32

SUM 58755.24

Max read speed :

Workload Spec Read MBPS

512k_100%Read_0%Random 1300.56

512k_100%Read_0%Random 1731.45

SUM 3032.01

Max write speed :

Workload Spec Write MBPS

512k_0%Read_0%Random 2135.21

512k_0%Read_0%Random 1983.35

SUM 4118.56

I ran the test with 100MB IOMeter disks, then 16GB IOMeter disks, with the same results.

All I/Os were served by the SSDs.

I’m pretty sure the SSDs are able to provide more IOPS.

With SSDs, the MSA2040 bottleneck is clearly the controller CPUs (single-core Intel Gladden Celeron 725C), which were at 98-99% during the tests.

That’s an amazing price/performance ratio.

I’m disappointed by the stats part. We only get 15-minute samples, and no recording of controller CPU usage.

HI Stephen

I have stumbled across this page a few times while trying to get my cluster performance up and found it a very good read. However, your MTU settings on the MSA are wrong. The MSA only supports packets up to 8900.

PG 36

http://h20195.www2.hp.com/v2/GetPDF.aspx/4AA4-6892ENW.pdf

Hi Stephen,

I just checked that document and there was no page 36… Also, I searched jumbo frames and that document mentioned an MTU of 9000 being the maximum for Jumbo Frames.

Did you provide the right document?

I’ll check it out, but in my initial configuration of the unit, the documents I referenced mentioned MTUs of 9000, which the unit accepted.

Stephen

Yeah I just clicked on the link and it took me to the right doco, the second last page says

Jumbo frames

A normal Ethernet frame can contain 1500 bytes whereas a jumbo frame can contain a maximum of 9000 bytes for larger data transfers. The MSA reserves some of this frame size; the current maximum frame size is 1400 for a normal frame and 8900 for a jumbo frame. This frame maximum can change without notification. If you are using jumbo frames, make sure to enable jumbo frames on all network components in the data path.

Hi Stephen,

Yes, that is correct. When setting the jumbo max packet size on devices, the value set by the user is usually the max size (which includes overhead).

The max setting on the MSA is 9000 (even though only 8900 may be data, the rest is overhead). All devices connected are set to a max of 9000.

Sorry Stephen,

Just to clarify, the usual overhead for an MTU packet is 28 bytes, so if I set my MTU to 9000 it will send a packet of 8972 bytes, which is still over the 8900 MTU size set on the MSA. So wouldn’t it be prudent to set the max size on the host to 8900?

Hi Stephen,

I think the HP document is also including iSCSI overhead in the mix.

Whenever setting MTUs on Storage devices and servers, the usual setting itself represents the entire packet MTU, they never expect users to subtract overheads as well (unless the setting advises to do so). This is strictly a network MTU setting, not anything specific on the software layer beneath networking.

There are numerous other HP documents out there outlining VMware best practices configuration for the MSA 2040, along with some other documents that specify a configuration maximum of 9,000 for this value.

When the term “Jumbo Frame” is used, it typically implies a value of 9,000.

I can see what you’re getting at, but I can confidently say that the max value for that setting is 9,000. And whatever setting you do choose, you need to mirror on all hosts, devices, and network devices between your MSA and your hosts.

If you feel confident in using a lower value, just make sure you set it on your hosts as well.

If you want to, try setting a value higher than 9,000. I think it’ll either not accept the value, or bring it down to an acceptable value.

Hi Stephen,

Good day to you!

Would like to ask you something on MSA 2040 SAS;

I have 3 x DL360 Gen9 with H241 running at 12G (Direct connect to MSA 2040 SAS)

Another is DL360 Gen8 with H221 running at 6G (Direct connect to MSA 2040 SAS)

Although individual ports on adapters will be talking to MSA SAS and speed will depend on individual supported speed; 12G for H241 and 6G for H221;

My question is, with a DL360 Gen8 with 6G link, overall, will it affect the performance of the entire setup?

I believe it will not as the link are independent links..

Am I correct?

Thank you in advance Stephen!

Regards,

Mufi

Wil

Hi Mufi,

I can’t say for sure (since I haven’t used the SAS version of the MSA 2040). I scanned through the documentation and couldn’t find anything, however I would assume that they should operate at different speeds, and that the slower 6G link should NOT affect the 12G link…

Cheers,

Stephen

Hi Stephen,

Thank you for your reply, It is much appreciated;

I emailed one of the Storage guys in HPE and i got this following reply;

“The MSA 2040 SAS array is a 12Gb capable controller so to the 12G H241 direct connected to the array the connection will be @ 12G. For the 6G H221 the connection will be @ 6G.

This does not necessarily mean that the H241 will be faster than the H221. Only that the speed of transactions between the array and the HBA will be faster.

Each mini-SAS HD host port on the MSA 2040 SAS controller can/will independently negotiate speed (12G/6G) so connecting an H241 on port 3 and an H221 on port 4 will not cause a change in connection speed of the H241. Again connection speed and performance are not 100% related. Connection speed can give you the ability of greater performance but there are dozens of different parameters which will also affect performance.”

Just sharing some knowledge 🙂

Thank you once again Stephen,

Regards,

Mufi

Hi Mufi,

Thanks for following up and posting that update. It’s good you posted it as I’m sure that numerous other people have or will have the exact same question!

Cheers,

Stephen

The MSA 1040 and 2040 have a jumbo MTU of 8900.

If you have any doubt try:

vmkping -d -s 8972

You will only receive a ping response with -s 8872, which corresponds to an MTU of 8900.

Regards

Nicolas

Thanks Nicolas,

I can confirm that. Thank you very much!

I just tested using that command and confirmed that the MTU on the MSA2040 is NOT 9000. I’m very shocked, considering this should be better and more clearly documented in the HPE documentation (certain documents conflict with other documents).

I’ve updated my configuration and am already experiencing a massive performance increase (no doubt from clearing up the fragmented packets).
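The relationship between the `-s` payload sizes and the MTU values discussed above can be sketched as follows. This assumes a plain IPv4 header with no options (20 bytes) plus the 8-byte ICMP header, which is where the "28" mentioned earlier in the thread comes from. The function name is only illustrative.

```python
IPV4_HEADER_BYTES = 20  # assumes no IP options
ICMP_HEADER_BYTES = 8


def max_ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented packet."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


for mtu in (1500, 8900, 9000):
    print(f"MTU {mtu}: largest unfragmented ping payload is {max_ping_payload(mtu)} bytes")
```

So a don't-fragment ping with a payload of 8972 only succeeds if every hop accepts an MTU of 9000, while 8872 fits within an MTU of 8900.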

Hi, do you think 6 SSD’s in a RAID 5 is too much IOPS for one 12G controller to handle (I’m using SAS connections)? Would it be better to use two RAID 5’s with 3 SSD’s … one assigned to each controller? Thank you.

Hi Keith,

I’d need to know more specifics as to the workloads, data, and types of services/data being hosted on these arrays to offer any type of recommendation.

I can’t recall the max the controllers can do, but I think they should be able to keep up with both situations.

Ultimately, by using two controllers, you can split the workloads across them, however you’ll lose some storage on the parity for the second array (-1 disk), but you will gain some more redundancy by having 2 arrays vs a single one, so this would be another consideration.
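To put a number on the capacity trade-off, here is a small sketch comparing the two layouts from the question. It assumes standard RAID 5 (one disk's worth of parity per array) and identical disks. The helper name is made up for this example.

```python
def raid5_usable_disks(disks_per_array: int, arrays: int = 1) -> int:
    """Data-bearing disk equivalents across one or more RAID 5 arrays."""
    if disks_per_array < 3:
        raise ValueError("RAID 5 needs at least 3 disks per array")
    # Each RAID 5 array gives up one disk's worth of capacity to parity.
    return (disks_per_array - 1) * arrays


single = raid5_usable_disks(6)           # one 6-disk array on one controller
split = raid5_usable_disks(3, arrays=2)  # two 3-disk arrays, one per controller

print(f"One 6-disk RAID 5: {single} disks of usable capacity")
print(f"Two 3-disk RAID 5s: {split} disks of usable capacity")
```

The split layout costs one extra disk of capacity in exchange for spreading load across both controllers.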

You could always do some testing too if you don’t need to go live with the setup immediately.

If you can post more details, I’ll try to offer some more specific advice.

I’m not using flash based storage, but I chose to split my 24 disks in to 2 arrays, so that both controllers would be optimized and also add some safety in the event a single array failed.

Stephen

Thank you for the response. This MSA will be dedicated to VMware and will mostly be a dev and lab cluster and I’m not 100% sure of the total workload yet, but I suspect it won’t be super intense. I was just trying to plan ahead as much as I can.

I thought about also just using two traditional linear volumes (one SSD RAID 5 and one HDD RAID 10), but virtual pool would probably be the way to go, although I guess I can switch over later if I need to. I can’t find much information regarding how much VMware benefits from sub-LUN / performance tiering.

Hi Keith,

If it’s for dev/lab cluster, I’d go for the two array option. That way you can play with Storage vMotion if you’re licensed, and even without storage vMotion it’ll give you flexibility to arrange your workloads the way you’d like.

Load up the first array in bays 1-3, and the second in 13-15. This way you’ll be able to add/expand with more disks in the future if/when needed.

I’m not too familiar with the virtual pool/new features as I only use linear groups in my own environment. I literally spent 10 minutes setting up my array back when I got it, and it’s been sitting and working non-stop since, haha. Haven’t had the need to try any of the new features.

Cheers!

we just received our msa2040 sff dc. have it setup with 1.2tb 12g drives in raid10 and 8 iscsi sfp transceivers. (why? because we didn’t want to invest yet in two 10ge switches and dual cards for 6 servers)

the numbers of iops you show are very similar to what we receive as well (after the mtu was changed might i add). so far i’m very impressed with the san, however, i have one question which i haven’t been able to answer.

what’s the difference performance wise as well as application wise between linear and vdisk volumes?

neither the best practice guide for the msa2040 and vmware nor the user guide have explained this.

Hi Tal,

Thanks for writing, and that’s a very good question. Just for the record, in my case I’m using linear disk storage.

While I’ve never used the virtualized storage features on the MSA 2040, I believe that, depending on your setup, you can get better performance using them if you have multiple different workload types as well as different disk types. When rolling out your MSA deployment, you’ll need to plan it accordingly, and purchase the disk types accordingly.

Technically, if you configured tiered storage, the MSA would automatically put the high (hot) workloads on the higher performance tier storage types, and the “cold” storage on the slower storage types. If you have it configured properly it is all automated, it also enables other features that I won’t get in to (you can read this on the MSA 2040 best practices document).

You can also configure and dedicate SSD disks for additional read cache, or use them for the highest performance tier.

In my scenario, I purchased my MSA 2040 back before they had the virtualized storage features, so I purchased 24 X 900GB SAS drives and configured it linear. Since then I haven’t had the need to bother with it, so I’ve just left it that way.

If the feature was available at the time I did my configuration, I probably would have purchased both SAS and SSD disks, and configured the virtualized storage features along with configuring performance tiers. This would have allowed all my intensive I/O applications and VMs to utilize the faster storage types, and placed all my idle and low I/O applications on the slower disks.

I could be wrong, but I think you can also configure different RAID types on the different disk groups when using the virtualized storage features. This means you can configure the performance types based on the different RAID levels.

There are probably additional features in the virtualized storage system that you can read up on in the “MSA 2040 Best Practices” document. It’s all up to you and how you plan your deployment to use them or not.

Perhaps in the future when I need to upgrade, or when I have some time I’ll reconfigure everything from scratch and get the virtualized storage features configured.

Let me know if you have any additional questions!

Cheers,

Stephen

Keith, I hope you get a chance to read this update:

Since your post I’ve been doing quite a bit of reading and research on linear vs virtual. I actually finally took the plunge in my own environment, migrated data, killed my linear volumes, and provisioned new virtual disk groups.

As far as virtual vs linear, I don’t see any performance decrease, just a whole bunch of awesome new features.

In your environment, I would now actually recommend creating a couple of arrays and fully utilizing the performance tiering (if you have the performance tier licensing for your MSA).

I would recommend creating 2 arrays per controller (if you have one controller, create two arrays, if you have 2 controllers, create 4 arrays).

I would recommend creating both an SSD disk pool, and SAS disk pool (you can choose the RAID levels as required), for each independent controller. Configure the performance tier on the SSD disk group, and the standard SAS on your SAS disk group. Then go ahead and create the volumes and configure performance tiering.

Let me know how you make out if you try this!

Unfortunately I have no SSD’s in my environment and I don’t have a performance tiering license so I can’t test any of that stuff out.

Cheers,

Stephen

Hi Stephen, I have an MSA 2042 coming soon and was wondering if you could answer this. According to best practices from HPE, I should try to distribute arrays evenly between controllers but what if I want just one big volume? I was going to create multiple 5 disks RAID5 arrays and create a big volume on those…is that OK? I would use the SSD as ReadCache, or maybe I’d be better using them for tiering? I will only have 2 400GB SSDs.

Thanks

Hi berserk,

If you just want one big volume, you wouldn’t be able to distribute them amongst the controllers. It’s essentially your choice.

If you did create them evenly over both controllers, this helps with distributing the load across controllers as you’ll be utilizing both controllers.

Whichever option you choose, both will still provide redundancy in the event of a controller failure (as long as you have everything configured properly, and hosts have access to both controllers).

As for your question about Readcache vs tiering, it all depends on your workload type, and what you’re using the array for. If you go with performance tiering, you’d want to make sure that your “hot” data would be able to fit on to the SSD performance tier.

I hope this helps!

Cheers,

Stephen

I have a similar set up:

MSA 1040 10Gb controllers, 12x 900GB 10k SAS

Direct connected via SFP to

HP DL380 G8 configured for multipathing to the same LUN. These are VMWare hosts that I’m configuring to be able to fail over VMs in case of a host failure. Both physical servers see the same 8+1 RAID5 volume.

I am seeing extremely slow performance. My VMs take forever to come up. I’ve enabled jumbo frames, set my VMWare MTU settings to 8872 per some suggestions above. Still nothing seems to matter.

On the opening screen of the MSA1040, when I mouse over the controller ports, each says connected at 10Gb. However, between them it’s showing only 200-300 IOPS and 5-8MB/s. It’s pitiful. I’m really struggling to understand this.

Any help understanding why I’m not seeing crazy fast speeds would be greatly appreciated.

Hi Jason,

Sorry for the delay in responding…

I’m just curious, what multipath configuration are you using? Have you enabled round-robin? Also, do the active paths inside of the vSphere configuration show as valid (do the paths listed on the interface, reflect the actual paths that exist)?

Are you using multiple subnets for your configuration? If you are/aren’t, do you have iSCSI port binding configured?

Also, did you start your LUN numbering as 1 on the SAN (0 is used by the controllers)?

Cheers,

Stephen

Hi Stephen,

As I’m thinking about getting myself an MSA 2050/52, I’m really happy with your MSA 2040 posts. Plus big kudos to you for answering user comments. ;-).

Most of my data will be Hyper-V VMs with SQL Server databases from 10GB to 4TB, so I’m thinking about this setup:

Controller A) [10×1800 sas 10K (RAID10) TIER1 + 2×600/800 SSD (RAID1) TIER0] POOL1

Controller B) [10×1800 sas 10K (RAID10) TIER1 + 2×600/800 SSD (RAID1) TIER0] POOL2

Any chance of hammering your san for a little longer than 120s? Or with bigger data? So we could check how long the controller cache can keep up and what happens when it can’t 😉 ( any chance of using fio/diskspd from VM?)

Do you still use the same RAID configuration? Have you had any problems with stability or performance since you deployed the MSA? Any tips for future users?

Sorry for all the questions, but this will be my first SAN, so I’m a little bit anxious ;-)

Thanks,

Dawid

Hi Dawid,

Thanks for the kind words!

I am still running the same configuration and same RAID levels. Still 100% happy with the entire setup and configuration. I’m still very impressed with the MSA line of SANs and I still recommend them!

Unfortunately I can’t do any more testing with my system, since it’s all production, but I do have a couple notes/comments on performance which I’ll put below.

When I first purchased it, the unit did lock up once during a benchmark. I believe this had to do with the early firmware versions in combination with the RAID level, etc… In the first couple of firmware versions there were some serious bugs that got patched up.

Since the early firmware updates, I’ve been able to max the unit out (max the disks of course since I’m not using SSD), and I’ve never had any lockups or issues. On numerous occasions I’ve had it maxed with random type I/O (database transactions) and massive upgrades for 24 hours at a time. It works great!

Just as an example, on my MSA 2040 SAN, my sister company is running multiple SAP instances (ECC6 EHP7 Fiori/SAP UI 5 apps) including Solution Manager, Business Suite 2013, and IDES (International Demonstration and Education System), which we use for testing and development. The SAN handles it flawlessly, and rips through the DB transactions like they were nothing! What’s even better is that this workload has no effect on my own workloads that are running on the same LUNs (everyone’s happy, lol).

As I mentioned in the first paragraph, I’d recommend the MSA line of SANs to everyone, you won’t regret it.

As for tips to future users: Read ALL the documentation before deploying to production, maybe even play around with the unit at first to learn configuration, etc… Take advantage of the performance tiering (like you mentioned). Get comfortable with it first before rolling it out!

I hope this helps!

Cheers,

Stephen

I have nearly the same setup as you, except my HP server is a 380dl Gen8 and I have an HP 2920 switch in between the host and the SAN 2040. But I’ve only gotten around 55MB/s for years and it’s driving me crazy. I thought it was slow, but seeing your speeds today confirms to me that something is wrong. I don’t know who can help me configure the settings correctly; there are so many things to check everywhere: VMware, HP switch, SAN settings. Shoot me now.

Hi Sulayman,

Are you running 10Gb on the networking? Are you using jumbo frames, and have you configured MPIO properly?

Also, did you use the VMware best practices guide from HPe when doing the initial configuration?

Stephen

I didn’t set it up so I’m not sure.

We are using 10Gb between the SAN and the 2920 switch (rear 10Gb module), then on the front of the 2920 switch we have bunched 4x1Gb Cat5 and called it the DATA network, and all 9 VMs share this network connection. Even in holiday periods when all VMs are resting, the speeds never exceed 50MB/s or 1555 IOPS as reported on the SAN interface.

I believe the 2920 switch is using jumbo frames, or at least I’ve seen some references to jumbo frames somewhere, but I should check exactly where it says this. And the DATA VMware network is using jumbo.

MPIO: I’m not sure what to check, all I know is it means multipath lol

I can export the 2920 switch config and take screenshots from the SAN and VMware if that is helpful.

Hi Sulayman,

It sounds like you’ll need to do some investigation in to the SAN config, Network Config, subnets, MPIO, and other things.

It could be a number of different reasons why your SAN is performing so poorly: From network, to disks, to SAN, to config, to MPIO config, etc… You’ll also need to check your VMware config as well to make sure the subnetting is correct, LACP isn’t being used (because you need MPIO), and more.

If you want me to investigate and work on this for you, please see https://www.stephenwagner.com/hire-stephen-wagner-it-services/ .

Thanks,

Stephen

It seems you only tested the storage cache. A little bit of simple math will help you calculate the real IOPS in a real environment. One 900GB disk will give you 150 IOPS. 24 disks will give you 24*150=3600 IOPS in the best case. Maybe 4000-5000 operations per second at best. The 70,000 transactions shown are not even close. In a real production environment, the cache is useful only when writing. The chance of hitting the cache with RAID5 will be roughly 32GB/18000GB, i.e. about 0.0018 or 0.2%, which will not provide any benefit and will not increase the speed in any way.
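The back-of-the-envelope estimate in this comment can be reproduced with a short sketch. All inputs (150 IOPS per disk, 32GB of cache, 18000GB of capacity) are the commenter's own figures and have not been verified against the MSA 2040 specifications. The model also ignores RAID write penalties and assumes uniformly random access across the whole capacity.

```python
def spindle_iops(disks: int, iops_per_disk: int) -> int:
    """Naive aggregate random IOPS: every spindle contributes equally."""
    return disks * iops_per_disk


def random_cache_hit_ratio(cache_gb: float, capacity_gb: float) -> float:
    """Chance a uniformly random read lands in cache, as a fraction."""
    return cache_gb / capacity_gb


total_iops = spindle_iops(disks=24, iops_per_disk=150)
hit_ratio = random_cache_hit_ratio(cache_gb=32, capacity_gb=18000)

print(f"Estimated spindle-bound IOPS: {total_iops}")
print(f"Estimated random cache hit ratio: {hit_ratio:.2%}")
```

This is why benchmarks with no randomness and a small test file, like the MaxIOPS workloads in the post, say more about the controller and link than about the disks.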