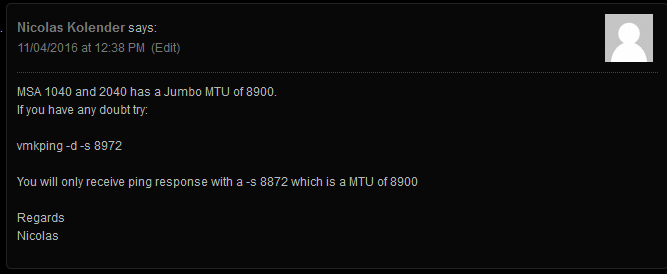

Yesterday, a reader (Nicolas) left a comment on one of my previous blog posts that brought the MTU for Jumbo Frames on the HPE MSA 2040 SAN to my attention.

Ever since I first started working with the MSA 2040, I’ve looked at numerous HPE documents outlining configuration and best practices. They confirm that the unit supports Jumbo Frames, but they never clearly state the MTU, and what they do say can be confusing. I had assumed that the unit supported a 9000 MTU while reserving 100 bytes for overhead. This is not necessarily the case.

Nicolas chimed in with details of his tests, which confirmed that the HPE MSA 2040 actually has a working MTU of 8900. I ran the same tests Nicolas outlined in my own environment and confirmed that packets would be fragmented if the MTU was set higher than 8900.

ESXi vmkping usage: https://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1003728
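When running this test, keep in mind that the `-s` value passed to vmkping is the ICMP payload, not the MTU. With `-d` (don't fragment) set, the largest payload that can get a reply is the MTU minus 28 bytes: a 20-byte IPv4 header (assuming no IP options) plus an 8-byte ICMP header. A minimal sketch of that arithmetic; the helper names are illustrative only:

```python
IPV4_HEADER = 20  # bytes, IPv4 header without options (assumption)
ICMP_HEADER = 8   # bytes, ICMP echo request header


def vmkping_payload(mtu: int) -> int:
    """Largest `vmkping -d -s` payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER - ICMP_HEADER


def mtu_from_payload(payload: int) -> int:
    """IP MTU implied by the largest payload that still got a reply with -d set."""
    return payload + IPV4_HEADER + ICMP_HEADER


for mtu in (1500, 8900, 9000):
    # "<target-ip>" is a placeholder for the array's iSCSI port address.
    print(f"MTU {mtu}: vmkping -d -s {vmkping_payload(mtu)} <target-ip>")
```

So against an array with an 8900 MTU, `vmkping -d -s 8872` should get replies while `vmkping -d -s 8972` should not.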

This is a big discovery, because packet fragmentation not only degrades performance but also floods the links with fragmented packets.

I went ahead and reconfigured my ESXi hosts to use an MTU of 8900 on the network used by my SAN. This immediately produced a MASSIVE performance increase (in both throughput and IOPS). I highly recommend that users of the MSA 2040 SAN confirm this on their own systems and update their MTUs as they see fit.

Also, this brings up another consideration. Ideally, on a single network, you want all devices to be running the same MTU. If your MSA 2040 SAN is on a storage network with other SAN devices (or any other device), you may want to configure all of them to use the MTU of 8900 if possible (and of course, don’t forget your servers).

A big thank you to Nicolas for pointing this out!

Very interesting post, but is this really a problem? During the TCP handshake the MSA offers a reduced MSS of 8860 bytes, so there should be no fragmentation at all.

Hi Deniz,

As far as I know, MSS is the maximum segment size of a single TCP segment, regardless of whether it’s carried in a single packet or in fragmented packets. Technically (again, AFAIK) the packets can still be fragmented, and the MSS won’t make a difference, since it applies across fragmented packets. My understanding is that the MSS is the maximum size of the final reconstructed packet (after the fragments are reassembled).

I like to have all my configurations set up according to “best practice”, so with this in mind I configure the MTUs at the IP layer to make sure no packet fragmentation occurs (since the MSA has a lower Jumbo Frame MTU than 9000).

If someone can correct me or expand on this, it would be appreciated.
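For TCP over IPv4, the MSS announced in the handshake is normally the interface MTU minus 40 bytes: a 20-byte IPv4 header plus a 20-byte TCP header, assuming neither carries options. By that arithmetic, the 8860-byte MSS Deniz observed corresponds exactly to an 8900-byte MTU, whereas a 9000-byte MTU would advertise 8960. A minimal sketch of the conversion (helper names are illustrative):

```python
IPV4_HEADER = 20  # bytes, IPv4 header without options (assumption)
TCP_HEADER = 20   # bytes, TCP header without options (assumption)


def mss_for_mtu(mtu: int) -> int:
    """Typical IPv4 TCP MSS advertised for a given interface MTU."""
    return mtu - IPV4_HEADER - TCP_HEADER


def mtu_for_mss(mss: int) -> int:
    """Interface MTU implied by an advertised IPv4 TCP MSS."""
    return mss + IPV4_HEADER + TCP_HEADER


print(mss_for_mtu(9000))  # 8960
print(mtu_for_mss(8860))  # 8900
```

The MSS only constrains TCP segments; the ICMP echo packets sent by vmkping are not limited by it, which is why the ping test can still expose the 8900-byte limit.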

Can you confirm where in ESXi you made the changes to the MTU? I made the change and now my ESXi hosts will not recognise the MSA2040 storage after a reboot. I changed the MTU on the virtual switch and on each NIC connected via iSCSI.

Hi Matthew,

If you’re changing it on a Distributed Virtual Switch (vDS), you need to make the change first on the vDS in vCenter Server, then on ALL your hosts on the host’s distributed vSwitch associated with the iSCSI storage, and you also need to change it on the vmk interfaces associated with the iSCSI storage connection.

On normal Virtual Switches, you’ll need to make the change on ALL your hosts to the virtual switches that are being used by iSCSI, and all the vmk interfaces used by the iSCSI storage.

I recommend having no virtual machines powered up while you do this.
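On standard vSwitches, the same changes can also be made from the ESXi shell with `esxcli network vswitch standard set` and `esxcli network ip interface set`. The sketch below only builds the command strings to run on each host; it does not execute anything. The vSwitch and vmk names (`vSwitch1`, `vmk1`, `vmk2`) are hypothetical, the values follow the vSwitch at 9000 / iSCSI vmk at 8900 layout, and vDS MTU changes still have to be made in vCenter:

```python
def esxcli_mtu_commands(vswitch: str, vswitch_mtu: int,
                        vmk_interfaces: list[str], vmk_mtu: int) -> list[str]:
    """Build esxcli commands that set the MTU on a standard vSwitch and its iSCSI vmk ports."""
    if vmk_mtu > vswitch_mtu:
        # A vmk port cannot send frames larger than its vSwitch allows.
        raise ValueError("vmk MTU cannot exceed the vSwitch MTU")
    commands = [
        f"esxcli network vswitch standard set --vswitch-name={vswitch} --mtu={vswitch_mtu}"
    ]
    for vmk in vmk_interfaces:
        commands.append(
            f"esxcli network ip interface set --interface-name={vmk} --mtu={vmk_mtu}"
        )
    return commands


# Hypothetical names: adjust to match each host's iSCSI vSwitch and vmk ports.
for cmd in esxcli_mtu_commands("vSwitch1", 9000, ["vmk1", "vmk2"], 8900):
    print(cmd)
```

Generating the commands once and running the same list on every host helps keep ALL hosts consistent, which is the point of the steps above.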

Just wanted to say you are AWESOME for this article. I was trying to figure out why the command would drop packets above a certain size until I found the exact same thing stated in this article.

Hello, we can confirm an MTU size of 8900 for the HPE MSA 2050, but on the HPE MSA 2040 we can successfully use an MTU size of 9000. The firmware we use is GL500P003 (an older one). Maybe an MTU size of 9000 is allowed there?

Hi Marcy,

It’s entirely possible that older firmware versions use a different MTU. Around the time I wrote that article, I was using the latest firmware available, and I tested and confirmed that 8900 was the maximum.

When I have time, I’ll do some additional testing on the latest version to see if that is still the case.

Cheers, and thanks for the info!

Stephen

Correction, the Firmware we use is GL105P003 on the HPE MSA 2040. Sorry!

OK, vmkping -d -s 8972 works perfectly with the HPE MSA 2040 and firmware GL105P003; on our HPE MSA 2050 with firmware VL100P001, only vmkping -d -s 8872 works.

Furthermore, we have a Windows 2012 R2 server (HPE DL380 Gen9) acting as a Veeam B&R server, with an HPE FlexFabric 10Gb 2-port 534FLR-SFP+ adapter directly connected to the HPE MSA 2050. Under the Advanced tab, the Jumbo Packet size only allows 9614, 9014, 4088 and 1514, and I really wonder whether 4088 is supposed to be used, since the MTU size of the HPE MSA2040 is only 8900.

Deniz, I think this is a problem: a NIC with TSO enabled and configured with the standard MTU of 9000 will produce large fragments, and I expect they will have to be handled by the processor on the HPE MSA side.

From the HPE MSA 2040 best practices manual:

Jumbo frames:

A normal Ethernet frame can contain 1500 bytes whereas a jumbo frame can contain a maximum of 9000 bytes for larger data transfers. The MSA reserves some of this frame size; the current maximum frame size is 1400 for a normal frame and 8900 for a jumbo frame. This frame maximum can change without notification. If you are using jumbo frames, make sure to enable jumbo frames on all network components in the data path.

Regards,

Edoardo

Hello Stephen, great article!

I’ve now configured these parameters on both ESXi hosts:

– vSwitch for iSCSI = 8900 MTU

– iSCSI-1 (vmk1/vmnic4) = 8900 MTU

– iSCSI-2 (vmk2/vmnic5) = 8900 MTU

vmkping -d -s 8872 (response received OK)

MSA 1040 (firmware GL220P008)

4 Disks SAS 4TB 7.2k in RAID 10

Servers HP ML350-G9 – ESX 6.5.0-5310538

Host Adapter Intel 10Gbit 82599 (ixgbe driver 4.5.2)

I suspect the performance is not as good as it should be (test file size: 1GB):

Throughput Read: 537.00 MB/sec

Throughput Write: 388.00 MB/sec

Latency Read: 79.78 ms

Latency Write: 10.52 ms

Is there a way to confirm that no packets are being fragmented, as you described?

Is there a way to check if the MSA is negotiating the Jumbo frames at the actual size of 8900?

Any other suggestion?

Thanks so much

Paolo

Thank you for this post, I found it quite helpful when setting up Jumbo Frames with our new MSA 1050. When running through this ping test, I was only able to get a response once I lowered the data size to 8858 bytes. Does that mean the MSA 1050 has an effective MTU of 8886? It seems like an odd number, but since that’s the largest frame I could get a ping response to, I’m setting my MTU to 8886 and seeing how things go.

Sounds like the issue happens on the 1050 as well. Thanks for posting! 🙂
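Rather than lowering the `-s` value by hand, the largest working payload can be found with a binary search between a size known to work and a size known to fail. A minimal sketch, where `probe` is a hypothetical callable standing in for "run `vmkping -d -s <size>` against the array and report whether a reply came back"; here it is simulated with a fixed path MTU rather than calling vmkping:

```python
from typing import Callable


def largest_working_payload(probe: Callable[[int], bool], low: int, high: int) -> int:
    """Binary-search the largest payload size for which probe(size) succeeds.

    Assumes probe(low) is True, probe(high) is False, and that every size
    below a working size also works.
    """
    while high - low > 1:
        mid = (low + high) // 2
        if probe(mid):
            low = mid   # mid works, so the answer is at least mid
        else:
            high = mid  # mid fails, so the answer is below mid
    return low


def simulated_probe(path_mtu: int) -> Callable[[int], bool]:
    """Stand-in for `vmkping -d -s size`: succeeds if the packet fits the path MTU."""
    return lambda size: size + 28 <= path_mtu  # 20-byte IPv4 + 8-byte ICMP headers


payload = largest_working_payload(simulated_probe(8886), low=1472, high=8973)
print(payload, payload + 28)  # 8858 8886
```

This reproduces the numbers above: a largest payload of 8858 bytes implies an effective IP MTU of 8886 bytes. Each real probe only takes a few pings, so the search finishes in about a dozen attempts.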

Hi Stephen,

I am using Cisco Nexus switches between the hosts and the MSA2050. We also set the MTU on the switches to 8900, and the hosts have 8900 on the vSwitches and network cards. Still, the storage drops its connection to the hosts on random ports.

BR,

Radu

Hi Radu,

I’d recommend setting the switches to 9000 or higher; if you’re using VLANs, the VLAN tags may add extra bytes to each frame. I’d recommend setting only the hosts to match the SAN.

Also, this post was about the MSA2040, so I’d recommend doing some testing or reviewing the documentation to see whether the same issue occurs on the newer 2050.

Cheers,

Stephen
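The reason the switches need headroom is that an IP MTU of 8900 does not describe the whole Ethernet frame a switch carries: each frame also has a 14-byte Ethernet header and a 4-byte FCS, plus 4 bytes for an 802.1Q VLAN tag when tagging is in use. How a given switch’s MTU setting accounts for these bytes varies by vendor and platform, so this sketch only shows the raw arithmetic:

```python
ETH_HEADER = 14  # destination MAC + source MAC + EtherType
FCS = 4          # frame check sequence
VLAN_TAG = 4     # 802.1Q tag, present only on tagged frames


def frame_size(ip_mtu: int, vlan_tagged: bool = False) -> int:
    """Total Ethernet frame size in bytes for a full-size IP packet."""
    return ip_mtu + ETH_HEADER + FCS + (VLAN_TAG if vlan_tagged else 0)


for mtu in (8900, 9000):
    print(mtu, frame_size(mtu), frame_size(mtu, vlan_tagged=True))
# 8900 8918 8922
# 9000 9018 9022
```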

Hi Stephen,

I see you mentioned “I went ahead and re-configured my ESXi hosts to use an MTU of 8900”.

Did you set 8900 on both the vSwitches and the VMkernel adapters, or just on the vSwitches?

Thank you in advance,

Radu

Hi Radu,

On my vDS switches (in your case, the vSwitch), I set the switch MTU to 9000, but I set the iSCSI vmk adapters to 8900 to match the 8900 MTU that the MSA storage arrays use.

Cheers,

Stephen
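The layout described here (switching layers at or above the array’s MTU, iSCSI vmk interfaces matched to the array) can be written as a simple consistency check. A sketch using a hypothetical `check_iscsi_mtus` helper; it only compares numbers you supply and does not query ESXi, the switches, or the array. Since switch MTU semantics vary by vendor, treat the physical switch value as the IP-level MTU it will pass:

```python
def check_iscsi_mtus(san_mtu: int, vmk_mtu: int, vswitch_mtu: int,
                     physical_switch_mtu: int) -> list[str]:
    """Return a list of problems with an iSCSI MTU layout (an empty list means OK)."""
    problems = []
    if vmk_mtu > san_mtu:
        problems.append(f"vmk MTU {vmk_mtu} exceeds the array's MTU {san_mtu}")
    if vswitch_mtu < vmk_mtu:
        problems.append(f"vSwitch MTU {vswitch_mtu} is below the vmk MTU {vmk_mtu}")
    if physical_switch_mtu < vswitch_mtu:
        problems.append(
            f"physical switch MTU {physical_switch_mtu} is below the vSwitch MTU {vswitch_mtu}"
        )
    return problems


# The layout described above: vSwitch and switches at 9000, iSCSI vmk at 8900.
print(check_iscsi_mtus(san_mtu=8900, vmk_mtu=8900, vswitch_mtu=9000, physical_switch_mtu=9000))  # []

# A mismatched layout: vmk sends 9000-byte packets, physical switch only allows 8900.
for problem in check_iscsi_mtus(san_mtu=8900, vmk_mtu=9000, vswitch_mtu=9000, physical_switch_mtu=8900):
    print(problem)
```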