When it comes to virtualized workloads, one thing I commonly see overlooked in the design of the solution is the placement of those workloads. In this post, I want to cover VMware vSphere VM placement rules using the “VM/Host Rules” feature.

This is a feature that I commonly see overlooked and not configured, especially in smaller single-cluster environments. However, I’ve also seen this happen in very large-scale environments.

Let’s cover the why, what, who, and how…

VM Workloads

While VMware vSphere has a number of built-in technologies for redundancy, load balancing, and availability, we often find that the workloads in the larger solution, specifically third-party platforms, come with their own mechanisms that accomplish the same thing.

We need to identify which HA (High Availability) or redundancy solution to use, based on the application, service, and how it works.

For example, with VMware vSphere HA (High Availability), when a host goes offline, the HA agents on the remaining hosts detect the failure and restart the affected workloads on other online hosts. Both the failure detection and the guest boot take time, resulting in a loss of service during this period.

Third-party solutions often have their own high availability or redundancy built into the solution, such as Microsoft Active Directory. In this case, with a standard configuration, any domain controller can respond to a client’s request for resources at any time. If one DC goes offline, the other DCs can respond to the request, resulting in no downtime.

Obviously, in the case of Active Directory Domain Controllers, you’d much prefer to have multiple DCs in your environment, instead of using one with vSphere HA.

Additionally, if you do have multiple domain controllers, you’ll want to make sure they aren’t all placed on the same ESXi host. This is where we start to incorporate VM placement into our solution.

VM Placement

When it comes to third-party solutions like those mentioned above, we need to identify these workloads and factor them into the design of the solution we are implementing, maintaining, or improving.

Example of VM workloads used with VM Placement

A few examples of these workloads with their own load-balancing and availability technologies:

- Microsoft Windows Active Directory Domain Controllers

- Microsoft Windows Servers running DNS/DHCP Servers

- Virtualized Active/Active or Active/Passive Firewall Appliances

- VMware Horizon UAG (Unified Access Gateway) configured in HA mode

- Other servers/services that have their own availability systems

As you can see, these applications all have their own solution for availability, so we must ensure the different “nodes” or “instances” are running on different ESXi hosts to avoid a single host failure bringing down the entire solution.

Unless otherwise specified by the 3rd party vendor, I would recommend using VM/Host Rules in combination with vSphere DRS and HA.
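To make the separation requirement concrete, here is a minimal sketch in plain Python that checks whether any two members of a group currently share a host. It is only an illustration of the idea, not how DRS evaluates rules internally. The inventory dictionary, the VM and host names, and the `find_separation_violations` helper are all hypothetical.

```python
from collections import defaultdict


def find_separation_violations(placement, rule_members):
    """Return {host: [vms]} for each host running more than one VM from the rule."""
    by_host = defaultdict(list)
    for vm in rule_members:
        host = placement.get(vm)
        if host is not None:  # skip VMs that are not in the inventory
            by_host[host].append(vm)
    # Only hosts holding two or more rule members break the separation.
    return {host: sorted(vms) for host, vms in by_host.items() if len(vms) > 1}


# Hypothetical inventory: VM name -> ESXi host it currently runs on.
placement = {
    "DC01": "esxi-01",
    "DC02": "esxi-01",
    "DC03": "esxi-02",
    "FW-A": "esxi-02",
}

violations = find_separation_violations(placement, ["DC01", "DC02", "DC03"])
print(violations)  # {'esxi-01': ['DC01', 'DC02']}
```

In this made-up inventory, a failure of esxi-01 would take down two of the three domain controllers at once, which is exactly the situation a “Separate Virtual Machines” rule is meant to prevent.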

Configuring VM Placement with VM/Host Rules

To configure these rules, follow the instructions below:

- Log on to your VMware vCenter Server

- Select a Cluster

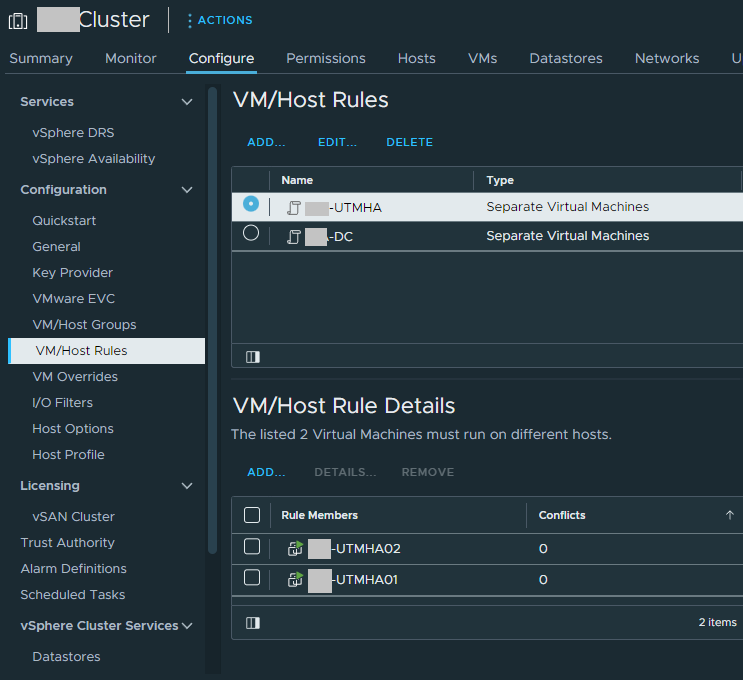

- Click on the “Configure” tab, and then “VM/Host Rules”

- Here you can Add/Edit/Delete VM/Host Rules

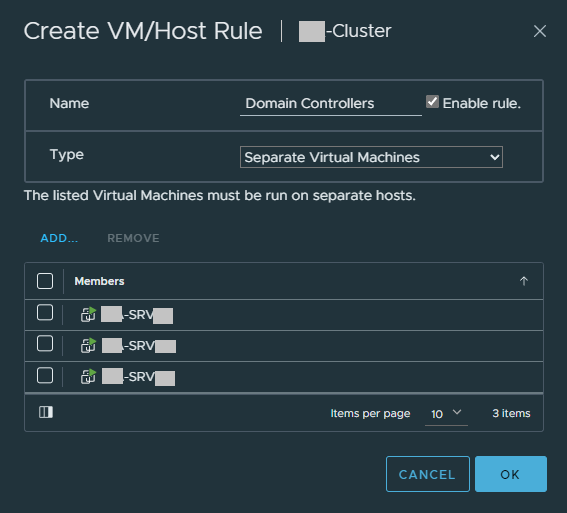

- Click on “Add”, and give the rule a new name (Example: Domain Controllers)

- For “Type”, select “Separate Virtual Machines”

- Click “Add” and select your Domain Controllers and add them to the rule.

After you click “OK”, the rule is saved, and DRS will make sure these VMs run on separate hosts.

Below you can see another example of a configured system, separating 2 Active/Passive Firewall appliances.

As you can see, VM placement with VM/Host Rules is very easy to configure and deploy.

Additional Considerations

Note that if you implement these rules and do not have enough hosts to fulfill the requirements, DRS may fail to evacuate a host when you place it in maintenance mode or remediate it with vLCM (vSphere Lifecycle Manager).

In this case, you’ll need to manually vMotion the VMs to other hosts (violating the rule) or shut some of them down.
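As a rough sanity check before creating a rule, you can compare the number of VMs in the rule with the number of hosts left once one host is evacuated. The sketch below is a simplified count only: it assumes every VM in the rule is powered on and ignores host capacity, other rules, and everything else DRS considers. The `can_evacuate_host` helper and its parameters are hypothetical.

```python
def can_evacuate_host(total_hosts, rule_vm_count, hosts_in_maintenance=0):
    """Return True if each VM in the rule can still get its own host
    after one more host is taken out of service."""
    remaining_hosts = total_hosts - hosts_in_maintenance - 1
    return remaining_hosts >= rule_vm_count


# Two hosts, two separated firewall appliances: evacuation would break the rule.
print(can_evacuate_host(total_hosts=2, rule_vm_count=2))  # False

# Three hosts, two separated firewall appliances: one host can be evacuated.
print(can_evacuate_host(total_hosts=3, rule_vm_count=2))  # True
```

Put another way, a separation rule covering N powered-on VMs needs at least N + 1 hosts in the cluster if you want to put a host into maintenance mode without touching the rule.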