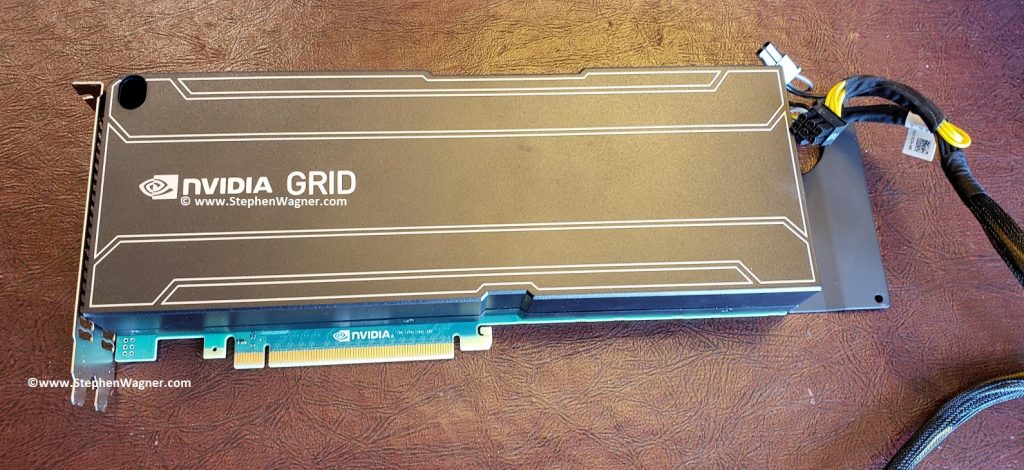

I can’t tell you how excited I am that after many years, I’ve finally gotten my hands on an Nvidia GRID K1 GPU. This card will be used in my homelab to learn and demo Nvidia GRID accelerated graphics on VMware Horizon View. In this post I’ll outline the details, installation, configuration, and my thoughts. And of course I’ll have plenty of pictures below!

The focus will be to use this card both with vGPU and with 3D accelerated vSGA inside an HPE server running ESXi 6.5 and VMware Horizon View 7.8.

Please Note: As of late 2020, hardware h.264 offloading no longer functions with VMware Horizon and VMware BLAST on Nvidia GRID K1/K2 cards. More information can be found at https://www.stephenwagner.com/2020/10/10/nvidia-vgpu-grid-k1-k2-no-h264-session-encoding-offload/

Please Note: Some, most, or all of what I’m doing is not officially supported by Nvidia, HPE, and/or VMware. I am simply doing this to learn and demo, and there was a real possibility it wouldn’t work since I’m not following the vendor HCLs (Hardware Compatibility Lists). If you attempt this or something similar, you do so at your own risk.

For some time I’ve been trying to source either an Nvidia GRID K1/K2 or an AMD FirePro S7150 to get started with a simple homelab/demo environment. One reason it took so long was that I didn’t want to spend too much, especially given the chance it might not even work.

Essentially, I have 3 servers:

- HPE DL360p Gen8 (Dual Proc, 128GB RAM)

- HPE DL360p Gen8 (Dual Proc, 128GB RAM)

- HPE ML310e Gen8 v2 (Single Proc, 32GB RAM)

While the DL360p servers are beefy enough and have enough power (dual redundant power supplies) and resources, their PCIe slots are unfortunately half-height. To use a dual-height card, I’d need to rig up an eGPU (external GPU) setup outside of the server.

As for the ML310e, it’s an entry-level tower server. While it does support dual-height (dual-slot) PCIe cards, it only has a single 350W power supply, lacks some fancy server technologies (I’ve had issues with VT-d, etc.), and has only a single processor. I should be able to install the card, but I was worried about powering it (it has no 6-pin PCIe power connector) and about whether ESXi would be able to use it.

Finally, I was worried about cooling. The GRID K1 and GRID K2 are typically passively cooled and meant to be installed into rack servers with fans running at jet-engine speeds. If I used the DL360p with an external setup, the card would sit outside the server’s airflow entirely. If I used the ML310e internally, I had significant doubts the cooling would be enough. The ML310e does have plastic air baffles, but only one fan for the expansion card area, and of course not all of that air would pass through the GRID K1.

The Purchase

Because of a limited budget, and the possibility I might not even get it working, I didn’t want to spend too much. I found an eBay seller in my city who had a couple of GRID K1 and GRID K2 cards, as well as a bunch of other cool stuff.

We spoke and he gave me a wicked deal on the GRID K1. I thought this was a fantastic idea because the K1’s power requirement is significantly lower (130 W max versus 225 W max for the K2), which made it more likely to work in the ML310e.

The power figures above come from Nvidia’s GRID K1/K2 datasheet:

https://www.nvidia.com/content/cloud-computing/pdf/nvidia-grid-datasheet-k1-k2.pdf
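For a rough sense of why the lower power draw mattered, here is a back-of-the-envelope check. It assumes the commonly cited PCIe limits of 75 W from an x16 slot plus 75 W per 6-pin auxiliary connector, and it ignores everything else drawing from the ML310e’s 350 W supply, so treat it as a sanity check rather than a guarantee. The `gpu_fits_budget` helper is hypothetical.

```sh
#!/bin/sh
# Rough PCIe power-budget check (a sketch, not a vendor tool).
# Assumed limits: 75 W from an x16 slot, 75 W per 6-pin PCIe connector.
SLOT_W=75
SIX_PIN_W=75

# gpu_fits_budget BOARD_WATTS SIX_PIN_COUNT
# Prints "ok" if the card's max board power fits within slot + 6-pin supply,
# otherwise prints "over by N W". Returns 0 or 1 accordingly.
gpu_fits_budget() {
  board_w=$1
  six_pins=$2
  available=$((SLOT_W + six_pins * SIX_PIN_W))
  if [ "$board_w" -le "$available" ]; then
    echo "ok"
    return 0
  fi
  echo "over by $((board_w - available)) W"
  return 1
}

# GRID K1 (130 W) with one 6-pin connector: 150 W available.
#   gpu_fits_budget 130 1   -> ok
# GRID K2 (225 W) against the same single 6-pin setup:
#   gpu_fits_budget 225 1   -> over by 75 W
```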

We set a time and place to meet. In the meantime, I ran out to a local supply store to buy an LP4 power splitter and an LP4 to 6-pin PCIe power adapter. There were no free power connectors inside the ML310e, so both were needed. I still thought the chances of this working were slim…

These are the adapters I purchased:

- StarTech LP4 to 2x LP4 Power Y Splitter Cable M/F

- StarTech 6in LP4 to 6 Pin PCI Express Video Card Power Cable Adapter

Preparation and Software Installation

I also went ahead and downloaded the Nvidia GRID software package. It includes the release notes, user guide, the ESXi VIB driver (covering vSGA and vGPU), and guest drivers for vGPU and passthrough. The package also includes the GRID vGPU Manager. The driver I used was from:

https://www.nvidia.com/Download/driverResults.aspx/144909/en-us

To install it, I copied the VIB file “NVIDIA-vGPU-kepler-VMware_ESXi_6.5_Host_Driver_367.130-1OEM.650.0.0.4598673.vib” to a datastore, enabled SSH, and ran the following command:

esxcli software vib install -v /path/to/file/NVIDIA-vGPU-kepler-VMware_ESXi_6.5_Host_Driver_367.130-1OEM.650.0.0.4598673.vib
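To double-check the install, `esxcli software vib list` shows the installed VIBs and `vmkload_mod -l` shows the loaded kernel modules (the `nvidia` module typically only appears after the host has rebooted). The two helper functions below are hypothetical wrappers that read that output on stdin; matching on “nvidia” is an assumption, since the exact VIB name varies by driver release.

```sh
#!/bin/sh
# Hypothetical helpers to confirm the NVIDIA host driver is present on ESXi.

# Reads `esxcli software vib list` output on stdin; succeeds if any
# line mentions "nvidia" (case-insensitive), e.g. the VIB name or vendor.
nvidia_vib_installed() {
  grep -qi 'nvidia'
}

# Reads `vmkload_mod -l` output on stdin; succeeds if a module named
# exactly "nvidia" is listed in the first column.
nvidia_module_loaded() {
  awk '$1 == "nvidia" { found = 1 } END { exit !found }'
}

# On the ESXi host (after the reboot that loads the module):
#   esxcli software vib list | nvidia_vib_installed && echo "VIB installed"
#   vmkload_mod -l | nvidia_module_loaded && echo "nvidia module loaded"
```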

The command completed successfully, and I shut down the host. Now I just had to wait for the meetup.

We finally met, and the transaction went smoothly in a parking lot (people were staring as I handed him cash and he handed me a big brick of something folded inside grey anti-static wrap). The card looked to be in beautiful shape, and we had a good but brief chat. I’ll definitely be buying more hardware from him.

Hardware Installation

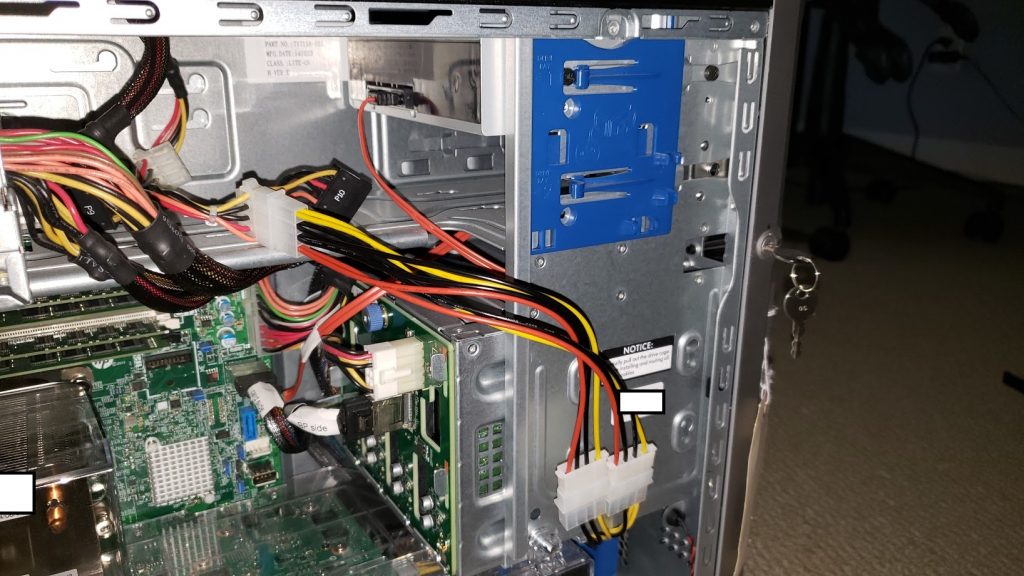

Installing the card in the ML310e was difficult and took time and care. First I had to remove the plastic air baffle. Then I had trouble getting the card into the case, as the rear bracket was 1cm too long to fit straight in. I had to finesse it and slide it in at an angle, but I finally got it installed. The bracket at the other end of the card (toward the front of the case) slid into the blue plastic support bracket. This was nice, since the ML310e was designed for extremely long PCIe expansion cards and has this front bracket to help support and hold the card up.

For power, I disconnected the DVD-ROM (who uses those anyways, right?) and connected the LP4 splitter and the LP4 to 6-pin power adapter. Finally, I hooked it up to the card.

I laid the cables out nicely and then re-installed the air baffle. Everything was snug and tight.

Please see below for pictures of the Nvidia GRID K1 installed in the ML310e Gen8 V2.

Host Configuration

Powering on the server was a tense moment for me. A few things could have happened:

- Server wouldn’t power on

- Server would power on but hang and report a health alert

- Nvidia GRID card could overheat

- Nvidia GRID card could overheat and become damaged

- Nvidia GRID card could overheat and catch fire

- Server would boot but not recognize the card

- Server would boot, recognize the card, but not work

- Server would boot, recognize the card, and work

With great suspense, the server powered on as normal. No errors or health alerts were presented.

I logged in to iLO on the server and watched it perform the BIOS POST and start its boot into ESXi. Everything looked normal.

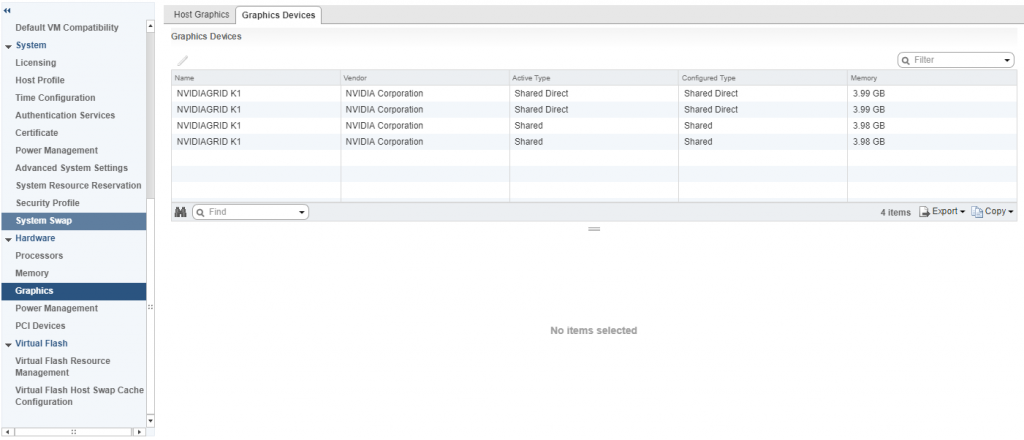

After ESXi booted and the server came online in vCenter, I checked the host and confirmed the GRID K1 was detected. I then configured 2 of its 4 GPUs for vGPU and the other 2 for 3D vSGA.
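From the ESXi shell, `nvidia-smi -L` lists each physical GPU on its own line, so a GRID K1 should show up as four GPUs. A minimal sketch of that check, assuming lines of the form `GPU 0: GRID K1 (UUID: ...)`; the function names are hypothetical.

```sh
#!/bin/sh
# Count GPUs reported by `nvidia-smi -L` (read on stdin).
count_gpus() {
  grep -c '^GPU [0-9]'
}

# expect_gpus N: succeed only if exactly N GPUs are listed on stdin.
expect_gpus() {
  [ "$(count_gpus)" -eq "$1" ]
}

# On the host:
#   nvidia-smi -L | expect_gpus 4 && echo "all four K1 GPUs visible"
```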

VM Configuration

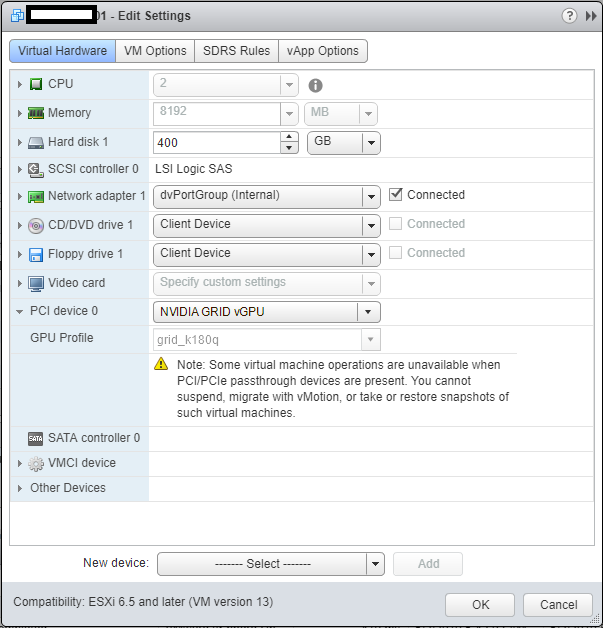

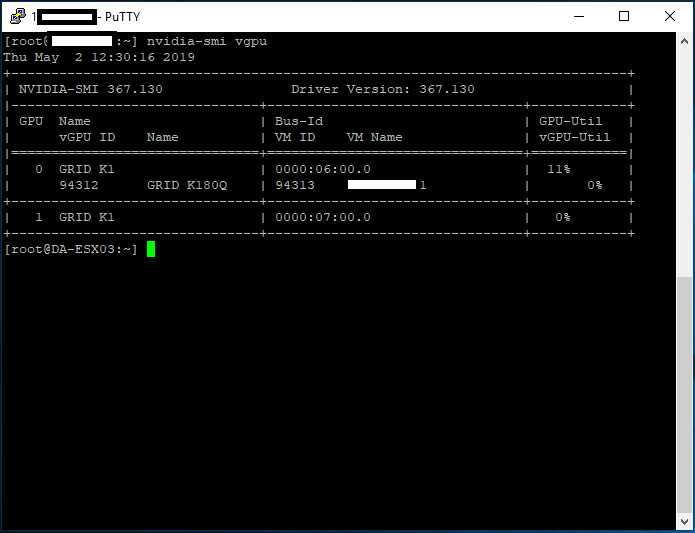

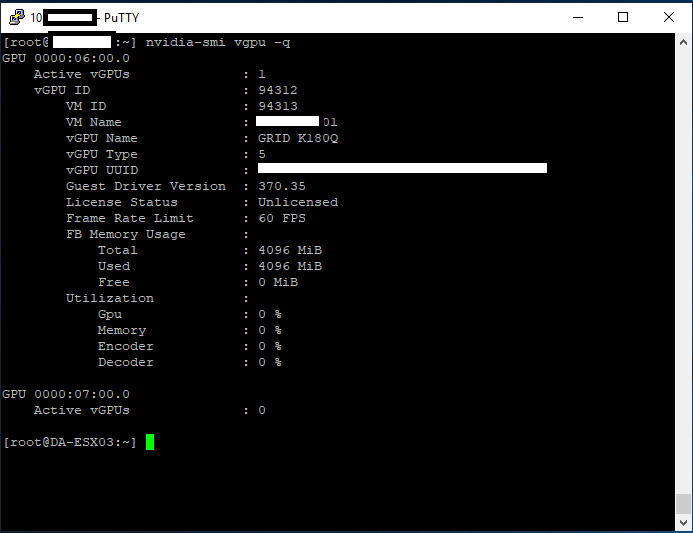

I restarted the X.org service (required after changing the options above) and then added a vGPU to a virtual machine I had already configured and was using for VDI. To do this, add a “Shared PCI Device”, select “NVIDIA GRID vGPU”, and pick a profile; I chose the highest profile available on the K1, “grid_k180q”.

After adding the device and clicking OK, you should see a warning that you must allocate and reserve all resources for the virtual machine. Click “OK” and continue.
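The X.org restart and the resulting VM settings can also be handled from the ESXi shell. On the host, X.org is normally restarted with its init script (`/etc/init.d/xorg stop`, then `/etc/init.d/xorg start`). The helpers below are a minimal sketch that reads a VM’s .vmx file; they assume vSphere records the Shared PCI Device profile as `pciPassthru0.vgpu` and the memory reservation (in MB) as `sched.mem.min` next to `memSize`. The function names and the datastore path are hypothetical.

```sh
#!/bin/sh
# Run on the ESXi host after changing the host graphics settings:
#   /etc/init.d/xorg stop
#   /etc/init.d/xorg start
#
# The helpers below read a VM's .vmx file on stdin (hypothetical helpers;
# key names are assumptions about how vSphere stores these settings).

# vmx_value KEY: print the value of KEY (case-insensitive), without quotes.
vmx_value() {
  awk -F'"' -v key="$1" '
    { k = $1; gsub(/[ \t=]/, "", k) }
    tolower(k) == tolower(key) { print $2; exit }'
}

# vgpu_profile: print the profile of the first vGPU device (e.g. grid_k180q).
vgpu_profile() {
  vmx_value pciPassthru0.vgpu
}

# memory_fully_reserved: succeed if the reservation covers all configured memory.
memory_fully_reserved() {
  vmx=$(cat)
  size=$(printf '%s\n' "$vmx" | vmx_value memSize)
  min=$(printf '%s\n' "$vmx" | vmx_value sched.mem.min)
  [ -n "$size" ] && [ -n "$min" ] && [ "$min" -ge "$size" ]
}

# Example (path is hypothetical):
#   vgpu_profile < /vmfs/volumes/datastore1/vdi01/vdi01.vmx
#   memory_fully_reserved < /vmfs/volumes/datastore1/vdi01/vdi01.vmx && echo "fully reserved"
```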

Power On and Testing

I went ahead and powered on the VM. I used the vSphere VM console to install the Nvidia GRID driver package (included in the driver ZIP file downloaded earlier) on the guest. I then restarted the guest.

After restarting, I logged in via Horizon and could instantly tell it was working. The next step was to disable the VMware SVGA 3D display adapter in “Device Manager” and restart the VM again.

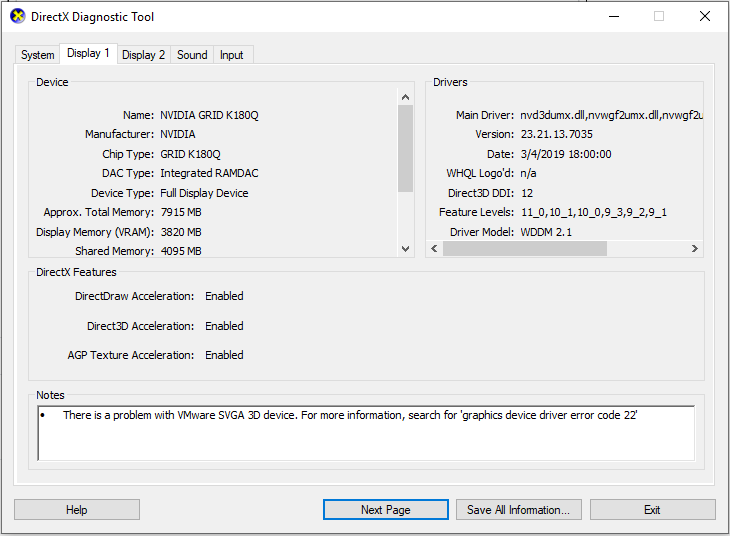

After the restart, to confirm I had full 3D acceleration, I opened the DirectX Diagnostic Tool via “Start” -> “Run” -> “dxdiag”.

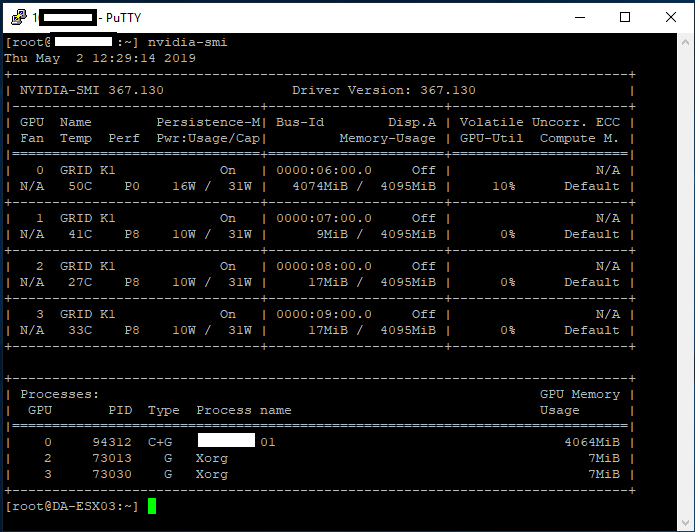

It worked! Now it was time to check the temperature of the card to make sure nothing was overheating. I enabled SSH on the ESXi host, logged in, and ran the “nvidia-smi” command.

According to this, the GPUs ranged from 33°C to 50°C, which was PERFECT! Under further stress testing, I haven’t gotten a core to go above 56°C. The ML310e also has a BIOS option to increase fan speed, which I may test in the future if temperatures climb.

With “nvidia-smi” you can see the 4 GPUs, power usage, temperatures, memory usage, GPU utilization, and processes. It’s the main management tool for the card, and it accepts other flags for more specific information.
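One such flag is `--query-gpu`, which prints only the fields you ask for; with `--format=csv,noheader,nounits` the output is easy to parse. A sketch of a temperature check built on that: the `hot_gpus` helper and the limit you pass it are illustrative assumptions, not Nvidia guidance.

```sh
#!/bin/sh
# On the ESXi host, print index, name, temperature and power draw per GPU as CSV:
#   nvidia-smi --query-gpu=index,name,temperature.gpu,power.draw --format=csv,noheader,nounits
#
# hot_gpus LIMIT: read that CSV on stdin and print "GPU <index>: <temp> C" for any
# GPU at or above LIMIT degrees C. Returns 1 if any GPU is at or above LIMIT.
hot_gpus() {
  awk -F', *' -v limit="$1" '
    $3 + 0 >= limit + 0 { printf "GPU %s: %s C\n", $1, $3; hot = 1 }
    END { exit hot }'
}

# Example:
#   nvidia-smi --query-gpu=index,name,temperature.gpu,power.draw \
#     --format=csv,noheader,nounits | hot_gpus 60
```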

Final Thoughts

Overall I’m very impressed, and it’s working great. While I haven’t tested any games, it works perfectly for videos, music, YouTube, and multi-monitor use on my 10ZiG 5948qv. I’m using 2 displays, both running at 1920×1080.

I’m looking forward to doing some tests with this VM while continuing to use vGPU. I will also be doing some testing utilizing 3D Accelerated vSGA.

The two coolest parts of this project are:

- 3D Acceleration and Hardware h.264 Encoding on VMware Horizon

- Getting a GRID K1 working on an HPE ML310e Gen8 v2

I highly recommend a setup like this for your own homelab!

Uses and Projects

Well, I’m writing this “Uses and Projects” section some time after the original article (it’s now March 8th, 2020). I couldn’t be more impressed with this setup, which I use as my daily driver.

Since setting this up, I’ve used it remotely on airplanes, while working on the road, and even for video editing.

Some of the projects (and posts) I’ve done can be found here:

Leave a comment and let me know what you think! Or leave a question!

Good stuff.

I have a couple of Quadro K4000s in a DL380p running ESXi 6.5 U3.

Looking forward to seeing your testing with 3D Accelerated vSGA.

Just out of curiosity, do you have the K4000 cards working with VMware Horizon? vGPU and vSGA?

Good article thanks, Stephen.

I’m running 2 ESXi 6.7U3 hosts and Horizon 7.9 along with vSAN in my home lab (all licensing purchased through VMUG Advantage). I bought a K2 off eBay for $150 without doing my homework. Before today I had good luck getting the VIB file installed on the host with the K2 in it. I’ve made the Shared Direct host config changes and rebooted the host… I can then edit the settings of any Windows guest on that host and choose any of the available profiles. Everything seems to work as it should, except that I can’t boot the guest operating systems once they’re configured to use the K2. Some kernel message comes up in vCenter… Google isn’t very helpful. It seems that if my host were 6.5 rather than 6.7, it would probably work as intended. Did you try a more recent version of ESXi before figuring out 6.5 was the key? Thanks for documenting your journey…

Hi David,

So were you able to successfully install the Nvidia vGPU drivers on 6.7? Also, are you passing through the entire card, or a vGPU profile via the Shared PCI Device?

To be honest, I’m not sure if you’ll be able to get it working. AFAIK the drivers are only compatible with 6.5. But definitely keep me posted on your progress!

Cheers,

Stephen

Hei Stephen,

Ya… I installed the latest version of the K2 drivers without issue. I also changed the graphics to Shared Direct on the host. It detected the K2 just fine (running nvidia-smi on the host over SSH comes back looking good). I’m not doing any PCIe passthrough on the host. It’s possible that the driver incompatibility with 6.7, as you pointed out, is what’s causing it to bomb out as soon as I power on any guest configured with a K2 profile. I stumbled on your blog searching the inner webs for clues on what might be going on. I bet it’s just an incompatibility with ESXi 6.7. I suppose I’ll add another host to the lab running 6.5 to see if I have better luck. If not… I may just watch some eBay auctions for a currently supported Nvidia GRID card to play with (a P100 perhaps). I’ll update on my progress getting things working.

Thanks!

David

Hi David,

Just remember that the newer Nvidia cards require licensing for vGPU!

Keep us posted on how you make out though!

Cheers


I have a GTX 1050 in my Cisco C240-M3 server that’s passed through in ESXi 6.7 to a Win10 VM that I connect to via Horizon 7.12. My desktop computer runs 3x 1080p monitors, and I’m looking to move my daily driver from my physical desktop to my VDI.

I’ve been contemplating getting a Grid K1 or K2 for this, but have been testing with the 1050 for now. Although GPU passthrough works as I’d expect, desktop performance in the VDI isn’t anywhere close to my physical desktop experience. I’ve made some optimizations with the VMware Blast GPOs, but video playback is noticeably degraded compared to my physical desktop. Both my desktop and VDI are on the same network (with Horizon infrastructure servers being on different networks), and I’m utilizing this strictly locally for the time being.

What has your experience been like with video playback, even with things like YouTube? Is your VDI experience as smooth as your physical desktop experience? If so, what optimizations have you made, or is a GRID card simply what I’m missing to get better VDI performance, even though my GTX 1050 is the higher-performing card?

Thanks!

Hey Mike,

Great questions! So in my environment everything runs like butter! Everything is smooth, no issues, etc… I regularly watch YouTube, I edit 1080p and 4k video and love it.

I use a 10ZiG Zero Client (I have a couple scattered throughout my house) to connect and it’s great!

First and foremost, keep in mind that the GRID K1 and GRID K2 cards are extremely old… So gaming performance might not be up to your standard. Also keep in mind that if using Linux or a Thin/Zero client that runs on Linux, that relative mouse does not work with the Linux version of the Horizon client.

The only issues I experience are with some video overlays: when the video starts to play, the graphical session freezes. This only started in the last 4 months, so it’s either related to a driver issue or an ESXi update.

Your performance issues are most likely due to the fact you don’t have a GPU designed for VDI. I have a feeling that your CPU is encoding the h.264 stream instead of being offloaded to the card.

There are other alternatives though. For example, I just ordered an AMD S7150 x2, which I should receive in the next few weeks. It’s a newer, higher-performing card. You might find these on eBay!

Let me know if I answered your questions or if you have any more!

Cheers,

Stephen

Thanks, Stephen! I greatly appreciate the reply. I was worried about the age of the K1/K2 cards, but I figured it’d be better than paying for the licensing needed for newer cards. I’m not a gamer at all; I initially bought the card from a friend for transcoding on my server, but decided to switch gears and use it for VDI.

For my desktop, I use a Dell Optiplex 7060 Micro, so it should perform better than a thin client.

Do you know of a way for the h.264 stream to be computed by the GPU instead of CPU? I’ll look into that now. Those AMD S7150 cards look rather impressive. No licensing required for them to run in VDI?

Hey Mike,

My pleasure!

Essentially, when it comes to VDI, there are two ways to do it “properly”.

1) No 3D acceleration – In these environments there’s no 3D acceleration, and the PCoIP and/or h.264 Blast session is encoded on the host’s CPU. Usually these deployments heavily modify the guest OS to disable as many graphical features as possible, giving the user only what’s required for daily computing.

2) 3D acceleration – In this case, a 3D acceleration card is used. You can’t just use any card; it has to be supported by VMware Horizon. In a properly functioning environment, the GPU provides both 3D acceleration and h.264 encoding (for the Blast session). This allows the CPU to be used strictly for CPU work (much like your desktop computer).

Until 5 or 6 minor versions ago, you wouldn’t have been able to use a non-certified GPU; you’d simply get a black screen. Around then, however, VMware started allowing the Horizon agent to be installed on physical PCs, so technically hacking a consumer GPU into the VM can now work, but acceleration is limited and the GPU will not encode the h.264 stream. Info on this can be found here: https://www.stephenwagner.com/2019/05/01/install-vmware-horizon-agent-on-physical-pc/ . I think this mechanism is what has allowed you to use your own card where traditionally it wouldn’t have worked. It’s another reason your performance isn’t the best.

Also keep in mind there are a number of requirements for proper 3D acceleration, such as the maximum number of displays, resolutions, etc… Once you exceed these, certain components (such as h.264 encoding) will fall back from the GPU to the CPU.

For example, my Zero client supports 3 displays at super high resolution, however if I use those maximums, they exceed the BLAST and Horizon maximums for display count and max resolution for proper 3D acceleration and h.264 encoding. I had to resort to only using 2 displays and a max resolution of 1920×1080 on both of them.

One final comment: the GRID K1 has been an absolute delight as a daily driver. I’m only using ¼ of the card’s capabilities with the K180Q profile. The K2 performs better but doesn’t allow as many concurrent users.

I’m excited to get my hands on the AMD FirePro S7150 x2 MxGPU, as this is another certified card. It uses a different mechanism than the Nvidia card (Nvidia uses vGPU, AMD uses MxGPU). MxGPU uses SR-IOV for shared passthrough, however since my server doesn’t support it I’m hoping to pass through an entire GPU (1 of 2 GPUs) directly to the VM without using SR-IOV.

I hope this clears up some more stuff!

Cheers,

Stephen

Thanks again, Stephen. I appreciate the help and quick response! I’ll keep investigating and testing on my end.

I’m now looking at the S9150 cards (strictly due to the price point on eBay and the lack of availability of the non-x2 S7150 cards) and might give one of those a shot. Bringing my desktop session down to only 2x 1080p monitors would be a problem for me, but it may be worth trying that card or a K2, whichever will fit in my Cisco, to see what kind of performance I can get on 3x 1080p displays.

Mike

Hi Mike,

I just checked the HCL, and can’t find the AMD S9150 on the list so I would recommend not purchasing that card because you’ll probably be in the same boat as you are now (using CPU encoding) only with a worse card.

The display limit might change depending on the card. I’m going to do some testing with the S7150 x2 and I’ll let you know what I can push it to!

I’ll be doing a big writeup and a video on my YouTube channel on the S7150 x2 once I get it.

Stephen

Well I’m glad you looked! Thanks, Stephen! I look forward to it!

Also looking forward to a post about the S7150x2. I recently purchased one and have been attempting to get it running in passthrough (not MxGPU) on ESXi 6.5 with a Linux Horizon guest. I’ve tried to get the amdgpu-pro drivers working on several guests so far, but I’m unable to get the display to work after installing the drivers and attaching one of the S7150x2 GPUs. I’ve tried CentOS 7.7, CentOS 8.1, and Ubuntu 18.04.2 without any luck.

Hi Allen,

What kind of issues are you having? I haven’t done much with Linux guests with the exception of vSGA. But I did see that they have drivers for Linux, so technically the S7150 X2 should be working?

Are you having PCI passthrough issues with the physical host?

Stephen

Hi Stephen,

I was originally having issues configuring the MxGPU Virtual Functions on my ESXi 6.5 server running on a Dell R720. The setup script (mxgpu-install.sh), which is supposed to create the Virtual Functions through the CLI, completes successfully and states the VFs will be available after a reboot, but the VFs do not show up after rebooting. (SR-IOV and Intel VT are enabled in the BIOS.)

Digging a bit deeper, I found the following in dmesg on ESXi after the reboot:

    amdgpuv_log: amdgpuv_pci_gpuiov_init:101: [amdgpuv]: initialize gpu iov capability successfully
    PCI: 176: Base address register is of length 0
    PCI: 786: Unable to allocate 0x20000000 bytes in pre fetch mmio for PF=0000:07:00.0 VF-BAR[0]
    PCI: 866: Failed to allocate and program VF BARs on 0000:07:00.0
    WARNING: PCI: 1470: Enable VFs failed for device @0000:07:00.0, Please make sure the system has proper BIOS installed and enabled for SRIOV.

So I decided that it would be easier to just pass the GPU directly through and avoid the MxGPU VFs since I didn’t plan on using many guests.

The passthrough seems to pass the GPU to the Linux guest just fine, with the card showing up in the ‘lspci’ output. It just seems that the driver install reconfigures the display in a way that leaves me unable to determine the next step. The vSphere console no longer works, VNC shows a black screen, and installing the Horizon agent doesn’t provide a view into the system either.

Next on my list is to attempt PCI passthrough of the card with a Windows Guest.

Allen

Hi Allen,

Interesting… I’d definitely try passing it through to a Windows VM; this way you can verify the GPU hasn’t been fried, damaged, or overheated to the point where it’s no longer working.

Also (I’ve had this issue), some systems don’t pass through PCIe cards nicely or properly. On one of my systems I had a similar issue doing passthrough with a simple small Quadro card. What server, motherboard, and CPU are you using?

Cheers,

Stephen

Hi Stephen,

Successfully passed through the GPU to a Windows 10 guest, installed the drivers, and got Horizon up and running fairly quickly. Works great so far! Feels much smoother than the Nvidia K2 I used previously with vGPU.

Server I’m using is a Dell Poweredge R720, with dual E5-2680 v2 2.80GHz processors.

Still hoping to get a Linux guest working, so my next step may be to find some older AMD drivers for Linux and see if I have any luck with those.

Allen

I’m glad to hear it worked. This is completely unrelated, but could you do me a favor and test a YouTube and MP4 video in the Horizon session? I’ve heard the S7150x2 doesn’t provide h.264 inside of sessions but I haven’t had the chance to test it myself yet (waiting on more hardware).

As for the Linux guest, have you applied the recommended VM advanced settings from their deployment guide? These include the pciHole start and end parameters.


Hello Stephen, I’m using a translator because I don’t understand any English. I just read several of your posts, especially the one about the Nvidia GRID K1 in the ProLiant ML310e Gen8. I bought this server almost as a gift to myself, since I’m interested in having my own movie station at home. The catch is that I’m not a programmer and servers are new to me, though I do understand some of what I’ve read.

Given your expertise, you probably know Plex, the application that turns your computer into a media server. I’ve used it on my personal computer, but I want to see whether I can run it on this ProLiant server. The question comes up because it won’t transcode unless I install a card like the Nvidia one you mention in your post.

But then I saw another post of yours (https://www.stephenwagner.com/2020/10/10/nvidia-vgpu-grid-k1-k2-no-h264-session-encoding-offload/) where you explain that there is a problem with encoding, yet you reply to someone that there is no problem with transcoding.

I also think it could work for me as a workstation, since I’m a graphic designer, and based on what you used it for I think it would be great. I would only run software like Photoshop and the rest of the Adobe family.

Now come my questions:

1. Is the equipment I bought too obsolete for what I want to do?

2. Will transcoding let me use Plex to watch movies on different devices (PS4, Smart TV, tablet)?

3. Can I run all of this on Windows Server, or do I necessarily need a Windows virtual machine?

I would be very grateful if you could answer. If not, I’ll understand; what I want to do isn’t very important, and I don’t expect you to spend your time on this kind of question. Either way, thank you in advance for taking the time to read this.

Hi Ricardo,

Thanks for the questions. The GRID K1 is very old and no longer supported. Drivers are no longer being actively developed, and the likelihood of getting the card to work is slim. I’d recommend using a different GPU instead.

Hope that helps!