Today I’m going to show you the process to install Horizon Agent for Linux on Ubuntu 22.04 LTS. We’ll be installing the Horizon Agent for Linux from VMware Horizon 8 version 2209.

The official documentation from VMware is helpful, but unfortunately doesn’t provide all the information to get up and running quickly, which is why I’ve put together this guide as a “Quick Start”.

Please note that this guide only takes you to the point where you can install the NVIDIA vGPU drivers and the Horizon Agent for Linux on the VM. This will provide you with a persistent VM that you can use with Horizon, and the instructions can be adapted for use in a non-persistent instant clone environment as well.

I highly recommend reading VMware’s documentation for Linux Desktops and Applications in Horizon.

Requirements

- VMware Horizon 8 (I’m running VMware Horizon 8 2209)

- Horizon Enterprise or Horizon for Linux Licensing

- Ubuntu 22.04 LTS Installer ISO (download here)

- Horizon Agent for Linux (download here)

- Functioning internal DNS
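Since functioning internal DNS is a requirement, it can save time to confirm name resolution from the Ubuntu VM before going further. The following is a minimal sketch, not part of VMware's tooling: `check_dns` is a hypothetical helper name, and it only tests forward resolution through the system resolver.

```shell
#!/usr/bin/env bash
# check_dns is an illustrative helper (not a VMware tool).
# It succeeds if the given name resolves through the system resolver.
check_dns() {
    local name="$1"
    if getent hosts "$name" > /dev/null; then
        echo "OK: $name resolves"
    else
        echo "FAIL: $name does not resolve" >&2
        return 1
    fi
}
```

For example, `check_dns horizon-cs.example.internal` would test a Connection Server name; that hostname is a placeholder, so substitute your own.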

Instructions

- Create a VM on your vCenter Server, attach the Ubuntu 22.04 LTS ISO, and install Ubuntu

- Install any root CAs or make any modifications you need for network access (usually not needed unless you’re on an enterprise network)

- Update Ubuntu as root

apt update

apt upgrade

reboot

- Install software needed for VMware Horizon Agent for Linux as root

apt install make gcc libglvnd-dev open-vm-tools open-vm-tools-dev open-vm-tools-desktop

- Install your software (Chrome, etc.)

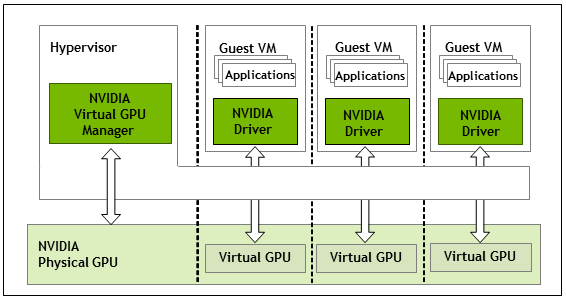

- Install NVIDIA vGPU drivers if you are using NVIDIA vGPU (this must be performed before installing the Horizon Agent). Make sure the installer modifies and configures the X configuration files.

- Install the Horizon Agent For Linux as root (accepting TOS, enabling audio, and disabling SSO).

See Command-line Options for Installing Horizon Agent for Linux.

./install_viewagent.sh -A yes -a yes -S no

- Reboot the Ubuntu VM

- Log on to your Horizon Connection Server

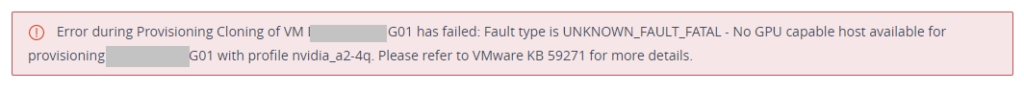

- Create a manual pool and configure it

- Add the Ubuntu 22.04 LTS VM to the manual desktop pool

- Entitle the user account to the desktop pool and assign it to the VM

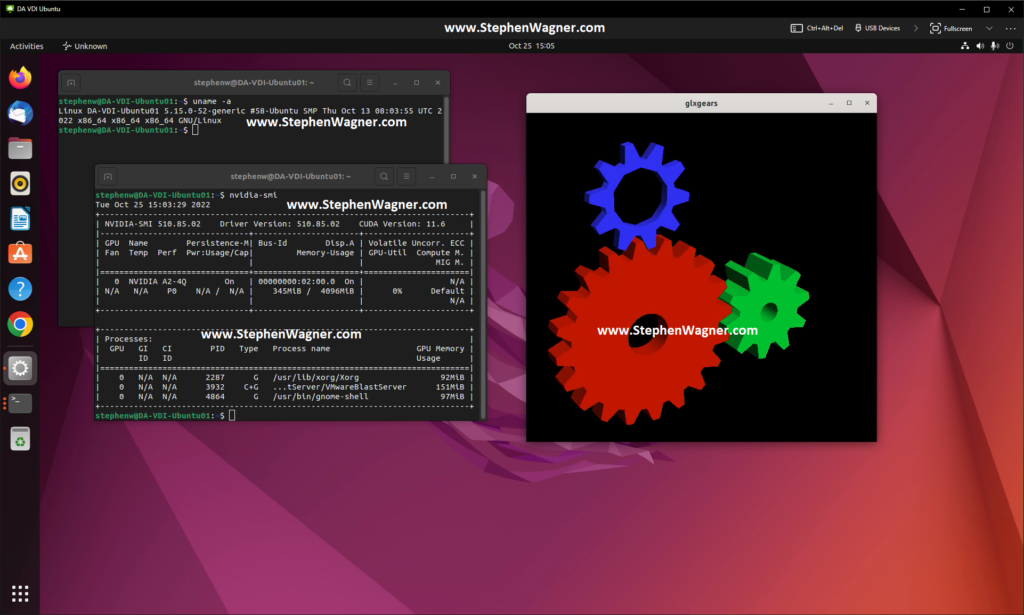

- Connect to the Ubuntu 22.04 Linux VDI VM from the VMware Horizon Client
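The guest-side commands from the steps above can be collected into one script, which may help when adapting this guide for a template or instant clone image. This is a sketch under assumptions: `run`, `prepare_guest`, `install_agent`, and the `DRY_RUN` variable are illustrative names of my own, the commands themselves are copied from this guide, and the script defaults to only printing what it would run.

```shell
#!/usr/bin/env bash
# Sketch: the guest preparation commands from this guide, in order.
# run() and DRY_RUN are illustrative helpers, not part of any VMware tooling.
DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands only, 0 = actually run them

run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Update Ubuntu and install the packages needed by the Horizon Agent.
# Run as root; reboot manually afterwards, as in the steps above.
prepare_guest() {
    run apt update &&
    run apt upgrade &&
    run apt install make gcc libglvnd-dev open-vm-tools open-vm-tools-dev open-vm-tools-desktop
}

# Install the agent: accept TOS (-A yes), enable audio (-a yes), disable SSO (-S no).
# Run as root from the directory containing install_viewagent.sh.
install_agent() {
    run ./install_viewagent.sh -A yes -a yes -S no
}
```

Calling `prepare_guest` and then `install_agent` with the default settings prints the commands for review; setting `DRY_RUN=0` executes them. The NVIDIA vGPU driver install and the reboots are deliberately left as manual steps between the two functions.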

You should now be able to connect to the Ubuntu Linux VDI VM using the VMware Horizon client. Additionally, if you installed the vGPU drivers for NVIDIA vGPU, you should have full 3D acceleration and functionality.
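If the connection fails, a quick check of the services inside the VM can narrow things down. The sketch below is an assumption-laden example rather than an official procedure: `check_unit` and `check_guest` are hypothetical helpers, and the unit names `open-vm-tools` and `viewagent` are what I would expect on an Ubuntu guest with the agent installed, so verify them on your own system.

```shell
#!/usr/bin/env bash
# Illustrative post-install checks; helper names and unit names are assumptions.
check_unit() {
    local unit="$1"
    if systemctl is-active --quiet "$unit"; then
        echo "OK: $unit is active"
    else
        echo "WARN: $unit is not active" >&2
        return 1
    fi
}

check_guest() {
    local rc=0
    check_unit open-vm-tools || rc=1   # VMware Tools service on Ubuntu
    check_unit viewagent || rc=1       # assumed name of the Horizon Agent service
    return "$rc"
}
```

Running `check_guest` as root after the final reboot reports each service and returns a non-zero status if either one is not active.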