Once I upgraded my Synology NAS to DSM 6.2, I started to experience frequent lockups and freezes on my DS1813+. The unit would become unresponsive and I wouldn’t be able to access it over SSH or the web GUI. In this state, NFS would sometimes become unresponsive as well.

When this occurred, I would need to press and hold the power button to force it to shut down, or pull the power. This is extremely risky as it can cause data corruption.

I’m currently running DSM 6.2.2-24922 Update 2.

The cause

This occurred for over a month until it started to interfere with ESXi hosts. I also noticed that the issue would occur when restarting any of my 3 ESXi hosts, and would definitely occur if I restarted more than one.

During the restarts, while logged in to the web GUI and SSH, I was able to see the memory (RAM) usage skyrocket. Finally, once the swap file had filled up, the kernel would panic and attempt to reduce memory usage (keep in mind my DS1813+ has 4GB of memory).

Analyzing “top” as well as looking at processes, I noticed the Synology index service was causing excessive memory and CPU usage. On a fresh boot of the NAS, it would consume over 500MB of memory.
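If you want to watch for the same pattern without keeping “top” open, something along the lines of the sketch below may help. It is only a rough illustration: it assumes a standard Linux /proc filesystem and a POSIX shell with awk, sort and head available, and the function names mem_used_pct and top_rss are made up for this example (they are not Synology tools). Older kernels may not report MemAvailable, so the sketch falls back to a rougher estimate in that case.

```shell
#!/bin/sh
# Print the percentage of RAM in use, parsed from a meminfo-style file
# (defaults to /proc/meminfo). Uses MemAvailable when the kernel reports it;
# otherwise falls back to MemFree + Buffers + Cached, a rough estimate only.
mem_used_pct() {
    awk '
        $1 == "MemTotal:"     { total = $2 }
        $1 == "MemAvailable:" { avail = $2; have_avail = 1 }
        $1 == "MemFree:"      { free = $2 }
        $1 == "Buffers:"      { buffers = $2 }
        $1 == "Cached:"       { cached = $2 }
        END {
            if (total <= 0) exit 1
            if (!have_avail) avail = free + buffers + cached
            printf "%d\n", (total - avail) * 100 / total
        }
    ' "${1:-/proc/meminfo}"
}

# List the processes with the largest resident memory (VmRSS, in kB),
# largest first. The optional argument is how many to show (default 5).
top_rss() {
    for status in /proc/[0-9]*/status; do
        awk '
            $1 == "Name:"  { name = $2 }
            $1 == "VmRSS:" { rss = $2 }
            END { if (rss > 0) print rss, name }
        ' "$status" 2>/dev/null
    done | sort -rn | head -n "${1:-5}"
}
```

After sourcing the file, running mem_used_pct before and during an ESXi host restart, followed by top_rss 5, should show whether a single process is responsible for the climb.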

The fix (Please scroll down and see updates)

In my case, I only use my Synology NAS for an NFS/iSCSI datastore for my ESXi environment, and do not use it for SMB (Samba/File Shares), so I don’t need the indexing service.

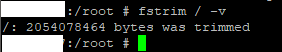

I went ahead and SSH’ed in to the unit, and ran the following command to turn off the service. Please note, this needs to be run as root (use “sudo su” to elevate from admin to root).

synoservice --disable pkgctl-SynoFinder

While it did work, and the memory was instantly freed, the setting did not stay persistent across reboots. To uninstall the indexing service entirely, run the following command.

synopkg uninstall SynoFinder

Doing this resolved the issue and freed up tons of memory. The unit is now stable.

Update May 31st, 2020 – Increased Stability

After troubleshooting I noticed that the majority of stability issues would start occurring when ESXi hosts accessing NFS exports on the Synology diskstation are restarted.

I went ahead and stopped using NFS, started using iSCSI with MPIO, and the stability of the Synology NAS has greatly improved. I will continue to monitor this.

I still have plans to hack the Synology NAS and put my own OS on it.

Update May 2nd, 2020 – It’s still crashing, and really frustrating me

Today I had to restart my 3 ESXi hosts that are connected to the NFS export on the Synology Disk Station. After restarting the hosts, the Synology device has gone in to a lock-up state once again. It appears the issue is still present.

The device is responding to pings, and still provides access to SMB and NFS, but the web GUI, SSH, and console access are unresponsive.

I’m officially going to start planning to either retire this device, as this is unacceptable (especially on top of all the issues over the years), or attempt to hack the Synology DiskStation to run my own OS.

Update April 21st, 2020 – What I thought was the fix

After a few more serious crashes and lockups, I finally decided to do something about this. I went ahead and backed up my data, deleted the arrays, and performed a factory reset on the Synology DiskStation. I also zeroed the metadata and MBR on all the drives.

I then configured the Synology NAS from scratch, used Btrfs (instead of ext4), and restored the backups.

The NAS now appears to be running great and has not suffered any lockups or crashes since. I’ve also been noticing that memory management is working a lot better.

I have a feeling that this issue was caused by the long-term chaining of updates (numerous updates installed over time), or by the use of the ext4 filesystem.

Update March 20th, 2020

As of March 2020, this issue is still occurring across numerous new firmware updates and versions. I’ve tried reaching out to Synology directly on Twitter a few times about this issue, as well as by e-mail (indirectly, regarding something else), and have still not received a response. As of this time, the issue is still occurring on a regular basis on DSM 6.2.2-24922 Update 4. I’ve taken production and important workloads off the device, since I can’t have it continuously crashing or freezing overnight.

Update – August 16th, 2019

My Synology NAS has been stable since I applied the fix. However, after an uptime of a few weeks, I noticed that memory usage does climb when restarting servers (for example, from 6% to 46%). Even so, with the fixes above applied, the unit is stable and no longer crashes.