For over a year and a half I have been working on building a custom NVMe Storage Server for my homelab. I wanted to build a high speed storage system similar to a NAS or SAN, backed with NVMe drives that provides iSCSI, NFS, and SMB Windows File Shares to my network.

The computers accessing the NVMe Storage Server would include VMware ESXi hosts, Raspberry Pi SBCs, and of course Windows Computers and Workstations.

The focus of this project is on high throughput (in the GB/sec) and IOPS.

The current plan for the storage environment is for video editing, as well as VDI VM storage. This can and will change as the project progresses.

The History

More and more businesses are using all-flash NVMe and SSD based storage systems, so I figured there’s no reason why I can’t have build and have my own budget custom all NVMe flash NAS.

This is the story of how I built my own NVMe based Storage Server.

The first version of the NVMe Storage Server consisted of the IO-PEX40152 card with 4 x 2TB Sabrent Rocket 4 NVMe drives inside of an HPE Proliant DL360p Gen8 Server. The server was running ESXi with TrueNAS virtualized, and the PCIe card passed through to the TrueNAS VM.

The results were great, the performance was amazing, and both servers had access to the NFS export via 2 x 10Gb SFP+ networking.

There were three main problems with this setup:

- Virtualized – Once a month I had an ESXi PSOD. This was either due to overheating of the IO-PEX40152 card because of modifications I made, or bugs with the DL360p servers and PCIe passthrough.

- NFS instead of iSCSI – Because TrueNAS was virtualized inside of the host that was using it for storage, I had to use NFS since the host virtualizing TrueNAS would also be accessing the data on the TrueNAS VM. When shutting down the host, you need to shut down TrueNAS first. NFS disconnects are handled way healthier than iSCSI disconnects (which can cause corruption even if no files are being used).

- CPU Cores maxed on data transfer – When doing initial testing, I was maxing out the CPU cores assigned to the TrueNAS VM because the data transfers were so high. I needed a CPU and setup that was better fit.

Version 1 went great, but you can see some things needed to be changed. I decided to go with a dedicated server, not virtualize TrueNAS, and go for a newer CPU with a higher Ghz speed.

And so, version 2 was born (built). Keep reading and scrolling for pictures!

The Hardware

On version 2 of the project, the hardware includes:

- HPE Prolaint ML310e Gen8 v2

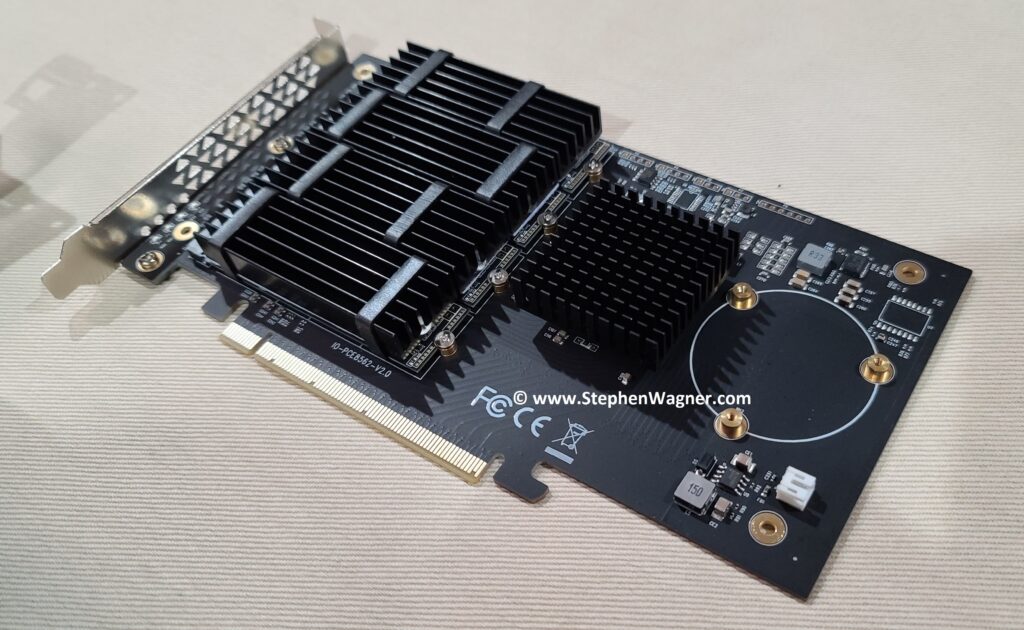

- IOCREST IO-PEX40152 Quad NVMe m.2 to PCIe 16x Card

- 4 x Sabrent Rocket 4 2TB NVMe Drives

- HPE Dual 10G Port 560SFP+ NIC

- 10Gb SFP+ DAC Cable

Notes on the Hardware:

- While the ML310e Gen8 v2 server is a cheap low entry server, it’s been a fantastic team member of my homelab.

- HPE Dual 10G Port 560SFP+ adapters can be found brand new in unsealed boxes on eBay at very attractive prices. Using HPE Parts inside of HPE Servers, avoids the fans from spinning up fast.

- The ML310e Gen8 v2 has some issues with passing through PCIe cards to ESXi. Works perfect when not passing through.

The new NVMe Storage Server

I decided to repurpose an HPE Proliant ML310e Gen8 v2 Server. This server was originally acting as my Nvidia Grid K1 VDI server, because it supported large PCIe cards. With the addition of my new AMD S7150 x2 hacked in/on to one of my DL360p Gen8’s, I no longer needed the GRID card in this server and decided to repurpose it.

I installed the IOCREST IO-PEX40152 card in to the PCIe 16x slot, with 4 x 2TB Sabrent Rocket 4 NVME drives.

While the server has a PCIe 16x wide slot, it only has an 8x bus going to the slot. This means we will have half the capable speed vs the true 16x slot. This however does not pose a problem because we’ll be maxing out the 10Gb NICs long before we max out the 8x bus speed.

I also installed an HPE Dual Port 560SFP+ NIC in to the second slot. This will allow a total of 2 x 10Gb network connections from the server to the Ubiquiti UniFi US-16-XG 10Gb network switch, the backbone of my network.

The Server also have 4 x Hot Swappable HD bays on the front. When configured in HBA mode (via the BIOS), these are accessible by TrueNAS and can be used. I plan on populating these with 4 x 4TB HPE MDL SATA Hot Swappable drives to act as a replication destination for the NVMe pool and/or slower magnetic long-term storage.

I may also try to give WD RED Pro drives a try, but I’m not sure if they will cause the fans to speed up on the server.

TrueNAS Installation and Configuration

For the initial Proof-Of-Concept for version 2, I decided to be quick and dirty and install it to a USB stick. I also waited until I installed TrueNAS on to the USB stick and completed basic configuration before installing the Quad NVMe PCIe card and 10Gb NIC. I’m using a USB 3.0 port on the back of the server for speed, as I can’t verify if the port on the motherboard is USB 2 or USB 3.

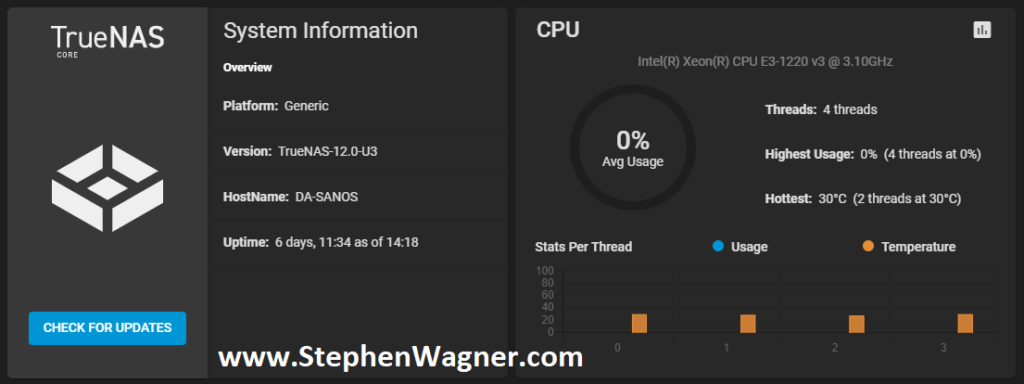

TrueNAS installation worked without any problems whatsoever on the ML310e. I configured the basic IP, time, accounts, and other generic settings. I then proceeded to install the PCIe cards (storage and networking).

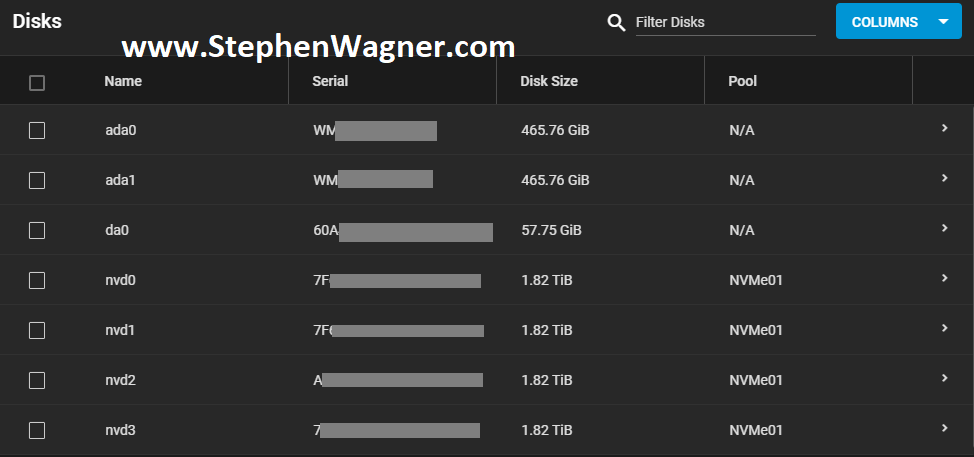

All NVMe drives were recognized, along with the 2 HDDs I had in the front Hot-swap bays (sitting on an HP B120i Controller configured in HBA mode).

The 560SFP+ NIC also was detected without any issues and available to configure.

Storage Configuration

I’ve already done some testing and created a guide on FreeNAS and TrueNAS ZFS Optimizations and Considerations for SSD and NVMe, so I made sure to use what I learned in this version of the project.

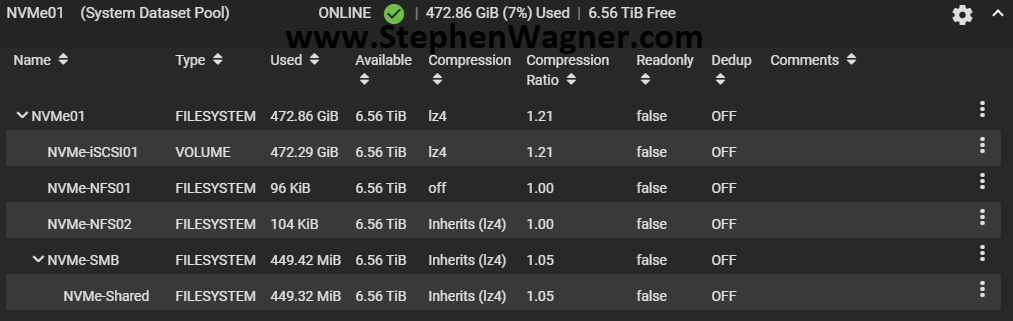

I created a striped pool (no redundancy) of all 4 x 2TB NVMe drives. This gave us around 8TB of usable high speed NVMe storage. I also created some datasets and a zVOL for iSCSI.

I chose to go with the defaults for compression to start with. I will be testing throughput and achievable speeds in the future. You should always test this in every and all custom environments as the results will always vary.

Network Configuration

Initial configuration was done via the 1Gb NIC connection to my main LAN network. I had to change this as the 10Gb NIC will be directly connected to the network backbone and needs to access the LAN and Storage VLANs.

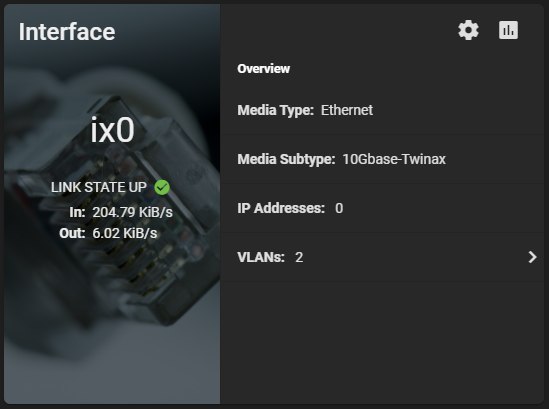

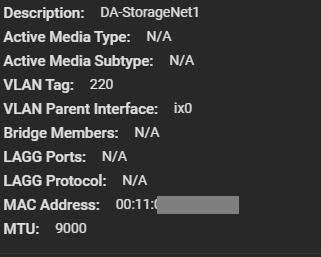

I went ahead and configured a VLAN Interface on VLAN 220 for the Storage network. Connections for iSCSI and NFS will be made on this network as all my ESXi servers have vmknics configured on this VLAN for storage. I also made sure to configure an MTU of 9000 for jumbo frames (packets) to increase performance. Remember that all hosts must have the same MTU to communicate.

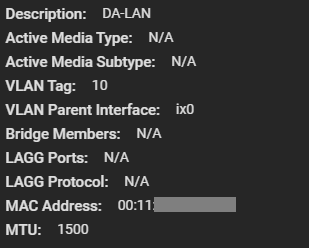

Next up, I had to create another VLAN interface for the LAN network. This would be used for management, as well as to provide Windows File Share (SMB/Samba) access to the workstations on the network. We leave the MTU on this adapter as 1500 since that’s what my LAN network is using.

As a note, I had to delete the configuration for the existing management settings (don’t worry, it doesn’t take effect until you hit test) and configure the VLAN interface for my LANs VLAN and IP. I tested the settings, confirmed it was good, and it was all setup.

At this point, only the 10Gb NIC is now being used so I went ahead and disconnected the 1Gb network cable.

Sharing Setup and Configuration

It’s now time to configure the sharing protocols that will be used. As mentioned before, I plan on deploying iSCSI, NFS, and Windows File Shares (SMB/Samba).

iSCSI and NFS Configuration

Normally, for a VMware ESXi virtualization environment, I would always usually prefer iSCSI based storage, however I also wanted to configure NFS to test throughput of both with NVMe flash storage.

Earlier, I created the datasets for all my my NFS exports and a zVOL volume for iSCSI.

Note, that in order to take advantage of the VMware VAAI storage directives (enhancements), you must use a zVOL to present an iSCSI target to an ESXi host.

For NFS, you can simply create a dataset and then export it.

For iSCSI, you need to create a zVol and then configure the iSCSI Target settings and make it available.

SMB (Windows File Shares)

I needed to create a Windows File Share for file based storage from Windows computers. I plan on using the Windows File Share for high-speed storage of files for video editing.

Using the dataset I created earlier, I configured a Windows Share, user accounts, and tested accessing it. Works perfect!

Connecting the host

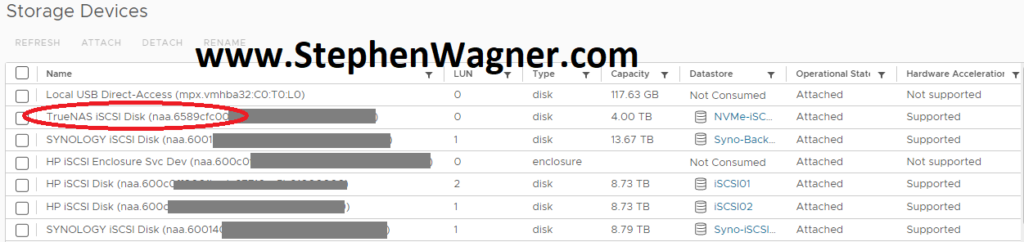

Connecting the ESXi hosts to the iSCSI targets and the NFS exports is done in the exact same way that you would with any other storage system, so I won’t be including details on that in this post.

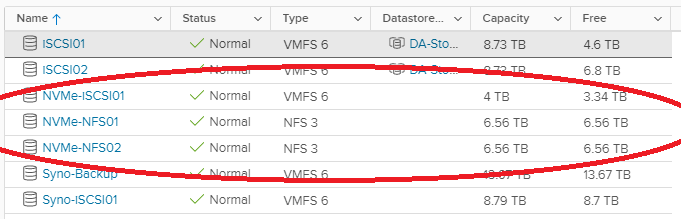

We can clearly see the iSCSI target and NFS exports on the ESXi host.

To access Windows File Shares, we log on and map the network share like you would normally with any file server.

Testing

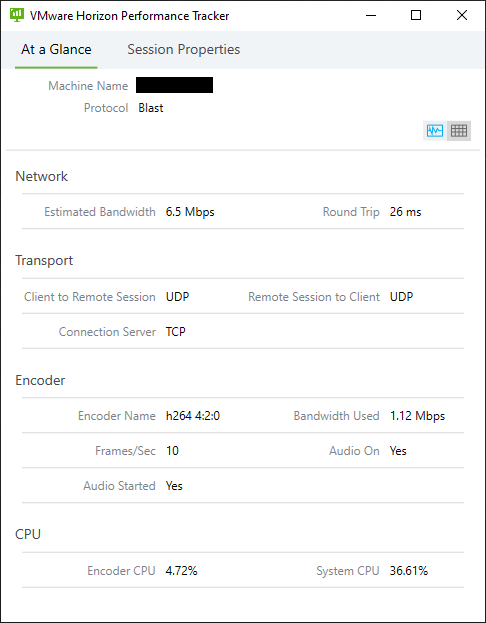

For testing, I moved (using Storage vMotion) my main VDI desktop to the new NVMe based iSCSI Target LUN on the NVMe Storage Server. After testing iSCSI, I then used Storage vMotion again to move it to the NFS datastore. Please see below for the NVMe storage server speed test results.

Speed Tests

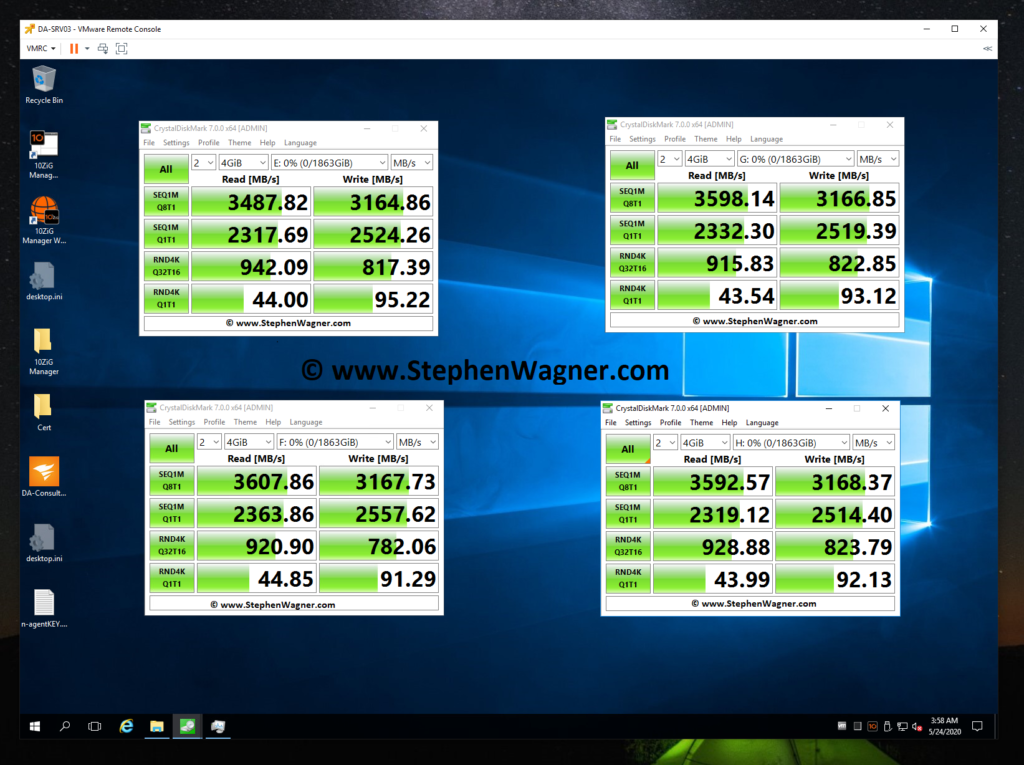

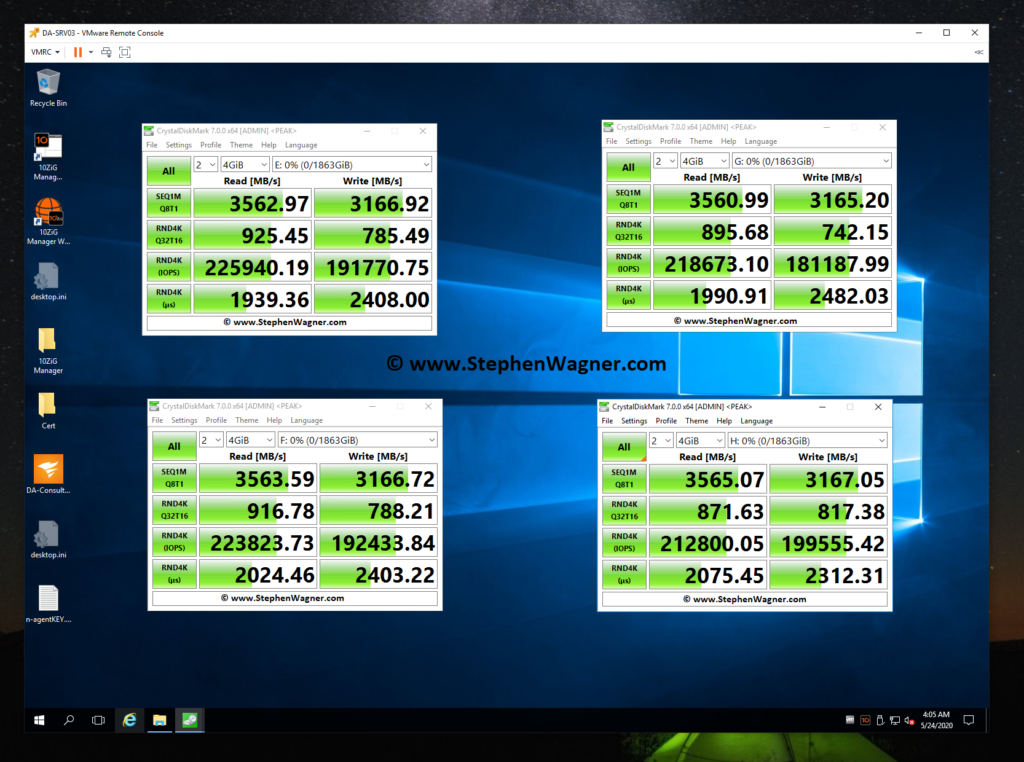

Just to start off, I want to post a screenshot of a few previous benchmarks I compiled when testing and reviewing the Sabrent Rocket 4 NVMe SSD disks installed in my HPE DL360p Gen8 Server and passed through to a VM (Add NVMe capability to an HPE Proliant DL360p Gen8 Server).

Note, that when I performed these tests, my CPU was maxed out and limiting the actual throughput. Even then, these are some fairly impressive speeds. Also, these tests were directly testing each NVMe drive individually.

Moving on to the NVMe Storage Server, I decided to test iSCSI NVMe throughput and NFS NVMe throughput.

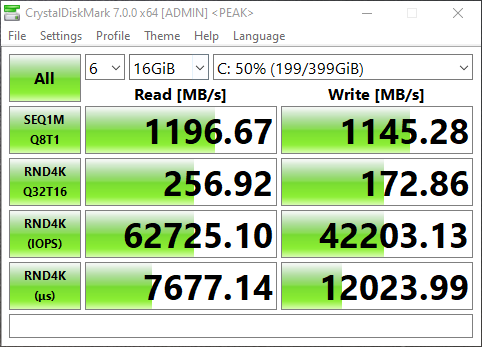

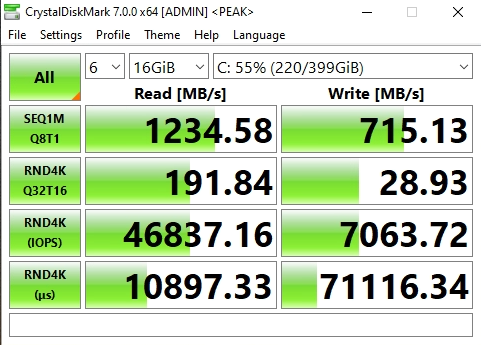

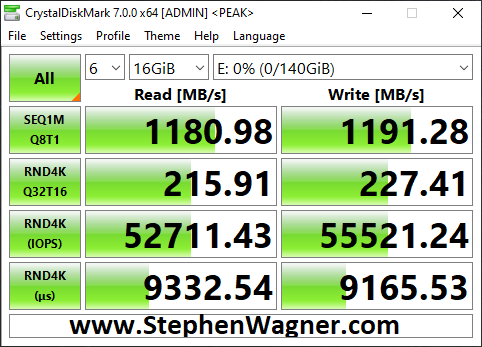

I opened up CrystalDiskMark and started a generic test, running a 16GB test file a total of 6 times on my VDI VM sitting on the iSCSI NVMe LUN.

You can see some impressive speeds maxing out the 10Gb NIC with crazy performance of the NVME storage:

- 1196MB/sec READ

- 1145.28MB/sec WRITE (Maxing out the 10GB NIC)

- 62,725.10 IOPS READ

- 42,203.13 IOPS WRITE

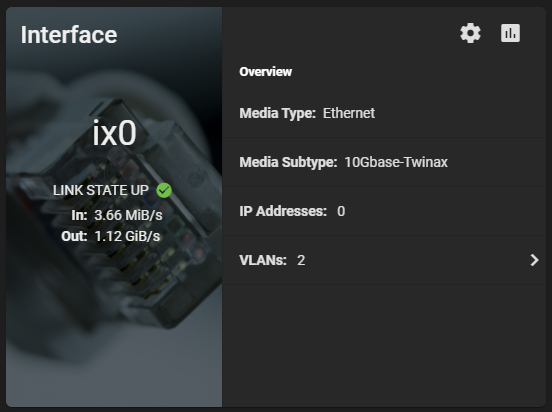

Additionally, here’s a screenshot of the ix0 NIC on the TrueNAS system during the speed test benchmark: 1.12 GiB/s.

And remember this is with compression. I’m really excited to see how I can further tweak and optimize this, and also what increases will come with configuring iSCSI MPIO. I’m also going to try to increase the IOPS to get them closer to what each individual NVMe drive can do.

Now on to NFS, the results were horrible when moving the VM to the NFS Export.

You can see that the read speed was impressive, but the write speed was not. This is partly due to how writes are handled with NFS exports.

Clearly iSCSI is the best performing method for ESXi host connectivity to a TrueNAS based NVMe Storage Server. This works perfect because we’ll get the VAAI features (like being able to reclaim space).

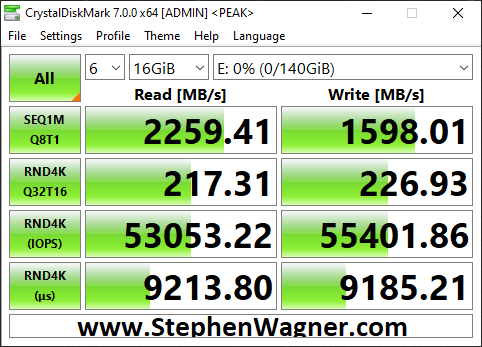

iSCSI MPIO Speed Test

This is more of an update… I was finally able to connect, configure, and utilize the 2nd 10Gbe port on the 560SFP+ NIC. In my setup, both hosts and the TrueNAS storage server all have 2 connections to the switch, with 2 VLANs and 2 subnets dedicated to storage. Check out the before/after speed tests with enabling iSCSI MPIO.

As you can see I was able to essentially double my read speeds (again maxing out the networking layer), however you’ll notice that the write speeds maxed out at 1598MB/sec. I believe we’ve reached a limitation of the CPU, PCIe bus, or something else inside of the server. Note, that this is not a limitation of the Sabrent Rocket 4 NVME drives, or the IOCREST NVME PCIe card.

Moving Forward

I’ve had this configuration running for around a week now with absolutely no issues, no crashes, and it’s been very stable.

Using a VDI VM on NVMe backed storage is lightning fast and I love the experience.

I plan on running like this for a little while to continue to test the stability of the environment before making more changes and expanding the configuration and usage.

Future Plans (and Configuration)

- Drive Bays

- I plan to populate the 4 hot-swappable drive bays with HPE 4TB MDL drives. Configured with RaidZ1, this should give me around 12TB usable storage. I can use this for file storage, backups, replication, and more.

- NVMe Replication

- This design was focused on creating non-redundant extremely fast storage. Because I’m limited to a total of 4 NVMe disks in this design, I chose not to use RaidZ and striped the data. If one NVMe drive is lost, all data is lost.

- I don’t plan on storing anything important, and at this point the storage is only being used for VDI VMs (which are backed up), and Video editing.

- If I can populate the front drive bays, I can replicate the NVMe storage to the traditional HDD storage on a frequent basis to protect against failure to some level or degree.

- Version 3 of the NVMe Storage Server

- More NVMe and Bigger NVMe – I want more storage! I want to test different levels of RaidZ, and connect to the backbone at even faster speeds.

- NVME Drives with PLP (Power Loss Prevention) for data security and protection.

- Dual Power Supply

Let me know your thoughts and ideas on this setup!