When using VMware vSAN 7.0 Update 3 (7U3) and performing a graceful shutdown (and restart) of your entire vSAN cluster, you may run into an issue where all VMs are inaccessible after everything has been powered back on and the hosts have been taken out of maintenance mode.

If you experience this issue, you will also notice that your vSAN datastore appears to be empty (no files or VMs), even though the datastore's usage calculation shows that data is still consuming space.

The Problem

As of vSAN 7.0 Update 3, users can now gracefully shut down and restart their entire vSAN cluster from the GUI instead of having to use the CLI/SSH. While you can still Manually Shut Down and Restart the vSAN Cluster, as one can expect, if there’s an easy way to do it via the GUI, it’ll get used.

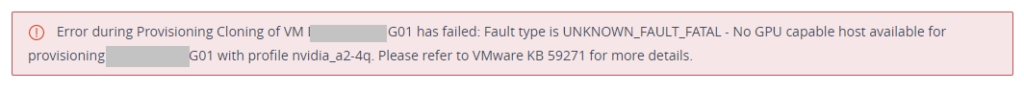

Last night I had a customer call who used this feature, and when bringing up their cluster, all the VMs were marked as inaccessible and the datastore appeared to be empty. What was even more odd is that all the vSAN health information pertaining to the disks looked good.

Connecting to troubleshoot this (with my limited experience with vSAN), I attempted the following:

- Restart vSAN Management Services on all ESXi Hosts

- Restart vSAN Health Services on the vCenter vCSA (then wait 15 minutes and restart ESXi vSAN Management Services)

- Restart one of the ESXi hosts (to troubleshoot quorum)

- Troubleshoot Networking (Issues occurred after physical maintenance)

  - Check MTUs

  - Check LAGs (for vSAN Storage Network)

  - Check Communication and Traffic

After doing all of the above, the VMs still were not accessible.

I had a feeling that this was related to the shutdown and restart (power on) process, so I tried to manually start the vSAN cluster using the following command:

python /usr/lib/vmware/vsan/bin/reboot_helper.py recover

This command returned numerous tracebacks, and ultimately timed out after reporting:

Recovery is not ready, retry after 10s...

The Solution

I was convinced this was related to a bug in the automated scripts, so after adjusting my search terms, I came across a VMware KB titled "How to handle inconsistent cluster power status in vSAN shutdown workflow".

I expected this to help with our issue; however, the KB didn’t exactly describe the symptoms and errors we had. Scenario 3 was close, but the symptoms were not an exact match.

At this point, I initiated a VMware Support ticket with VMware GSS, who after checking, confirmed it was the issue in the KB.

The Shutdown script sets “DOMPauseAllCCPs” to 1 (pausing all functions), and “IgnoreClusterMemberListUpdates” to 1. When you choose to Restart and Power on the cluster, these get set back to 0.

In our case, “IgnoreClusterMemberListUpdates” was set back to 0 during the restart and power on, however “DOMPauseAllCCPs” was still set to 1.

After setting “DOMPauseAllCCPs” to 0 on all hosts, the VMs were immediately accessible, and the issue was resolved.

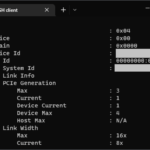

To check these variables:

esxcfg-advcfg -g /VSAN/DOMPauseAllCCPs

esxcfg-advcfg -g /VSAN/IgnoreClusterMemberListUpdates

To set these variables (to undo what the shutdown script did):

esxcfg-advcfg -s 0 /VSAN/DOMPauseAllCCPs

esxcfg-advcfg -s 0 /VSAN/IgnoreClusterMemberListUpdates

When checking or setting these, you must do it on all vSAN nodes (ESXi hosts) in the vSAN Cluster.
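Since the check and the reset have to be repeated on every host, here is a minimal sketch of how the two steps could be combined into one small script to run on each ESXi host over SSH. This is not from the KB or from VMware GSS. It assumes that `esxcfg-advcfg -g` prints a line ending in the numeric value (something like "Value of DOMPauseAllCCPs is 1"), and the `ADVCFG` variable and the function names `parse_value` and `reset_if_set` are my own, made up for illustration. Only use it once support has confirmed you are hitting this issue.

```shell
#!/bin/sh
# Sketch only: run on EACH ESXi host in the vSAN cluster.
# Assumption: `esxcfg-advcfg -g <option>` prints one line that ends with the
# numeric value, e.g. "Value of DOMPauseAllCCPs is 1".

# Hypothetical knob so the command can be swapped out for a dry run.
ADVCFG="${ADVCFG:-esxcfg-advcfg}"

# Print the trailing integer of a line such as "Value of X is 1".
# Prints nothing if the line does not end in a number.
parse_value() {
    printf '%s\n' "$1" | sed -n 's/.*[^0-9]\([0-9][0-9]*\)[[:space:]]*$/\1/p'
}

# Read one advanced option and set it back to 0 only if it is non-zero.
reset_if_set() {
    opt="$1"
    val=$(parse_value "$($ADVCFG -g "$opt")")
    case "$val" in
        0)  echo "$opt is already 0" ;;
        "") echo "Could not read $opt, leaving it untouched" >&2 ;;
        *)  echo "$opt is $val, setting it to 0"
            $ADVCFG -s 0 "$opt" ;;
    esac
}

if command -v "$ADVCFG" >/dev/null 2>&1; then
    reset_if_set /VSAN/DOMPauseAllCCPs
    reset_if_set /VSAN/IgnoreClusterMemberListUpdates
else
    echo "$ADVCFG not found; run this on an ESXi host" >&2
fi
```

The script deliberately does nothing when it cannot parse the current value, so an unexpected output format results in a warning rather than a blind write.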