About a month ago I decided to enable and start using NFS v4.1 in DSM on my Synology DS1813+ NAS. As most of you know, I have a vSphere cluster with 3 ESXi hosts, backed by an HPE MSA 2040 SAN and my Synology DS1813+ NAS.

I did this to test the new version and to try to increase both throughput and redundancy in my environment.

If you’re a regular reader, you know from my original plans (post here), and then from my later issues with iSCSI (post here), that I ultimately set up my Synology NAS as an NFS datastore. At the moment I use my HPE MSA 2040 SAN for hot storage and the Synology DS1813+ for cold storage. I’ve been running this way for a few years now.

Why NFS?

Some of you may ask why I chose NFS. Well, I’m an iSCSI kind of guy, but I’ve had tons of issues with iSCSI on DSM, especially with MPIO on the Synology NAS. The overhead on the unit was horrible (a result of the NAS’s limited hardware specs) for both block-level and file-level iSCSI targets (block target vs. virtualized (fileio) target).

I also found a major issue: if one of the drives was dying or dead, the NAS wouldn’t report it as failed, and it would bring the iSCSI target to a complete halt. That meant days spent figuring out what was going on before finally finding and replacing the drive that caused it.

After spending forever trying to tweak and optimize, I found that NFS worked best for my Synology NAS.

What’s this new NFS v4.1 thing?

Well, it’s not actually that new! NFS v4.1 was released in January 2010 and aimed to support clustered environments (such as virtualized environments, vSphere, ESXi). It includes a session trunking mechanism, also known as NFS multipathing.

We all love the word multipathing, don’t we? As most of you iSCSI and virtualization people know, we want multipathing on everything. It provides redundancy as well as increased throughput.

How do we turn on NFS Multipathing?

According to the VMware vSphere product documentation (here):

While NFS 3 with ESXi does not provide multipathing support, NFS 4.1 supports multiple paths.

NFS 3 uses one TCP connection for I/O. As a result, ESXi supports I/O on only one IP address or hostname for the NFS server, and does not support multiple paths. Depending on your network infrastructure and configuration, you can use the network stack to configure multiple connections to the storage targets. In this case, you must have multiple datastores, each datastore using separate network connections between the host and the storage.

NFS 4.1 provides multipathing for servers that support the session trunking. When the trunking is available, you can use multiple IP addresses to access a single NFS volume. Client ID trunking is not supported.

So it is supported! Now what?

In order to use NFS multipathing, the following must be present:

- Multiple NICs configured on your NAS with functioning IP addresses

- A gateway is only configured on ONE of those NICs

- NFS v4.1 is turned on inside of the DSM web interface

- An NFS export exists on your DSM

- You have a version of ESXi that supports NFS v4.1
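To make the NAS-side prerequisites above concrete, here is a minimal Python sketch that sanity-checks a NIC layout before you start. It requires at least two NICs with valid, distinct IP addresses and a default gateway on exactly one of them. The `NasNic` model and `check_multipath_prereqs` function are hypothetical helpers for illustration only. DSM does not expose this as an API, so you would fill in the values by hand from the Control Panel.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address
from typing import Optional


@dataclass
class NasNic:
    """One NAS network interface as read off the DSM Control Panel (hypothetical model)."""
    name: str
    ip: Optional[str]
    gateway: Optional[str] = None


def check_multipath_prereqs(nics: list[NasNic]) -> list[str]:
    """Return a list of problems; an empty list means the NIC layout looks usable."""
    problems = []
    configured = [n for n in nics if n.ip]
    if len(configured) < 2:
        problems.append("need at least two NICs with IP addresses")
    for n in configured:
        try:
            IPv4Address(n.ip)  # raises ValueError on a malformed address
        except ValueError:
            problems.append(f"{n.name}: invalid IP {n.ip!r}")
    ips = [n.ip for n in configured]
    if len(set(ips)) != len(ips):
        problems.append("duplicate IP addresses")
    # The post's rule: a default gateway on ONE NIC only.
    gateways = [n.name for n in nics if n.gateway]
    if len(gateways) != 1:
        problems.append(f"expected a default gateway on exactly one NIC, found {len(gateways)}")
    return problems


nics = [
    NasNic("LAN1", "192.168.0.10", gateway="192.168.0.1"),
    NasNic("LAN2", "10.10.0.100"),
]
print(check_multipath_prereqs(nics))  # []
```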

So let’s get to it!

Enabling NFS v4.1 Multipathing

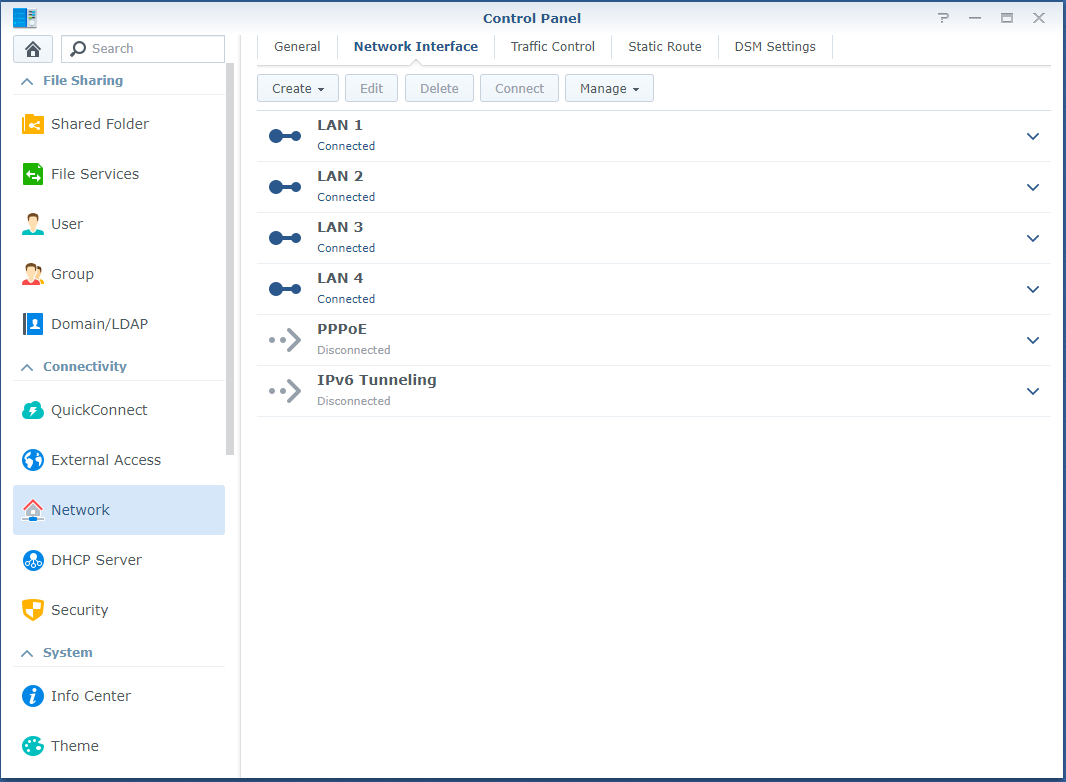

- First, log in to the DSM web interface and configure your network adapters in the Control Panel. As mentioned above, configure the default gateway on only one of your adapters.

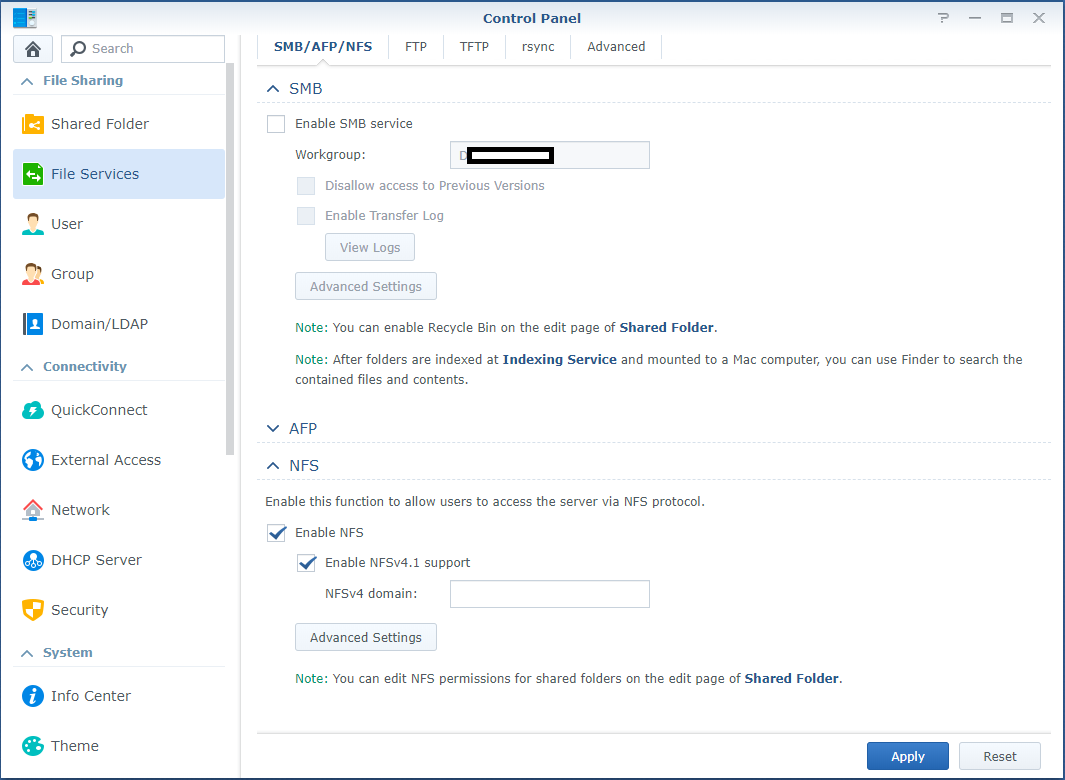

- While still in the Control Panel, navigate to “File Services” on the left, expand NFS, and check both “Enable NFS” and “Enable NFSv4.1 support”. You can leave the NFSv4 domain blank.

- If you haven’t already configured an NFS export on the NAS, do so now. No special configuration beyond the usual is required for v4.1.

- Log in to your ESXi host, go to Storage, and add a new datastore. Choose NFS as the datastore type.

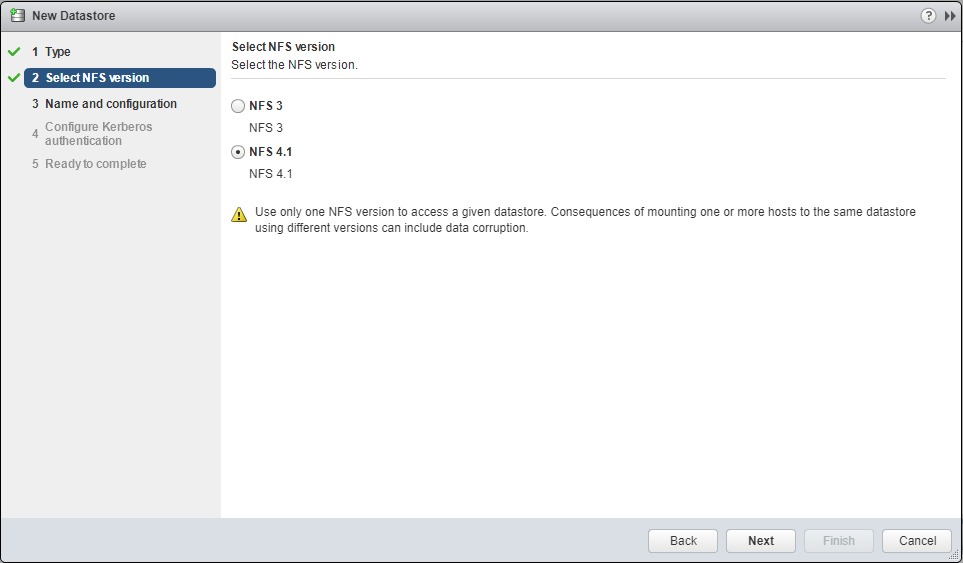

- On the “Select NFS version” page, select “NFS 4.1” and click Next.

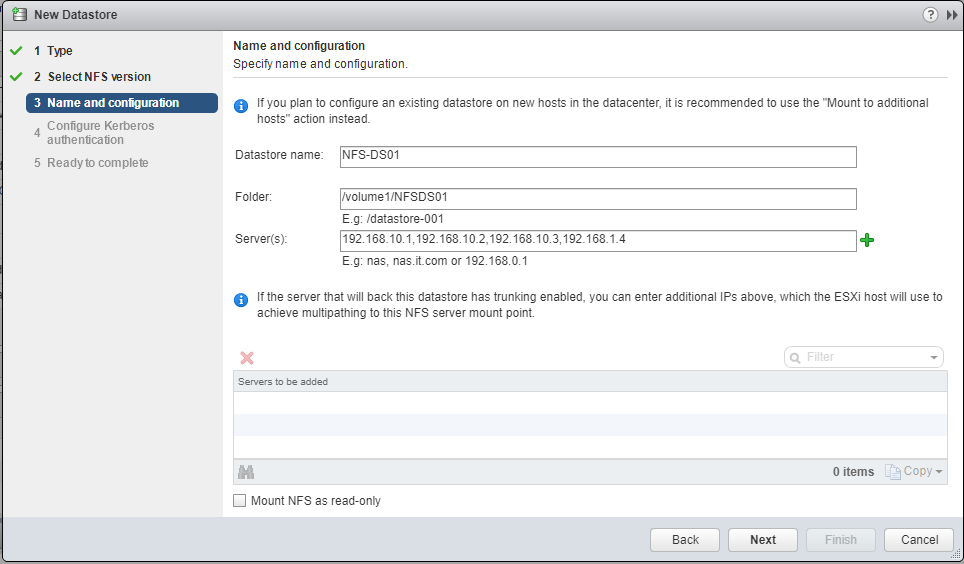

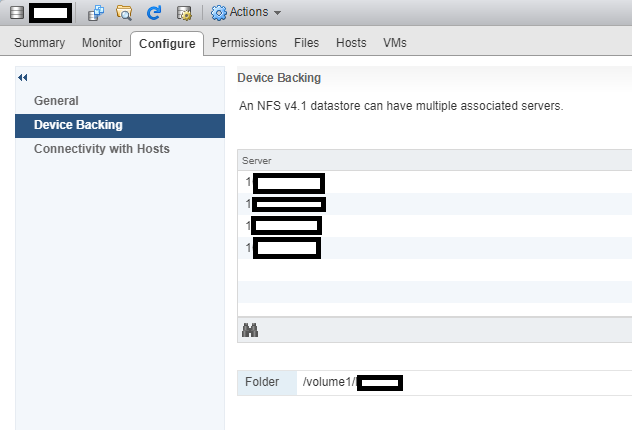

- Enter the datastore name, the folder on the NAS, and the Synology NAS IP addresses, separated by commas. Example below:

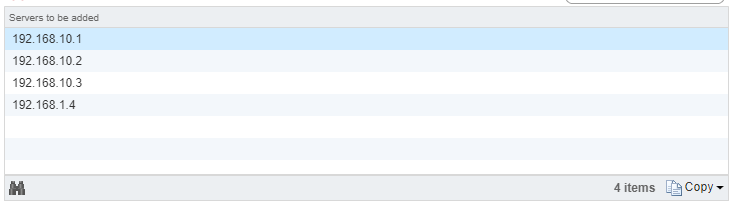

- Press the green “+” and you’ll see the addresses split into the “Servers to be added” list, with each entry reflecting an IP on the NAS. (Please note I made a typo in one of the IPs.)

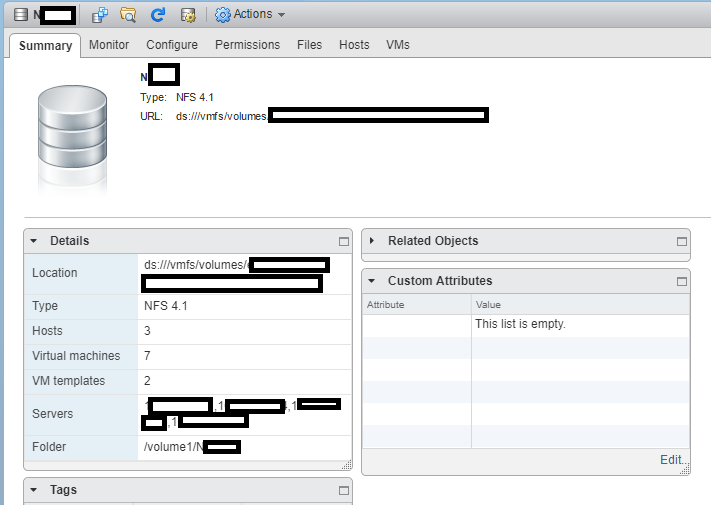

- Finish the wizard, and the datastore will be added.

That’s it! You’re done and are now using NFS Multipathing on your ESXi host!
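If you prefer the command line, the same datastore can be added from the ESXi shell with `esxcli storage nfs41 add`. One commenter further down does exactly this, using `-H` for the comma-separated server IPs, `-s` for the share path and `-v` for the datastore name. The Python sketch below builds that command from the same comma-separated list you type into the wizard. It also rejects malformed or duplicate IPs, the kind of typo mentioned in the screenshot step. The helper name is hypothetical, and the example values are borrowed from that comment.

```python
import shlex
from ipaddress import IPv4Address


def nfs41_add_command(server_ips: str, share: str, volume_name: str) -> str:
    """Build an `esxcli storage nfs41 add` command from a comma-separated IP list,
    similar to what the wizard does when you press the green "+"."""
    ips = [ip.strip() for ip in server_ips.split(",") if ip.strip()]
    for ip in ips:
        IPv4Address(ip)  # raises ValueError on a typo such as "10.11.0.1000"
    if len(set(ips)) != len(ips):
        raise ValueError("the same NAS IP is listed twice")
    return " ".join([
        "esxcli storage nfs41 add",
        "-H", ",".join(ips),           # every NAS IP, no spaces
        "-s", shlex.quote(share),      # export path on the NAS
        "-v", shlex.quote(volume_name),  # datastore name shown in vSphere
    ])


print(nfs41_add_command("20.0.0.10, 20.0.1.11", "/volume2/vmwNFS-1", "NFSDatastore"))
# esxcli storage nfs41 add -H 20.0.0.10,20.0.1.11 -s /volume2/vmwNFS-1 -v NFSDatastore
```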

In my case, I have all 4 NICs in my DS1813+ configured and connected to a switch. My ESXi hosts have 10Gb DAC connections to that switch and can now reach the NAS at faster speeds. During intensive I/O loads, I’ve seen the full aggregated network throughput hit and sustain around 370MB/s.
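For a sense of scale, here is a rough sketch of the arithmetic. It assumes the DS1813+’s four ports are 1 GbE each and that "MB/s" means decimal megabytes per second. Each 1 Gbit/s link tops out at 125 MB/s of raw line rate, so four paths give a 500 MB/s ceiling before protocol overhead.

```python
def link_ceiling_mb_s(links: int, gbit_per_link: float = 1.0) -> float:
    """Raw line-rate ceiling in MB/s (decimal megabytes) for `links` parallel paths.
    Ignores Ethernet/IP/TCP/NFS overhead, so real throughput lands below this."""
    return links * gbit_per_link * 1000 / 8


ceiling = link_ceiling_mb_s(4)  # assumption: four 1 GbE ports on the DS1813+
observed = 370                  # sustained MB/s reported above
print(f"ceiling {ceiling:.0f} MB/s, observed is {observed / ceiling:.0%} of it")
# ceiling 500 MB/s, observed is 74% of it
```

Roughly three quarters of the raw ceiling is a plausible figure once framing and protocol overhead are taken into account.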

After resolving the issues mentioned below, I’ve been running for weeks with absolutely no problems, and I’m enjoying the increased speed to the NAS.

Additional Important Information

After enabling this, I noticed that memory usage on the Synology NAS had increased drastically. It would peak when my ESXi hosts restarted. Eventually the NAS ran out of memory (both physical and swap) and crashed.

After weeks of troubleshooting, I found the processes that were causing this. While the processes themselves were unrelated, the issue only occurred when using NFS multipathing and NFS v4.1. To resolve it, I had to remove the “pkgctl-SynoFinder” package and disable the related services. I could do this in my environment because I only use the NAS for NFS and iSCSI. This resolved the issue, and I created a blog post here outlining how to do it. I also further reduced the NAS’s memory usage by disabling other unneeded services, in a post here aimed at users like myself who only use it for NFS/iSCSI.
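To catch this kind of memory exhaustion before the NAS crashes, one option is to watch `/proc/meminfo`, which DSM exposes because it is Linux-based. Below is a minimal Python sketch. It assumes you have SSH access and a Python 3 interpreter available on the NAS. The 10% threshold is an arbitrary example. The sketch falls back to `MemFree` on older kernels that don’t report `MemAvailable`, which underestimates usable memory.

```python
from pathlib import Path


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value}, values in kB."""
    values = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts and parts[0].isdigit():
            values[key.strip()] = int(parts[0])
    return values


def memory_warnings(info: dict[str, int], min_free_ratio: float = 0.10) -> list[str]:
    """Flag low available RAM or low free swap (threshold is an example value)."""
    warnings = []
    total = info.get("MemTotal", 0)
    avail = info.get("MemAvailable", info.get("MemFree", 0))
    if total and avail / total < min_free_ratio:
        warnings.append(f"RAM low: {avail} of {total} kB available")
    swap_total, swap_free = info.get("SwapTotal", 0), info.get("SwapFree", 0)
    if swap_total and swap_free / swap_total < min_free_ratio:
        warnings.append(f"swap low: {swap_free} of {swap_total} kB free")
    return warnings


meminfo = Path("/proc/meminfo")
if meminfo.exists():  # only meaningful on a Linux host such as DSM
    for w in memory_warnings(parse_meminfo(meminfo.read_text())):
        print(w)
```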

Leave a comment and let me know if this post helped!


Dear Stephen,

I’m trying to set up ESXi for this, but when I put two VMkernel ports on different subnets in one vSwitch, with two physical adapters connected to ESXi, the performance is really bad. When ESXi is connected with one subnet, performance is great. Do you have an example of how to set up multipath NFS on the ESXi side?

That would be great.

Thanks Atze

I have a DS1816 connected with 2 LANs (2x 1Gb, dedicated subnet for NFS) to a 10Gb Cisco SG350 switch, and the ESX 6.7U3 host connects to the same switch with 10Gb.

I tried RAID 5 (4x WD Red), RAID 0 (2x WD Red), and RAID 10 (6x WD Red 4TB). I cannot get performance over 100MB/s (read or write with NFS 4.1 multipathing).

I see that both network cards on the NAS have traffic, so multipathing works. I expected at least 200MB/s, but it never goes past 100.

I do not understand why. Are the disks too slow? I expected RAID 10 would handle at least 2x Gbit LAN.

I tested from a VM and by copying from a local datastore to the NAS datastore, with similar results.

Hi

Update: I tried NFS3. No change. A single link can reach about 70MB/s (to 2 different NFS datastores), so the network can handle more (140 combined).

RAID 0 (4x WD Red): same result. No RAID (only 1 drive): same result. Are the disks really that slow? Would WD Red Pros be faster?

Thanks

You need to make sure that each logical path is also a physical path, and that one IP cannot talk over more than one NIC.

Let’s say you have a single Synology with 4 NICs. I use 2 for NFS 4.1.

Put each NIC on its own subnet, for example:

nic1 10.10.0.100

nic2 10.11.0.100

subnet mask 255.255.0.0.

Create 2 vSwitches, each with its own NIC and VMkernel port.

Switch 1: vmk0, physical NIC1, 10.10.1.100

Switch 2: vmk1, physical NIC2, 10.11.1.100

Set up permissions on the NFS share on the Synology for both IPs.

When you set up the share on the ESX box, make sure you enter both IPs with a comma between them.

For example: 10.10.0.100, 10.11.0.100

You can also install the NFS VIB to get hardware acceleration.
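The rule of thumb above (one subnet per physical path) can be checked mechanically. The Python sketch below uses only the standard `ipaddress` module. It pairs each VMkernel address with the NAS address on the same subnet. It complains if a VMkernel port matches no NAS IP, matches several, or shares a NAS IP with another port. The idea is that traffic to a directly connected subnet generally leaves through the VMkernel port on that subnet, so a clean one-to-one pairing keeps each path on its own NIC. The function name is hypothetical. The addresses are the ones from the example above, written with the /16 mask.

```python
from ipaddress import ip_interface


def pair_paths(nas_ifaces: list[str], vmk_ifaces: list[str]) -> dict[str, str]:
    """Map each VMkernel IP to the single NAS IP it shares a subnet with.

    Raises ValueError if a vmk matches zero or several NAS IPs, i.e. a logical
    path that would not map cleanly onto one physical path."""
    nas = [ip_interface(a) for a in nas_ifaces]
    pairs = {}
    for vmk in map(ip_interface, vmk_ifaces):
        matches = [n for n in nas if vmk.ip in n.network]
        if len(matches) != 1:
            raise ValueError(f"{vmk} shares a subnet with {len(matches)} NAS IPs")
        pairs[str(vmk.ip)] = str(matches[0].ip)
    if len(set(pairs.values())) != len(pairs):
        raise ValueError("two VMkernel ports land on the same NAS IP")
    return pairs


print(pair_paths(["10.10.0.100/16", "10.11.0.100/16"],
                 ["10.10.1.100/16", "10.11.1.100/16"]))
# {'10.10.1.100': '10.10.0.100', '10.11.1.100': '10.11.0.100'}
```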

I would attach some screenshots of mine, but I am in the middle of standing up a new cluster with distributed switches. I have been putting off learning them for a while. I still think the vCenter web interface is illogical and horribly organized.

My setup.

2x Dell R720s (retired)

2x Dell R730s, 12 NICs each

Synology DS1815+ and DS1817+, each with 8x 8TB drives

2x 48-port switches (not stacked)

The R730s have 2x 10G links between them: 1 for vMotion with the vMotion stack and 1 for provisioning with the provisioning stack.

Once I get everything set back up, I will post some pictures of the throughput.

I did have problems with iSCSI as well. Synology’s implementation is crap. Their tech support doesn’t know how to troubleshoot much. I can fix things faster than they can.

The hardware is expensive for what it is, but it runs well: I can saturate all links at 1G each, and the software is nice. I also run some DSM virtual machines.

Hi,

None of this helped. I also tried an LACP bond on the Synology instead of multipath NFS.

Now, after upgrading both ESX servers (HP ESX image) and rebooting, it looks like it is working fine: 150-200MB/s when migrating a VM from the local datastore to the NAS datastore.

Setup:

2x ESX (6.7U3) servers, DL380 Gen10 (1x 10G NIC), connected to an SG350XG

1st vmk 192.168.100.X/24

2nd vmk 192.168.101.X/24 (same NIC)

1x DS1618+ (2x NIC for NFS) connected to same switch

LAN2: 192.168.100.X/24

LAN3: 192.168.101.X/24

Hi sebek,

To achieve multipathing, you need to use either NFS 4.1 or iSCSI with MPIO. You cannot use LACP with these or they won’t work.

Using LACP only aggregates network connections and cannot distribute a single established connection stream over multiple links. This might enhance the performance of multiple hosts accessing your NAS, but a single host won’t enjoy any additional performance.

Stephen
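To illustrate the LACP point above, here is a toy Python sketch of hash-based link selection. It is not the hash policy any particular switch or DSM bond actually uses, since those have their own. It just shows the property that matters. The link is chosen per flow from addresses and ports, so a single TCP connection (such as one NFS connection to port 2049) always lands on the same link. Many different flows can spread across links. The IPs and client port are made-up example values.

```python
import zlib


def pick_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int, n_links: int) -> int:
    """Toy layer-3/4 hash: choose one member link of a bond for a flow.
    Deterministic per flow, which is why one connection never spans two links."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % n_links


# One NFS TCP connection (client port 812 is an arbitrary example) to the NAS on port 2049.
flow = ("192.168.100.11", "192.168.100.20", 812, 2049)
print({pick_link(*flow, n_links=2) for _ in range(1000)})  # a set with a single link index

# Many connections with different client ports can be spread over both links.
print({pick_link("192.168.100.11", "192.168.100.20", p, 2049, 2) for p in range(700, 1000)})
```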

Here is what it looks like when it is running properly. Good times! Distributed switches are running.

https://user-images.githubusercontent.com/6221410/81994361-45eb2100-960d-11ea-9dda-9fe3f203c968.png

Very clear explanations. Thank you.

Hi, I’m trying to follow the above configuration. I have two VMkernel ports in their own VLANs (10.0.1.3 and 10.0.2.3), and the DS1515+ uses 10.0.1.99 and 10.0.2.99 respectively. There are two different standard vSwitches with respective port groups using VLAN tags.

I can mount an NFS 4.1 share over the 192.168.1.0/24 network (the default network, with the gateway) without any problem. When I try to mount it using 4.1 over the two storage IPs, it fails, and the vmkernel log shows the following:

2021-02-04T16:04:21.324Z cpu2:2100490 opID=7d10e2f3)NFS41: NFS41_VSIMountSet:423: Mount server: 10.0.1.99,10.0.2.99, port: 2049, path: /volume1/BTRFS-1TB-NFS, label: SYN-BTRFS-1TB-NFS, security: 1 user: , options:

2021-02-04T16:04:21.324Z cpu2:2100490 opID=7d10e2f3)StorageApdHandler: 977: APD Handle Created with lock[StorageApd-0x430df05aae10]

2021-02-04T16:04:21.425Z cpu0:2100490 opID=7d10e2f3)WARNING: NFS41: NFS41FSGetRootFH:4327: Failed to get the pseudo root for volume SYN-BTRFS-1TB-NFS: Not found

2021-02-04T16:04:21.425Z cpu0:2100490 opID=7d10e2f3)WARNING: NFS41: NFS41FSCompleteMount:3868: NFS41FSGetRootFH failed: Not found

2021-02-04T16:04:21.425Z cpu0:2100490 opID=7d10e2f3)WARNING: NFS41: NFS41FSDoMount:4510: First attempt to mount the filesystem failed: The NFS server denied the mount request

2021-02-04T16:04:21.425Z cpu0:2100490 opID=7d10e2f3)WARNING: NFS41: NFS41_FSMount:4807: NFS41FSDoMount failed: The NFS server denied the mount request

2021-02-04T16:04:21.425Z cpu0:2100490 opID=7d10e2f3)StorageApdHandler: 1063: Freeing APD handle 0x430df05aae10 []

2021-02-04T16:04:21.425Z cpu0:2100490 opID=7d10e2f3)StorageApdHandler: 1147: APD Handle freed!

2021-02-04T16:04:21.425Z cpu0:2100490 opID=7d10e2f3)WARNING: NFS41: NFS41_VSIMountSet:431: NFS41_FSMount failed: The NFS server denied the mount request
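When reading logs like the one above, it can help to pull out just the NFS41 warnings. This Python sketch uses a regular expression written against the line format shown in this comment. Other ESXi builds or modules may format lines differently, so treat the pattern as an assumption. The SunRPC lines quoted in a later comment, for instance, use a different layout and are not matched.

```python
import re

# Written against lines such as:
# 2021-02-04T16:04:21.425Z cpu0:2100490 opID=7d10e2f3)WARNING: NFS41: NFS41FSDoMount:4510: First attempt ...
LINE = re.compile(
    r"^(?P<ts>\S+) cpu\d+:\d+(?: opID=[^)\s]+)?\)WARNING: (?P<module>\w+): "
    r"(?P<func>\w+):(?P<line>\d+): (?P<msg>.*)$"
)


def nfs_warnings(log_text: str, module: str = "NFS41") -> list[tuple[str, str, str]]:
    """Return (timestamp, function, message) for WARNING lines from `module`."""
    out = []
    for raw in log_text.splitlines():
        m = LINE.match(raw.strip())
        if m and m["module"] == module:
            out.append((m["ts"], m["func"], m["msg"]))
    return out


example = ("2021-02-04T16:04:21.425Z cpu0:2100490 opID=7d10e2f3)WARNING: NFS41: "
           "NFS41FSDoMount:4510: First attempt to mount the filesystem failed: "
           "The NFS server denied the mount request")
for ts, func, msg in nfs_warnings(example):
    print(ts, func, msg, sep=" | ")
```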

The weird thing is, I can mount this using NFS3 and a single IP without problems. I have gone through the NFS permissions and ended up adding ‘*’, but I still get the same issue.

Any idea/help would be very much appreciated!!

Hi James,

You said you mount using different methods, but you didn’t mention what those methods are. Could you explain what you’re doing differently? Or is it when you specify a second IP?

Did you enable NFS 4.1 fully on the Synology NAS?

Cheers,

Stephen

Following the example laid out by commenter anthony m on 04/21/2020 at 5:38 PM, I was still unable to mount the NFS datastore hosted on the Synology. In /var/log/vmkernel.log I was seeing “WARNING: SunRPC: 3913: fail all pending calls for client 0x4306b0b1b100 IP 20.0.0.10.8.1 (socket disconnected)”, “WARNING: NFS41: NFS41FSWaitForCluster:3695: Failed to wait for the cluster to be located: Timeout.” and “WARNING: NFS41: NFS41_VSIMountSet:431: NFS41_FSMount failed: Timeout”.

I had tried disabling ARP Attack protection on the HP switch that both the Synology (2 NICs on 20.0.0.10/24 and 20.0.1.11/24) and the ESXi host (two NICs: 20.0.0.20/24 on vmk1, aka vmnic4, and 20.0.1.21/24 on vmk2, aka vmnic5) were connected to. I could ping from the ESXi host to the Synology IPs (esxcli network diag ping --netstack=NFSStorage_Stack --host 20.0.1.11) and from the Synology to the ESXi host (sudo ping 20.0.1.21). However, every time I ran an NFS mount command (esxcli storage nfs41 add -H 20.0.0.10,20.0.1.11 -s /volume2/vmwNFS-1 -v NFSDatastore), I would get a one-minute pause and then “Unable to complete Sysinfo operation. Please see the VMkernel log file for more details.: Sysinfo error: TimeoutSee VMkernel log for details.”
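A side note on the SunRPC warning above: the address `20.0.0.10.8.1` appears to be an RPC universal address, the IPv4 notation described in RFC 5665. In that notation, the last two numbers are the high and low bytes of the port. Decoding it gives the NAS IP and port 2049, the standard NFS port, which suggests the client was at least trying the right endpoint. Here is a small Python sketch of that decoding. The helper name is hypothetical.

```python
def parse_uaddr(uaddr: str) -> tuple[str, int]:
    """Split an IPv4 RPC universal address "h1.h2.h3.h4.p1.p2" into
    (IPv4 address, port), where port = p1 * 256 + p2."""
    parts = uaddr.split(".")
    if len(parts) != 6 or not all(p.isdigit() and int(p) <= 255 for p in parts):
        raise ValueError(f"not an IPv4 universal address: {uaddr!r}")
    host = ".".join(parts[:4])
    port = int(parts[4]) * 256 + int(parts[5])
    return host, port


print(parse_uaddr("20.0.0.10.8.1"))  # ('20.0.0.10', 2049)
```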

The Synology NFS export was working properly with sys security. The Synology NFS permissions (NFS rule) were explicitly set to the exact IPs of the ESXi host, with squash set to No mapping. I was also already using NFS to back up vCenter (set up via the vCenter Server Appliance Management Interface).

Taking the HP switch out of the equation yielded the same results, so it was not a switch DoS-protection problem. I then realized that NFS mounts go through the default TCP/IP stack by default. I, however, had set vmk1 and vmk2 to use a custom TCP/IP stack to isolate NFS traffic from the rest of the ESXi host. Knowing that, I started searching for others who were having problems with NFS and custom stacks. That led to https://communities.vmware.com/ and the post “NFS storage won’t connect over custom TCP/IP stack”. That post in turn led to https://kb.vmware.com/s/article/50112854 “NFS fails when using custom TCP/IP stack”, which details how to get the SunRPC module to use a custom TCP/IP stack.

Now I can mount NFS datastores over NFSv4.1 on an ESXi 6.7 host with a Synology running DSM 6.2.3x.

Hi James, I had a similar problem with an HP P2000 G3 iSCSI SAN. It was caused by a timeout. Try using one VLAN (the same for all paths) or no VLAN at all.

Synology DSM 7 no longer supports NFS 4.1, only up to NFS 4. How do you convert existing NFS mounts from 4.1 to 3 in vSphere? My current 4.1 mount shows as inaccessible and cannot be removed, so I can’t unmount it and remount it as NFS3.

Hi Matt,

There may be a way to enable 4.1 from the console but it won’t be supported.

You may need to use esxcli to remove the old datastores and then remount them as NFS3 to resolve the issue.
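For reference, the esxcli steps described here look roughly like the command sequence below, generated by a hypothetical Python helper. `esxcli storage nfs41` and `esxcli storage nfs` are the esxcli namespaces for NFS 4.1 and NFS 3 datastores respectively. NFS 3 takes a single server address, so you pick one NAS IP. The datastore name, share and IP are example values borrowed from an earlier comment. As the reply below shows, the removal may only succeed while the NAS still offers 4.1.

```python
import shlex


def nfs41_to_nfs3_commands(volume_name: str, share: str, nfs3_host: str) -> list[str]:
    """Commands to drop an NFS 4.1 datastore and re-add the same export as NFS 3.
    NFS 3 on ESXi uses one server address, so `nfs3_host` is a single NAS IP."""
    v, s, h = shlex.quote(volume_name), shlex.quote(share), shlex.quote(nfs3_host)
    return [
        "esxcli storage nfs41 list",                 # confirm the datastore name first
        f"esxcli storage nfs41 remove -v {v}",       # unmount the 4.1 datastore
        f"esxcli storage nfs add -H {h} -s {s} -v {v}",  # remount the export as NFS 3
        "esxcli storage nfs list",                   # verify the NFS 3 mount
    ]


for cmd in nfs41_to_nfs3_commands("NFSDatastore", "/volume2/vmwNFS-1", "20.0.0.10"):
    print(cmd)
```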

That did the trick!

The ESXi hosts weren’t even showing any mount info in the CLI. Following the steps here allowed me to temporarily enable 4.1 long enough to unmount and remount as NFS3.

https://community.synology.com/enu/forum/1/post/144611

I’m glad to hear it worked out for you!